EU Parliament passes landmark AI Act — here’s what to expect

The EU Parliament approves the world’s first comprehensive AI regulations, aiming for safe and ethical AI development in the European Union.

The European Parliament granted final approval to the European Union’s artificial intelligence (AI) law, the EU AI Act, on March 13, marking one of the world’s first sets of comprehensive AI regulations.

The EU AI Act will govern the bloc of 27 member states to ensure that “AI is trustworthy, safe and respects EU fundamental rights while supporting innovation,” according to the EU Parliament’s website.

According to the announcement, the legislation was endorsed with 523 votes in favor, 46 against and 49 abstentions.

Cointelegraph attended a virtual press conference before the voting took place, during which EU Parliament members Brando Benifei and Dragos Tudorache spoke to the press, calling it a “historic day on our long path to regulation of AI.”

Benifei said that the final text of the legislation will help create “safe and human-centric AI” and “reflects the EU parliament priorities.”

The European Commission first proposed the legislation in April 2021, and it picked up speed over the last year as powerful AI models were developed and deployed for mass use. In December 2023, after what Benifei called “long negotiations,” negotiators from Parliament and the Council reached a provisional agreement, and on Feb. 13 Parliament’s Internal Market and Civil Liberties committees voted 71-8 to endorse it.

As lawmakers cast their final votes today, Tudorache said:

“As a Union, we have given a signal to the whole world that we take this very seriously… Now we have to be open to work with others… we have to be open to build [AI] governance with as many like-minded democracies.”

AI Act in Action

After today’s vote, minor linguistic changes will be made during the translation phase, when EU laws are translated into the languages of all member states.

According to a report from EuroNews, the bill will then go to a second vote in April and be published in the Official Journal of the European Union, likely in May.

In November, the bans on prohibited practices will begin to take effect. According to Benifei, these bans will be mandatory from the time of enactment. For the rest of the law, Benifei clarified that “at the beginning, it will not be fully mandatory. There is a timeline.”

What’s affected?

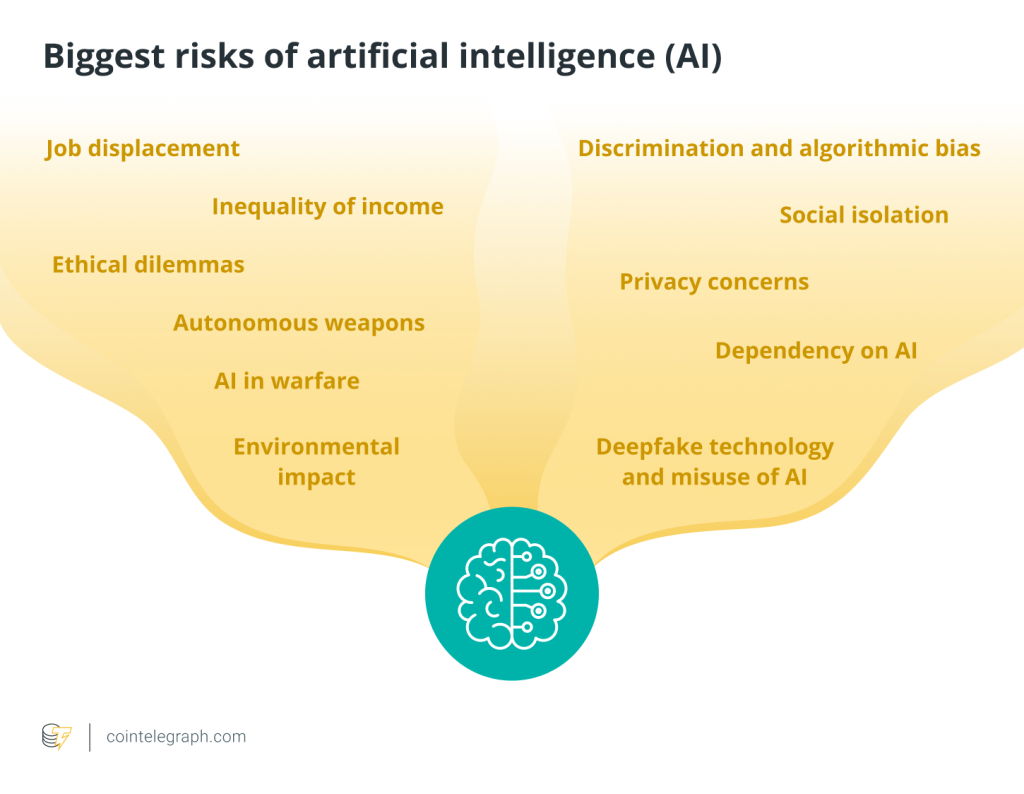

The EU AI Act sorts AI systems into four categories based on the risk they pose to society. Systems in the top category are banned outright, while high-risk systems are subject to the strictest requirements.

According to the EU’s website, “unacceptable risk” is the top category: “All AI systems considered a clear threat to the safety, livelihoods and rights of people will be banned, from social scoring by governments to toys using voice assistance that encourages dangerous behavior.”


Another example would be local authorities using AI-powered remote biometric identification systems to scan faces in public.

“High-risk” applications include critical infrastructure, educational or vocational training, safety components of products, essential private and public services, law enforcement that may interfere with people’s fundamental rights, migration and border control management, and the administration of justice and democratic processes.

The “limited risk” category concerns transparency in AI usage. The EU cites the example of AI chatbots: users need to be aware that they are interacting with a machine, and AI-generated content must be identifiable.

The EU has created a tool called “The EU AI Act Compliance Checker,” which allows organizations to see where they fall within the legislation.

The AI Act allows the “free use” of “minimal-risk” AI, which includes applications such as AI-enabled video games and spam filters.

According to the EU, the “vast majority” of AI systems currently used in the EU fall into this category.

AI chatbots

Lawmakers also added provisions for generative AI models following the explosion in popularity and accessibility of AI chatbots such as ChatGPT, Grok and Gemini.

Developers of general-purpose AI models, including EU startups and the larger companies behind the chatbots named above, will need to provide detailed summaries of the data used to train their systems and comply with EU copyright law.

Under the law, AI-generated deepfake content must also be labeled as artificially manipulated.

Response from tech companies

The AI Act previously drew pushback from local businesses and tech companies, which urged authorities not to overregulate emerging AI technologies at the cost of innovation.

In June 2023, executives from 160 tech companies signed an open letter to EU regulators warning of the consequences for local innovation if the regulations were too strict.

After approving the world’s first comprehensive set of AI legislation, however, the EU Parliament received praise from tech giant IBM. In a statement, Christina Montgomery, IBM’s vice president and chief privacy and trust officer, said:

“I commend the EU for its leadership in passing comprehensive, smart AI legislation. The risk-based approach aligns with IBM’s commitment to ethical AI practices and will contribute to building open and trustworthy AI ecosystems.”
