OpenAI accuses New York Times of hacking AI models in copyright lawsuit

The “hacking” OpenAI mentions in the filing could also be called prompt engineering or “red-teaming,” according to The New York Times’ attorney.

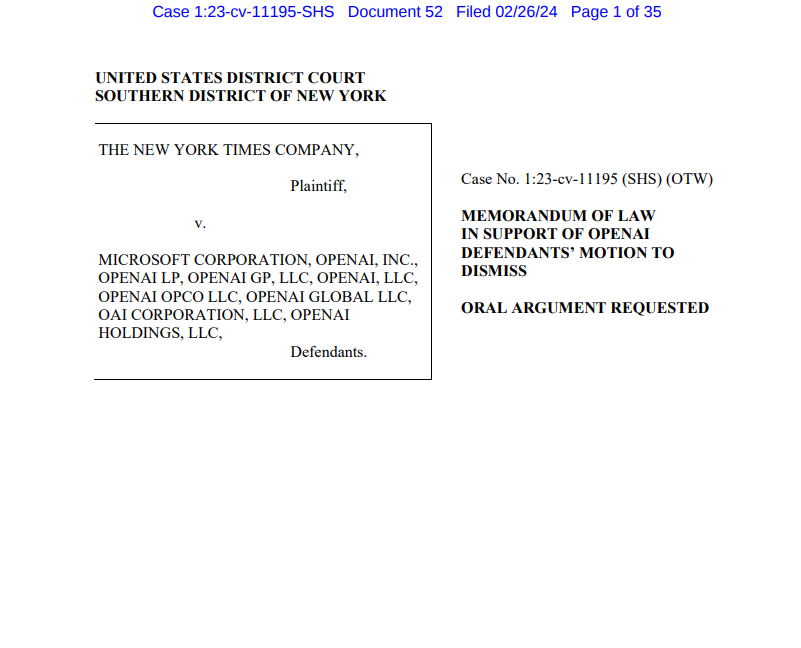

OpenAI has asked a federal judge to dismiss parts of The New York Times’ copyright lawsuit against it, arguing that the newspaper “paid someone to hack ChatGPT” and other artificial intelligence (AI) systems to generate misleading evidence for the case.

In a Manhattan federal court filing on Monday, OpenAI stated that the Times caused the technology to reproduce its material through “deceptive prompts that violate OpenAI’s terms of use.” OpenAI didn’t identify the individual it claims the Times employed to manipulate its systems, and it stopped short of accusing the newspaper of violating anti-hacking laws.

In the filing, OpenAI said:

“The allegations in the Times’s complaint do not meet its famously rigorous journalistic standards. The truth, which will come out in this case, is that the Times paid someone to hack OpenAI’s products.”

What OpenAI calls “hacking” is, according to the newspaper’s attorney Ian Crosby, merely the use of OpenAI’s products to find evidence of the alleged theft and reproduction of The Times’s copyrighted work.

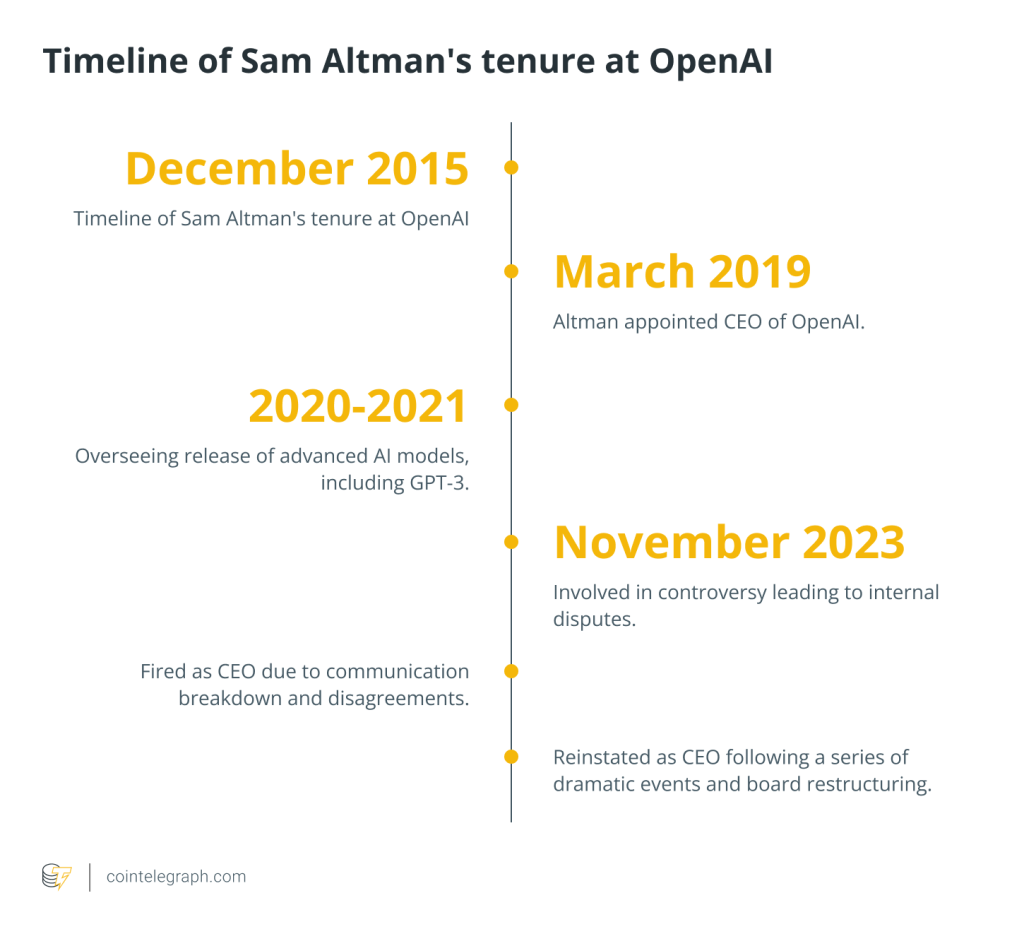

In December 2023, The Times filed a lawsuit against OpenAI and its leading financial supporter, Microsoft. The lawsuit alleges the unauthorized use of millions of Times articles to train chatbots that provide information to users.

The lawsuit cited both the United States Constitution and the Copyright Act to defend the Times’s original journalism. It also pointed to Microsoft’s Bing AI, alleging that it produces verbatim excerpts of the Times’s content.

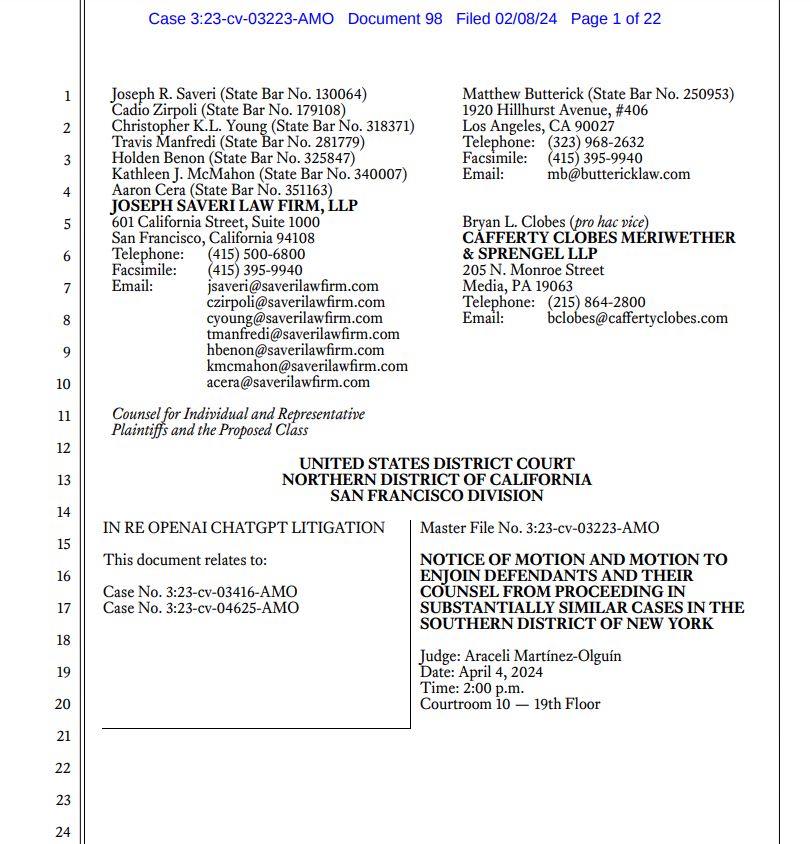

The Times is among many copyright holders suing tech firms for supposedly misusing their content in AI training. Other groups, like authors, visual artists, and music publishers, have also filed similar lawsuits.

OpenAI has previously asserted that training advanced AI models without utilizing copyrighted works is “impossible.” In a filing to the U.K. House of Lords, OpenAI stated that as copyright covers a wide range of human expressions, training leading AI models would be infeasible without incorporating copyrighted materials.

Tech firms argue that their AI systems make fair use of copyrighted material, and they warn that these lawsuits threaten the growth of a potentially multitrillion-dollar industry.

Courts have yet to determine whether AI training qualifies as fair use under copyright law. However, some infringement claims related to the outputs of generative AI systems have been dismissed because there was insufficient evidence that the AI-created content resembled copyrighted works.
