Groq AI model goes viral and rivals ChatGPT, challenges Elon Musk’s Grok

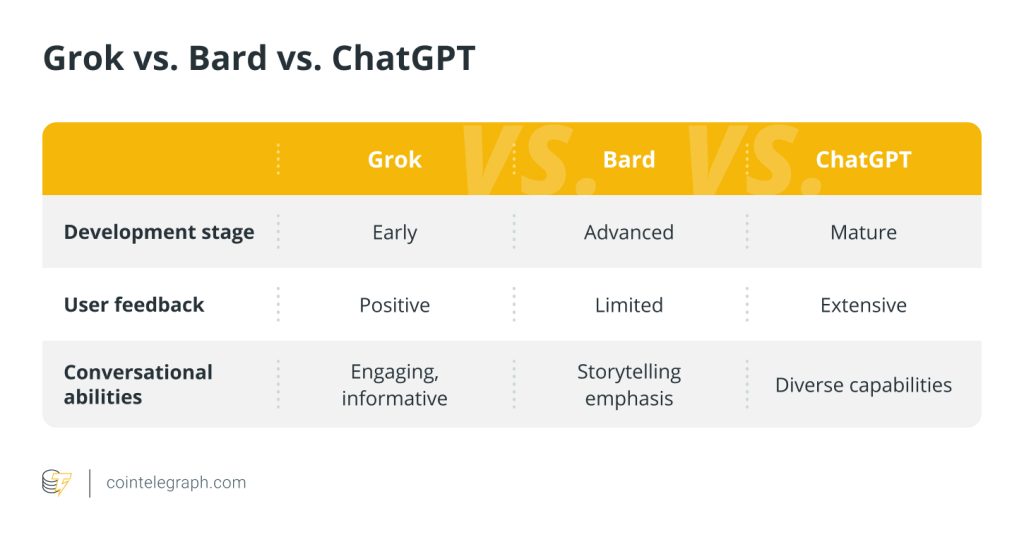

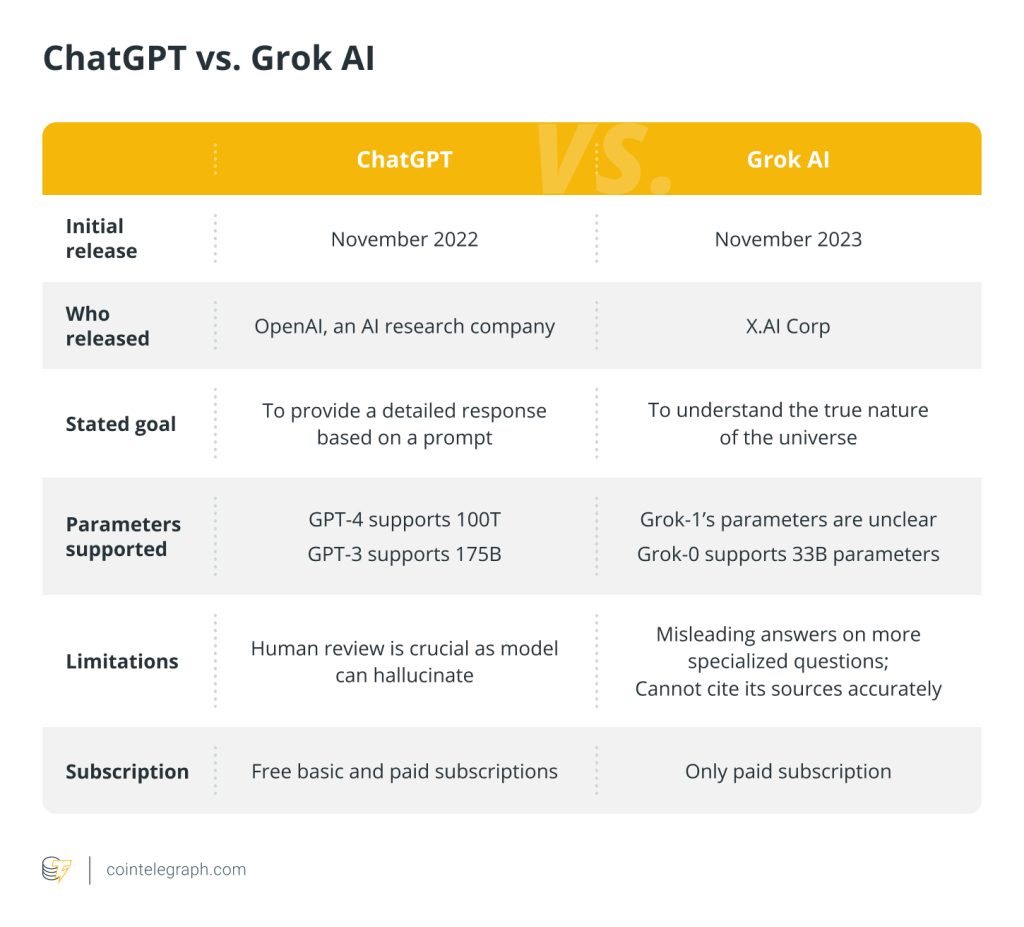

The Groq AI model is gaining widespread attention on social media, challenging ChatGPT’s dominance and drawing comparisons to Elon Musk’s own model, the similarly named Grok.

Groq, the latest artificial intelligence (AI) tool to come onto the scene, is taking social media by storm with its response speed and new technology that may dispense with the need for GPUs.

Groq became an overnight sensation after its public benchmark tests went viral on the social media platform X, showing that its computation and response speeds outperform those of the popular AI chatbot ChatGPT.

The first public demo using Groq: a lightning-fast AI Answers Engine.

It writes factual, cited answers with hundreds of words in less than a second.

More than 3/4 of the time is spent searching, not generating!

The LLM runs in a fraction of a second. https://t.co/dVUPyh3XGV https://t.co/mNV78XkoVB pic.twitter.com/QaDXixgSzp

— Matt Shumer (@mattshumer_) February 19, 2024

The speed comes from a custom application-specific integrated circuit (ASIC) chip that the team behind Groq developed for large language models (LLMs), which allows it to generate roughly 500 tokens per second. In comparison, ChatGPT-3.5, the publicly available version of the model, can generate around 40 tokens per second.
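To put those two throughput figures in perspective, the short Python sketch below works out how long each system would take to generate a single response. The 400-token response length is a hypothetical value chosen only for illustration, and the calculation assumes a steady generation rate, ignoring network delay and the time before the first token appears.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return the time needed to generate num_tokens at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Approximate throughput figures quoted in the article.
GROQ_TOKENS_PER_SECOND = 500
CHATGPT_35_TOKENS_PER_SECOND = 40

# Hypothetical response length, not taken from the article.
RESPONSE_TOKENS = 400

groq_time = generation_time_seconds(RESPONSE_TOKENS, GROQ_TOKENS_PER_SECOND)
chatgpt_time = generation_time_seconds(RESPONSE_TOKENS, CHATGPT_35_TOKENS_PER_SECOND)
speedup = chatgpt_time / groq_time

print(f"Groq: {groq_time:.1f} s")
print(f"ChatGPT-3.5: {chatgpt_time:.1f} s")
print(f"Speedup: {speedup:.1f}x")
```

Under these assumptions, the response takes under a second on Groq and about 10 seconds on ChatGPT-3.5, a difference of roughly 12.5 times. The ratio depends only on the two throughput figures, not on the response length chosen.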

Groq Inc claims to have created the first language processing unit (LPU), on which it runs models, rather than relying on the scarce and costly graphics processing units (GPUs) typically used to run AI models.

Wow, that's a lot of tweets tonight! FAQs responses.

• We're faster because we designed our chip & systems

• It's an LPU, Language Processing Unit (not a GPU)

• We use open-source models, but we don't train them

• We are increasing access capacity weekly, stay tuned pic.twitter.com/nFlFXETKUP

— Groq Inc (@GroqInc) February 19, 2024

However, the company behind Groq is not new. It was founded in 2016, when it trademarked the name Groq. In November 2023, when Elon Musk’s own AI model, also called Grok but spelled with a “k,” was gaining traction, the developers behind the original Groq published a blog post calling out Musk for the choice of name:

“We can see why you might want to adopt our name. You like fast things (rockets, hyperloops, one-letter company names) and our product, the Groq LPU Inference Engine, is the fastest way to run large language models (LLMs) and other generative AI applications. However, we must ask you to please choose another name, and fast.”

Since Groq went viral on social media, neither Musk nor the Grok page on X has made any comment on the similarity between the names of the two tools.


Nonetheless, many users on the platform have begun to compare the LPU-based system with other popular GPU-based models.

One user who works in AI development called Groq a “game changer” for products that require low latency, which refers to the time it takes to process a request and return a response.

side by side Groq vs. GPT-3.5, completely different user experience, a game changer for products that require low latency pic.twitter.com/sADBrMKXqm

— Dina Yerlan (@dina_yrl) February 19, 2024

Another user posted that Groq’s LPUs could offer a “massive improvement” over GPUs when it comes to servicing the needs of AI applications in the future and said they might also turn out to be a good alternative to the “high performing hardware” of the in-demand A100 and H100 chips produced by Nvidia.

This comes at a time when major AI developers are seeking to develop in-house chips so as not to rely on Nvidia’s chips alone.

OpenAI is reportedly seeking trillions of dollars in funding from governments and investors worldwide to develop its own chip to overcome problems with scaling its products.
