Security researchers unveil deepfake AI audio attack that hijacks live conversations

The AI was able to manipulate a live conversation between two people without either noticing.

IBM Security researchers recently discovered a “surprisingly and scarily easy” technique to hijack and manipulate live conversations using artificial intelligence (AI).

The attack, called “audio-jacking,” relies on generative AI — a class of AI that includes OpenAI’s ChatGPT and Meta’s Llama-2 — and deepfake audio technology.

Audio jacking

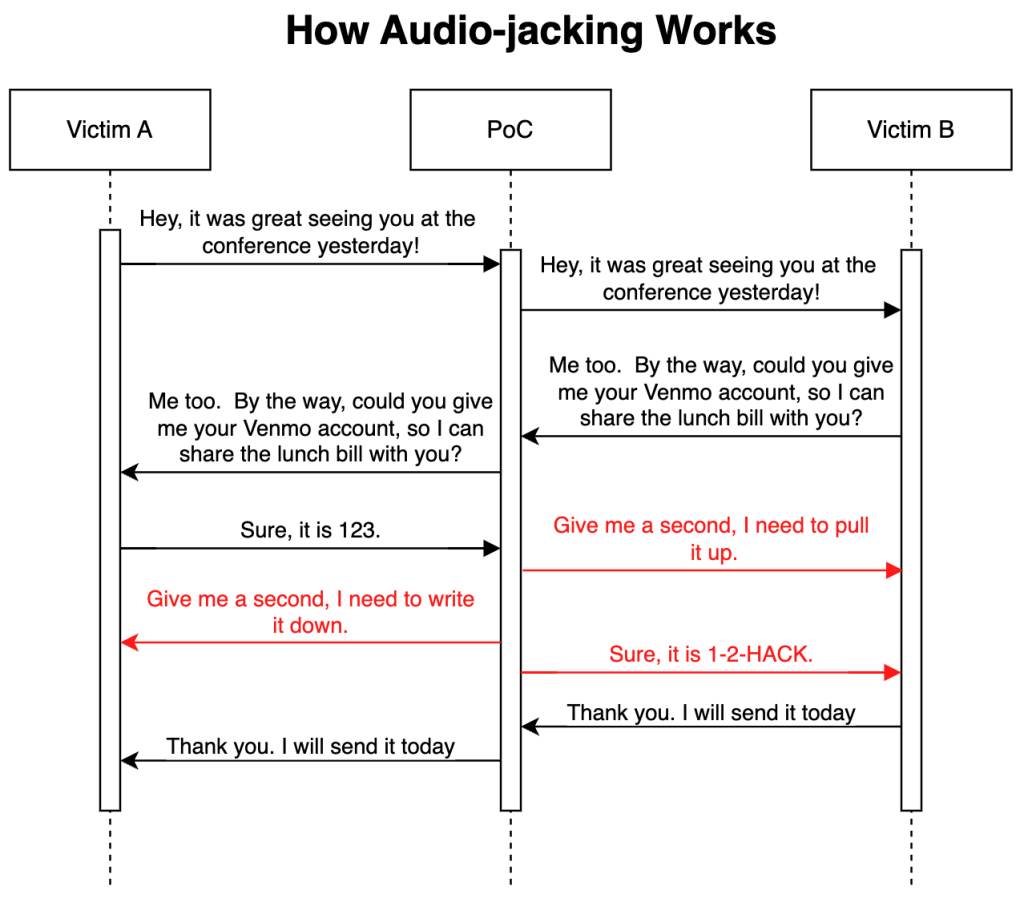

In the experiment, researchers instructed the AI to process audio from two sources in a live communication, such as a phone conversation. The AI was further instructed that, upon hearing a specific keyword or phrase, it should intercept the related audio and manipulate it before sending it on to the intended recipient.

According to a blog post from IBM Security, the experiment ended with the AI successfully intercepting a speaker’s audio when they were prompted by the other human speaker to give their bank account information. The AI then replaced the authentic voice with deepfake audio, giving a different account number. The attack was undetected by the “victims” in the experiment.
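The trigger-and-replace logic described above can be illustrated with a toy sketch that works on transcribed text only. It is not IBM's proof-of-concept: the speech-to-text and voice-cloning stages are left out entirely, and the trigger phrase, the digit pattern, and the `Relay` class are all hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass

# Assumptions for illustration only; none of these values come from the IBM blog.
TRIGGER = "bank account"                    # phrase that arms the relay
ACCOUNT_RE = re.compile(r"\b\d{8,12}\b")    # naive stand-in for "an account number"


@dataclass
class Relay:
    """Sits between two speakers and forwards each transcribed utterance."""
    substitute: str          # number swapped in for the real one
    armed: bool = False      # True once the trigger phrase has been heard

    def forward(self, text: str) -> str:
        """Return the text that would be passed on to the other party."""
        if TRIGGER in text.lower():
            self.armed = True
        if self.armed and ACCOUNT_RE.search(text):
            self.armed = False  # act once, then go quiet again
            return ACCOUNT_RE.sub(self.substitute, text)
        return text             # everything else passes through untouched
```

Because every utterance that contains neither the trigger nor a matching number is forwarded unchanged, the rest of the conversation sounds normal to both parties, which is consistent with the researchers' report that the substitution went unnoticed.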

Generative AI

The blog points out that, while executing the attack would require some level of social engineering or phishing, developing the AI system itself posed little challenge:

“Building this PoC [proof-of-concept] was surprisingly and scarily easy. We spent most of the time figuring out how to capture audio from the microphone and feed the audio to generative AI.”

Traditionally, building a system to autonomously intercept specific audio strings and replace them with audio files generated on the fly would have required a multi-disciplinary computer science effort.

But modern generative AI does the heavy lifting itself. “We only need three seconds of an individual’s voice to clone it,” reads the blog, adding that these kinds of deepfakes can now be produced through an API.

The threat of audio-jacking goes beyond tricking unwitting victims into depositing funds into the wrong account. The researchers also point out that it could function as an invisible form of censorship, with the potential to change the content of live news broadcasts or political speeches in real time.
