California senator slams OpenAI’s opposition to AI safety bill

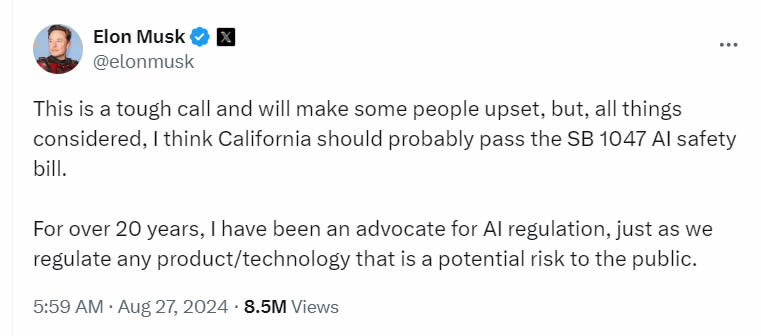

As the debate over SB 1047 intensifies, Wiener remains steadfast in his support for the bill, which he believes is a necessary step toward responsible AI governance.

California State Senator Scott Wiener has publicly criticized OpenAI’s opposition to Senate Bill 1047 (SB 1047), which aims to regulate artificial intelligence technologies.

Wiener introduced the bill in February. It would require AI companies to conduct rigorous safety evaluations of their models before releasing them to the public.

Despite OpenAI’s high-profile objection, Wiener argued that the company’s concerns are unfounded and that the bill is essential for safeguarding both public safety and national security.

OpenAI’s opposition and Wiener’s response

ChatGPT developer OpenAI has expressed its opposition to SB 1047 in a letter addressed to Wiener and California Governor Gavin Newsom.

According to Bloomberg, OpenAI’s chief strategy officer Jason Kwon warned in the letter that the bill could stifle innovation and drive talent out of California, a state that has long been a global leader in the tech industry.

Kwon argued that the AI sector is still in its early stages and that overly restrictive state regulations could hinder its growth. He suggested that federal legislation, rather than state laws, would be more appropriate for governing AI development.

Wiener, however, dismissed these concerns, calling them “tired” and baseless. In a press release issued on Wednesday, Aug. 21, he pointed out that OpenAI’s letter did not criticize any specific provisions of the bill.

He argued that the company’s objections were rooted in a generalized fear of regulation rather than any substantive issues with the bill itself. “OpenAI’s claim that companies will leave California because of SB 1047 makes no sense given that the bill is not limited to companies headquartered in California,” Wiener said.

The core of SB 1047

SB 1047 mandates that AI companies perform comprehensive safety evaluations on their models to identify potential risks before they are released. It also grants the authority to shut down models that pose significant risks.

According to Wiener, these provisions are not only reasonable but also essential for protecting the public from the unforeseen dangers that advanced AI systems could pose. He emphasized that OpenAI has previously committed to conducting such safety evaluations, making the company’s opposition to the bill even more perplexing.

Wiener further highlighted that, despite OpenAI’s insistence on federal regulation, Congress has yet to take meaningful action on AI safety. He drew parallels to California’s data privacy law, which was passed in the absence of federal legislation and has since become a model for other states.

In July, OpenAI expressed support for three Senate bills focused on the safety and accessibility of artificial intelligence. The endorsed bills — the Future of AI Innovation Act, the CREATE AI Act and the NSF AI Education Act — each address distinct aspects of AI.
