Regulators are misguided in efforts to restrict open-source AI

Lawmakers in Europe and California are worried that open-source AI is “dangerous.” On the contrary, there is nothing dangerous about transparency.

Artificial intelligence (AI) policy debates involve many contentious issues, including one that has run throughout the history of computing: the battle between open- and closed-source systems. Today, this fault line has opened again, with lawmakers in California and Europe attempting to restrict “open-weights AI models.”

Open-weights models, like open-source software before them, are publicly available systems whose underlying model weights can be inspected and modified by various parties for varied purposes. Some critics argue that open-sourcing algorithmic models or systems is “uniquely dangerous” and should be restricted. However, arbitrary regulatory limits on open-source AI systems would carry serious downsides, curbing innovation, competition, and transparency.

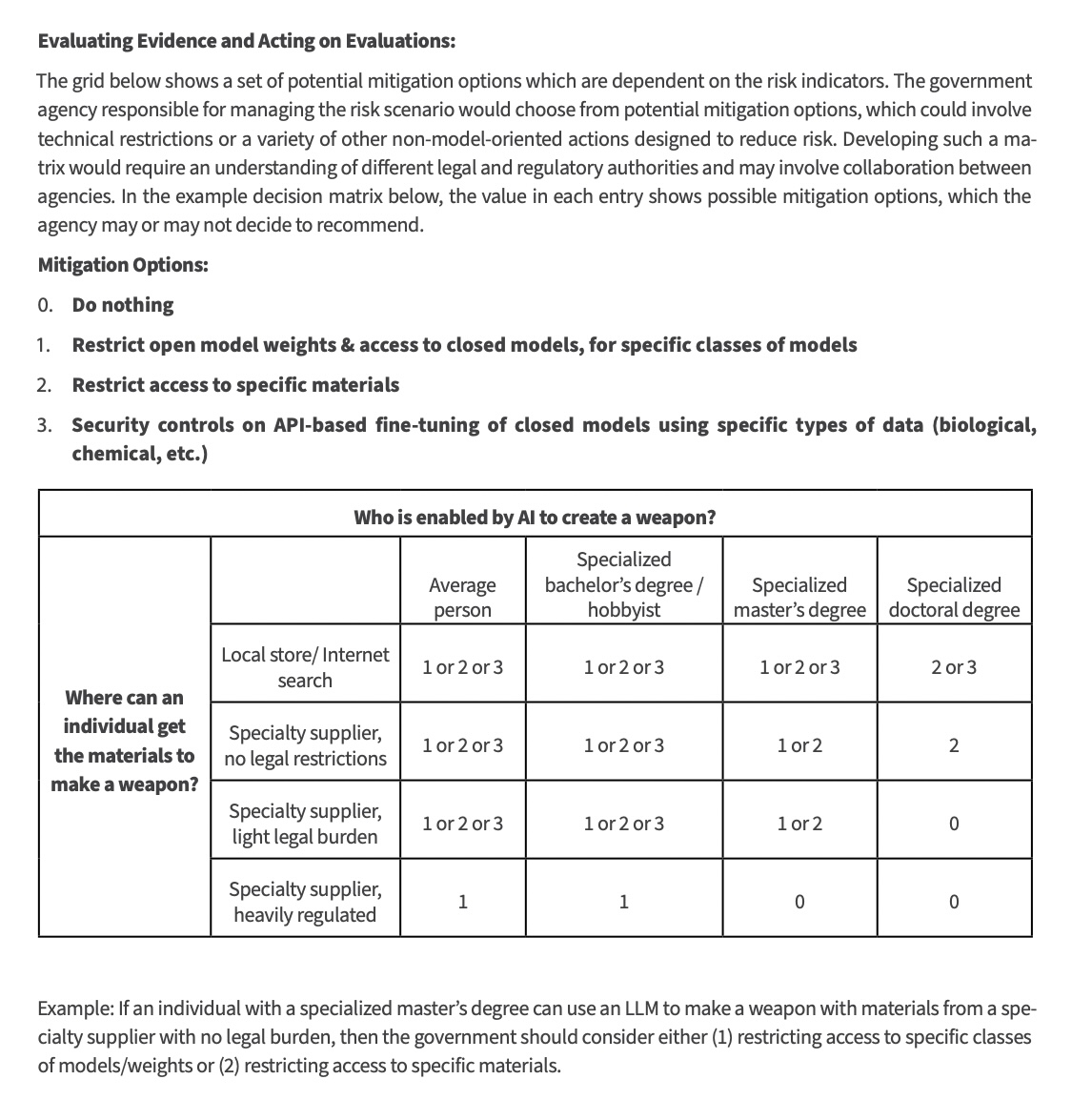

This issue took on new relevance recently following important announcements from government and industry. First, on July 30, the Commerce Department issued a major report on such models, as required by the AI executive order that President Joe Biden signed in October 2023. The final report is largely welcoming of open-weights AI systems and “outlines a cautious yet optimistic path” for them. It concludes that “there is not sufficient evidence on the marginal risks of dual-use foundation models with widely available model weights to conclude that restrictions on model weights are currently appropriate, nor that restrictions will never be appropriate in the future.”


This report comes on the heels of a Federal Trade Commission statement on open-weights models saying that they “have the potential to drive innovation, reduce costs, increase consumer choice, and generally benefit the public.” These positive statements from the Biden administration have also been echoed by J.D. Vance, the Republican vice-presidential nominee, who has voiced support for open-source AI as a means of countering Big Tech. This suggests that support for open-source AI is bipartisan.

The other major development was Meta’s recent release of Llama 3.1, the latest and most powerful version yet of its frontier AI model. Mark Zuckerberg, Meta’s founder and CEO, announced the release with an essay on how “Open Source AI Is the Path Forward.” While Llama is not a fully open-source system (Meta still controls the underlying source code), developers can build additional applications on top of the model. This facilitates more innovation and competition in AI.

But even if open-source AI developers seem to be getting a green light from federal officials, state and international regulators could still limit their potential.

It’s not clear that large-scale open-weights AI models will even be legal under Europe’s top-down and highly restrictive approach to tech regulation. Meta has already announced that it will not release its next multimodal AI model in the European Union “due to the unpredictable nature of the European regulatory environment.” This follows a similar late June announcement by Apple that it will not be rolling out state-of-the-art AI features in Europe “due to the regulatory uncertainties” associated with EU tech regulations.

This is why America must avoid the sort of rushed regulatory morass the EU has created for AI innovators. European over-regulation has already decimated digital investment and business formation, leaving the continent largely devoid of any major tech players that compete globally.

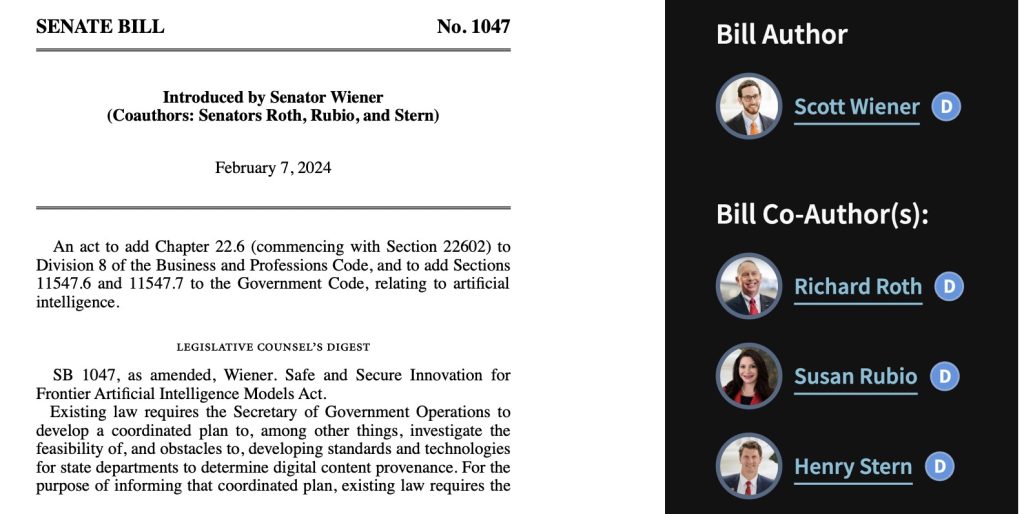

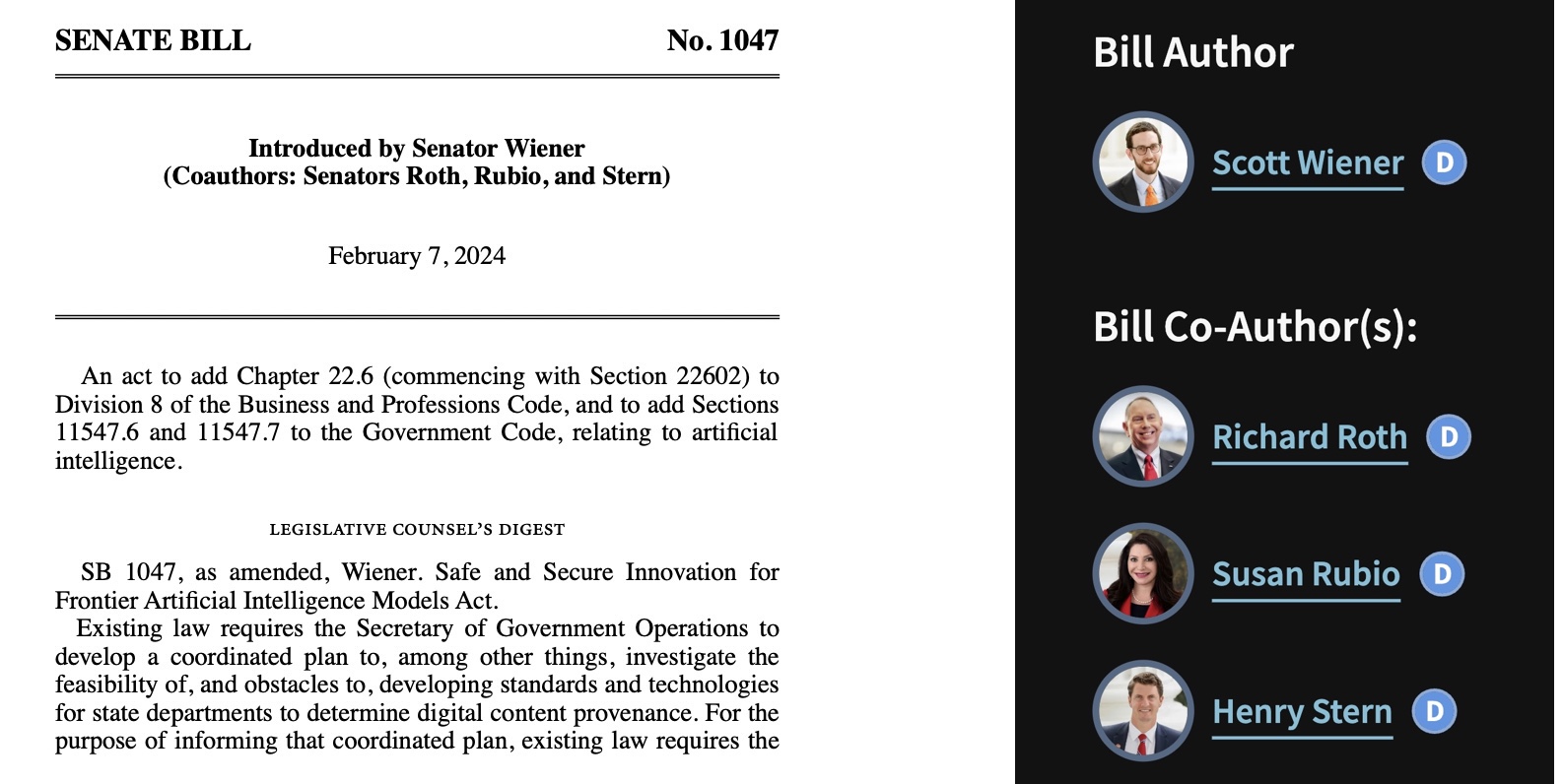

Unfortunately, such innovation-crushing mandates might be on the way at the state level in the United States. Democratic legislators in California have proposed SB 1047, which would create a “Frontier Model Division” to enforce a variety of new AI regulations and penalties. The bill would require cutting-edge developers to provide “reasonable assurance” that “model derivatives do not pose an unreasonable risk of causing or enabling a critical harm.” That is an impossible standard for open-source providers to meet, prompting technology experts to warn that the bill will “seriously limit open-weight AI models” and “will stifle open-source AI and decrease safety.”

That may seem counterintuitive because proponents of the California bill describe it as an AI safety measure. However, if the bill blocks state-of-the-art AI research and undermines innovation, it would also limit important safety-enhancing systems and applications. Worse yet, that outcome would undermine safety in a second way by acting as a gift to China, which is aggressively pushing toward its stated goal of overtaking America and becoming the world’s leader in AI by 2030.

China is rapidly closing the AI innovation gap with the U.S. Needless to say, the Chinese government will not worry about complying with whatever California bureaucrats demand. It will simply plow ahead. As Meta’s Zuckerberg correctly noted in a July post on Facebook, “constraining American innovation to closed development increases the chance that we don’t lead at all,” which is why “our best strategy is to build a robust open ecosystem.”

OpenAI’s Sam Altman made a similar point in a July editorial in which he advocated working with other democratic nations to counter authoritarian regimes like China and Russia. “Making sure open-sourced models are readily available to developers in those nations will further bolster our advantage,” Altman wrote. “The challenge of who will lead on AI is not just about exporting technology, it’s about exporting the values that the technology upholds.”

Indeed, a strong and diverse AI technology base not only strengthens our economy and provides better applications and jobs, but also bolsters our national security and allows our values of pluralism, personal liberty, individual rights, and free speech to shape global information technology markets. Open-source AI will play a crucial role in achieving that objective, so long as policymakers let it.

Adam Thierer is a resident senior fellow for the Technology and Innovation Program at the R Street Institute. He previously served as a senior fellow at the Heritage Foundation and as director of telecommunications studies at the Cato Institute. He holds a master’s degree in international business management from the University of Maryland and an undergraduate degree from Indiana University Bloomington.

This article is for general information purposes and is not intended to be and should not be taken as legal or investment advice. The views, thoughts, and opinions expressed here are the author’s alone and do not necessarily reflect or represent the views and opinions of Cointelegraph.
