OpenAI backs Senate bills to advance AI safety and accessibility

OpenAI’s support for these bills highlights a broader vision for AI that balances safety, accessibility, and the potential for educational progress.

OpenAI has expressed support for three Senate bills focused on the safety and accessibility of artificial intelligence.

The three endorsed bills, the Future of AI Innovation Act, the CREATE AI Act and the NSF AI Education Act, each address a distinct aspect of AI. Together, they reflect a concerted effort to guide AI development in a way that is both responsible and inclusive.

The bills

According to a LinkedIn post by Anna Makanju, OpenAI’s Vice President of Global Affairs, the centerpiece of OpenAI’s endorsement is the Future of AI Innovation Act. This legislation aims to solidify Congressional support for the US AI Safety Institute, a body dedicated to developing best practices for the safe deployment of frontier AI systems.

The CREATE AI Act, meanwhile, would formalize an initiative to democratize access to AI research resources. Broader access is meant to foster innovation and ensure that the benefits of AI advancements are widely distributed.

In addition to supporting the development and democratization of AI, OpenAI is backing the NSF AI Education Act. This bill focuses on strengthening the AI workforce and enhancing educational opportunities related to AI tools.

Push for AI regulation

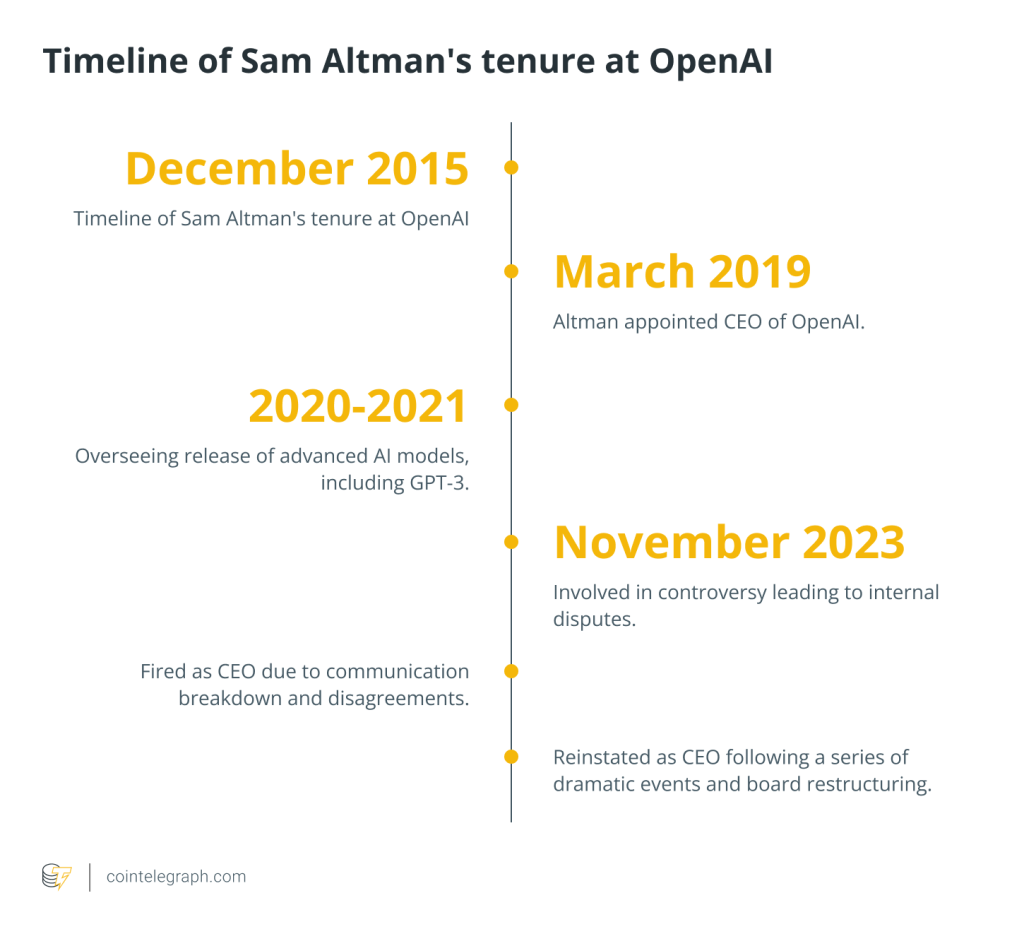

OpenAI’s support for the legislation follows a letter to OpenAI CEO Sam Altman from a group of Senate Democrats and one independent lawmaker, seeking clarification on the company’s safety protocols and its treatment of whistleblowers.


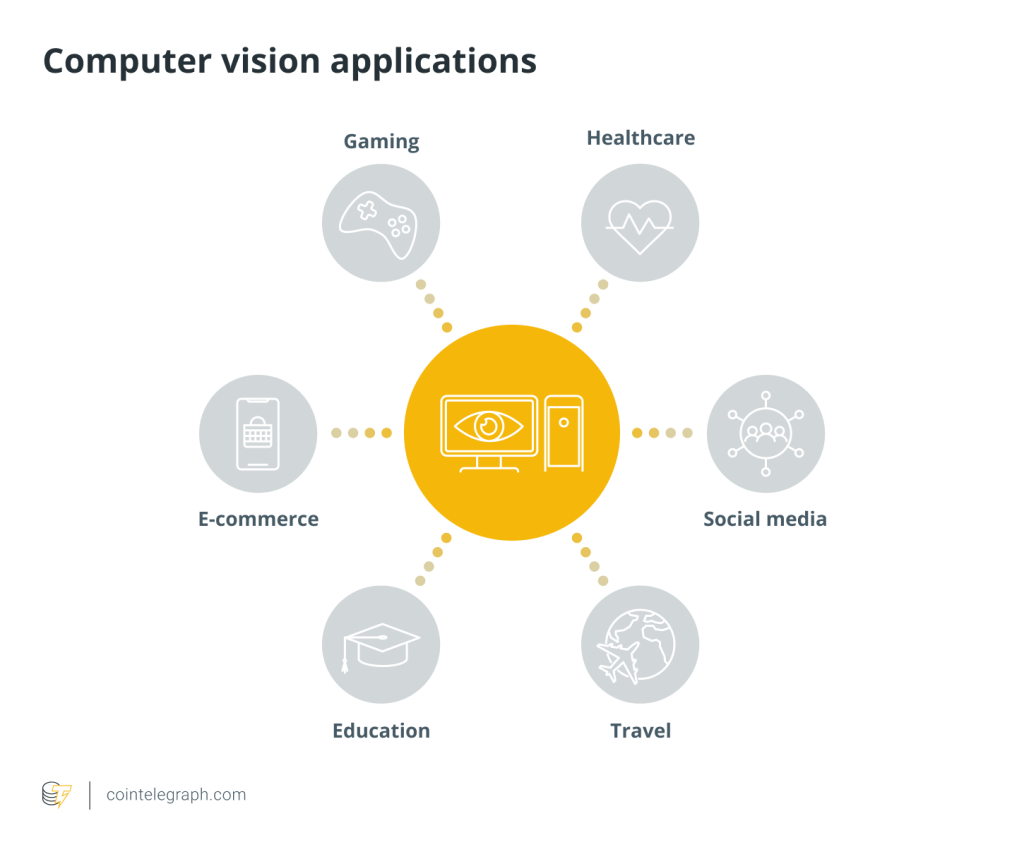

As artificial intelligence continues to evolve, policymakers worldwide are increasingly exploring the need for regulatory frameworks to govern its development and deployment.

UK officials recently warned that AI development needs robust oversight, arguing that it should be held to standards as rigorous as those applied to medicine and nuclear power.

Separately, another British official issued a stark warning, urging swift action to keep uncontrolled AI growth from becoming an existential threat within the next two years.

As the EU’s Artificial Intelligence Act nears completion, European lawmakers are taking a proactive stance on AI regulation. Regulators are now considering a measure that would require explicit labeling of content produced by AI systems.
