Only Congress and DARPA can rein in the dangers of AI

AI is stretching the boundaries of existing internet protocols. A working group between DARPA, NIST and other federal agencies could help.

Some people claim that the United States federal government “invented the internet.” While that’s not quite true, many of the fundamental protocols that define the internet, including HTTP, TCP/IP, SMTP, and DNS, were the product of deep collaboration between government and academic researchers. Part of their power lies in their openness, which facilitated broad interoperability and gave everyone, from individuals to large corporations, a level playing field for communicating online. It’s also partly why no one made billions off the introduction of HTTP: the fact that no one owned these protocols is what made them such a powerful tool for global communication and commerce.

That leads us to the next “epoch-defining” technology: AI. Revolutionary though it is, generative AI comes to us via these same protocols. In part, that’s a testament to their scalability and forward-thinking design. Yet as AI capabilities advance, they seem likely to stretch the bounds of our existing internet protocols.

To fully harness the power of AI — and to maintain some semblance of order during a tumultuous technological revolution — we’ll need new protocols. And just like with the internet, the U.S. federal government, working in collaboration with academia and the private sector, is well-positioned to bring these into existence.

While new protocols will be built on a wide variety of technologies, innovations pioneered by the crypto industry, such as zero-knowledge proofs, cryptocurrencies, smart contracts, and blockchains, could all play a crucial role.

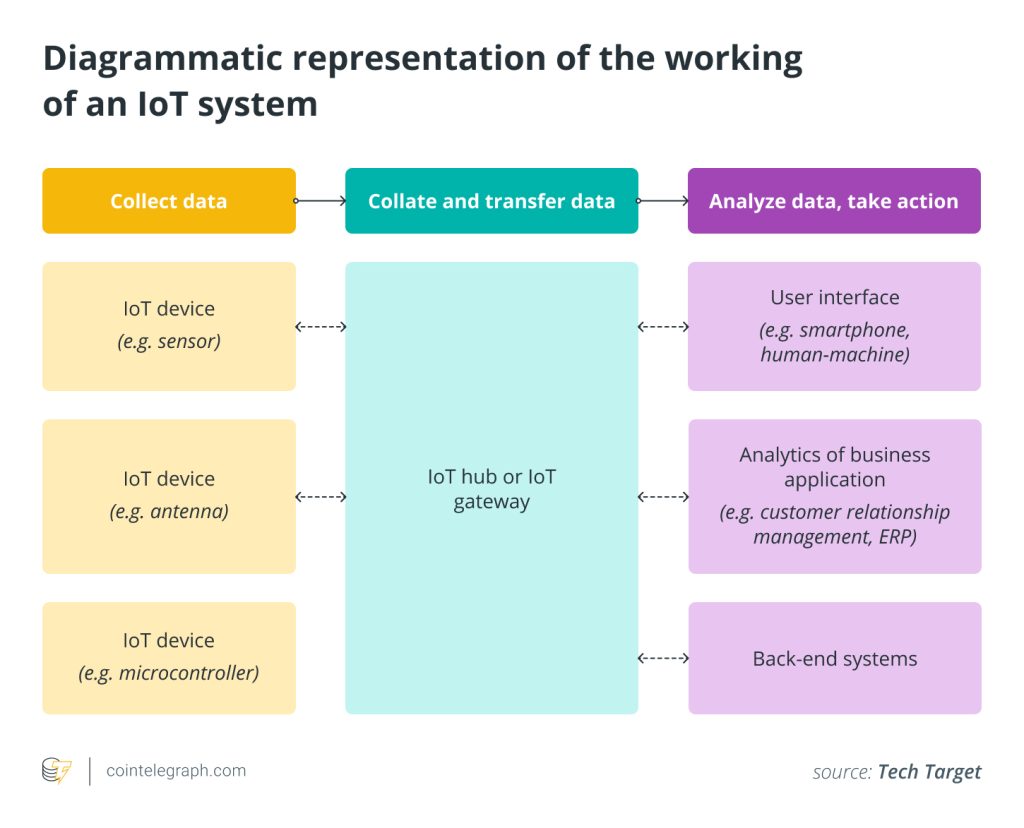

Currently, AI capabilities do not, by and large, require us to reimagine the architecture of the internet, but it’s not difficult to see how that might change. One of the next major milestones, according to AI leaders like OpenAI and DeepMind, will be agents: AI systems that can take action on behalf of a user.

For a long time, we’ve been working towards a universal AI agent that can be truly helpful in everyday life. Today at #GoogleIO we showed off our latest progress towards this: Project Astra. Here’s a video of our prototype, captured in real time. pic.twitter.com/TSGDJZVslg

— Demis Hassabis (@demishassabis) May 14, 2024

Say I want to hire a plumber. Today, I would use a search engine to find plumbers in my area and reach out to whichever ones appear at the top of the results. In the near future, however, I might ask my AI agent to query all the plumbers in my area, request a quote for my job from each of them, and give the job to the lowest bidder.

Because an AI agent could easily contact, say, 50 plumbers in the time it takes a human to contact just one, a side effect may be that plumbers have many more inquiries to field. This may in turn require them to get their own AI agents to negotiate with new potential customers. Thus, quite quickly, something that seems bizarre today could become commonplace: AI-to-AI communication. And, of course, it would not be limited to plumbers; agents could well enter any sphere of economic activity.

Such communication presents challenges. First, given the unpredictability and unreliability of AI systems — which could persist even as capabilities advance — do we want AI agents to communicate using the same protocols humans use, such as email, WhatsApp, SMS, or iMessage? Or might we want a protocol purpose-built for the task?

Imagine a protocol that could robustly tie an agent to the person who dispatched it, helping to combat AI-assisted fraud. Or imagine a communication protocol whose records were indelibly preserved, so that it’s possible to resolve ambiguities — say, a dispute with my plumber over the scope of work.
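
To make those two ideas concrete, here is a minimal Python sketch under loudly stated assumptions. None of it is an existing standard or a proposal from any agency: the function names, the message fields (`owner`, `agent`, `body`), and the `HashChainLog` class are all hypothetical. A shared-secret HMAC stands in for the public-key signatures a real protocol would more plausibly use, and an in-memory hash chain stands in for a blockchain or other durable ledger. The sketch only shows the shape of the idea: each message is cryptographically bound to the person who dispatched the agent, and each log entry commits to the one before it, so later edits to the record become detectable.

```python
import hashlib
import hmac
import json


def _encode(payload: dict) -> bytes:
    """Serialize a payload deterministically so hashes and tags are stable."""
    return json.dumps(payload, sort_keys=True).encode()


def sign_message(owner_key: bytes, owner_id: str, agent_id: str, body: str) -> dict:
    """Bind a message to the (hypothetical) owner who dispatched the agent."""
    payload = {"owner": owner_id, "agent": agent_id, "body": body}
    tag = hmac.new(owner_key, _encode(payload), hashlib.sha256).hexdigest()
    return {**payload, "tag": tag}


def verify_message(owner_key: bytes, message: dict) -> bool:
    """Check that the message was tagged with the owner's key and not altered."""
    payload = {k: message[k] for k in ("owner", "agent", "body")}
    expected = hmac.new(owner_key, _encode(payload), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


class HashChainLog:
    """Append-only record in which each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder hash that precedes the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, message: dict) -> str:
        return hashlib.sha256(prev.encode() + _encode(message)).hexdigest()

    def append(self, message: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, message)
        self.entries.append({"prev": prev, "message": message, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if self._digest(prev, entry["message"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

In the plumber dispute, both sides could recompute the chain over the logged messages. If anyone had quietly rewritten the agreed scope of work after the fact, `verify()` would return `False`, and `verify_message` would reject any message not tagged with the dispatching person's key.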

A bit further into the future, one can imagine agents doing more than communicating. Perhaps they will also one day make financial transactions with one another on behalf of human users. Are we going to give these agents access to our bank accounts and credit cards? Are we going to change our laws so that agents can have their own bank accounts and credit cards? No matter how advanced the technology, this seems unwise.

Instead, just like with communication, new protocols purpose-built for agent-to-agent financial transactions would seem a more secure and reliable path. Beyond the protocols themselves, allowing these agents to transact in cryptocurrencies, such as dollar-backed stablecoins, would be a logical and user-friendly way to avoid many of the complications of the traditional financial system.

These are just a few examples among many. The point is that rather than forcing AI agents into our current, built-for-humans digital infrastructure, it would be much more logical and safer to create new protocols specifically for AI. This will allow us to take advantage of the unique new capabilities enabled by AI while mitigating some of the foreseeable risks.

Doing this will require an urgent and wide-ranging effort by the federal government, the AI and crypto industries, and academic researchers. To kickstart this, Congress should work with the next presidential administration to create a working group composed of representatives from the private sector and government agencies like the National Institute of Standards and Technology (NIST), the Defense Advanced Research Projects Agency (DARPA), and others.

It’s fortunate that AI is rising just as many of our political leaders seem to be reconsidering their stances toward crypto and blockchain technologies. These innovations could play an essential role in making the AI transformation go well. But AI progresses quickly, which means our societal adaptations must do so as well. The time to act is now.

Dean W. Ball is a research fellow with the Mercatus Center at George Mason University. He was previously senior program manager for the Hoover Institution’s State and Local Governance Initiative, and was the executive director of the Calvin Coolidge Presidential Foundation. He serves on the board of directors of the Alexander Hamilton Institute and on the advisory council of the Krach Institute for Tech Diplomacy at Purdue University. He graduated magna cum laude from Hamilton College in 2014.

This article is for general information purposes and is not intended to be and should not be taken as legal or investment advice. The views, thoughts, and opinions expressed here are the author’s alone and do not necessarily reflect or represent the views and opinions of Cointelegraph.
