An overview of graph neural networks (GNNs), types and applications

Graph neural networks (GNNs) have emerged as a powerful framework for analyzing and learning from structured data represented as graphs. Unlike conventional neural networks, which are designed for grid-like input such as images, GNNs operate directly on graphs and capture the dependencies and relationships between connected nodes. Their adaptability and potential have made GNNs an important area of research, and they show significant promise for advancing graph-based learning and analysis.

What are graph neural networks?

Graph neural networks are a type of neural network that is designed to process graph-structured data. Graphs are mathematical constructs that are used to represent objects and their relationships, where nodes represent objects, and edges represent relationships between them.
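A common way to store such a graph in code is an adjacency list. An adjacency list maps each node to the set of nodes it shares an edge with. The minimal Python sketch below assumes a small, hypothetical undirected friendship graph. The node names and the `build_adjacency` helper are illustrative only.

```python
from collections import defaultdict

# Hypothetical toy graph: four people (nodes) and friendships (undirected edges).
edges = [("alice", "bob"), ("bob", "carol"), ("carol", "alice"), ("carol", "dave")]

def build_adjacency(edge_list):
    """Map each node to the set of nodes it shares an edge with."""
    adj = defaultdict(set)
    for u, v in edge_list:
        adj[u].add(v)  # undirected: record the edge in both directions
        adj[v].add(u)
    return dict(adj)

graph = build_adjacency(edges)
print(sorted(graph["carol"]))  # ['alice', 'bob', 'dave']
print(len(graph))              # 4
```

A GNN repeatedly looks up `graph[node]` to find whose information each node should receive. For large graphs, libraries usually store the same information in more compact array formats.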

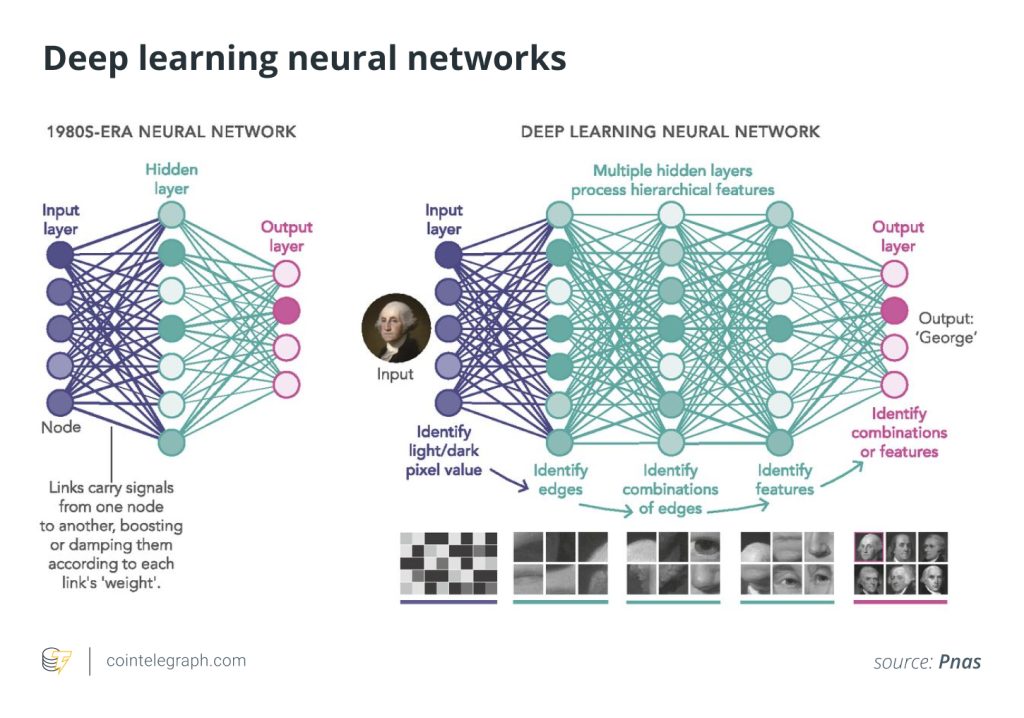

Graphs are used to model complex systems, and GNNs provide a way to analyze them and make predictions based on the structure of the graph. GNNs are deep-learning models, typically built from several stacked layers, that operate directly on the graph.

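Many GNNs use a series of graph convolutional layers to process the graph data. These layers are analogous to the convolutional layers used in traditional convolutional neural networks (CNNs), with one key difference. A CNN slides a fixed-size filter over a regular grid of pixels. A graph convolutional layer instead combines information from each node's neighbors, and the number of neighbors can vary from node to node.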

In GNNs, each layer aggregates information from neighboring nodes and edges using a message-passing scheme. A single layer captures local patterns. Stacking several layers lets information travel further across the graph, so the model can also capture broader structure and make predictions about individual nodes, about edges or about the graph as a whole.
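The message-passing scheme is often written in the following general form. The notation is an assumption and is not taken from this article. Here $h_v^{(k)}$ is node $v$'s representation after layer $k$, with $h_v^{(0)}$ its input features. $\mathcal{N}(v)$ is the set of $v$'s neighbors, $e_{uv}$ holds optional edge features, and MSG, AGG and UPD are the message, aggregation and update functions.

```latex
\begin{aligned}
m_{u \to v}^{(k)} &= \mathrm{MSG}^{(k)}\!\left(h_u^{(k-1)},\, h_v^{(k-1)},\, e_{uv}\right), \quad u \in \mathcal{N}(v) \\
a_v^{(k)} &= \mathrm{AGG}^{(k)}\!\left(\left\{ m_{u \to v}^{(k)} : u \in \mathcal{N}(v) \right\}\right) \\
h_v^{(k)} &= \mathrm{UPD}^{(k)}\!\left(h_v^{(k-1)},\, a_v^{(k)}\right)
\end{aligned}
```

Each layer only looks one hop away. After $K$ layers, $h_v^{(K)}$ can therefore depend on every node within $K$ hops of $v$.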

GNNs are capable of learning node-level and graph-level representations that are useful for a wide range of tasks. For example, GNNs can be used for node classification tasks, in which the goal is to assign a label to each node in the graph based on its features and the features of its neighbors.

GNNs can also be used for graph classification tasks, in which the goal is to predict the properties of the entire graph based on its structure and features. Additionally, GNNs can be used for link prediction tasks, in which the goal is to predict whether two nodes in the graph are connected by an edge.
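For link prediction, one simple and widely used way to score a candidate edge works on node embeddings produced by a GNN. It takes the dot product of the two nodes' embeddings and passes it through a sigmoid to obtain a probability. In the sketch below, the embedding values are hypothetical placeholders for a trained model's output.

```python
import math

def link_probability(z_u, z_v):
    """Score a candidate edge (u, v) as sigmoid(z_u . z_v)."""
    dot = sum(a * b for a, b in zip(z_u, z_v))
    return 1.0 / (1.0 + math.exp(-dot))

# Hypothetical embeddings, standing in for the output of a trained GNN.
embeddings = {
    "alice": [0.9, 0.1],
    "bob": [0.8, 0.2],
    "dave": [-0.7, 0.5],
}

p_ab = link_probability(embeddings["alice"], embeddings["bob"])
p_ad = link_probability(embeddings["alice"], embeddings["dave"])
print(round(p_ab, 3), round(p_ad, 3))
```

Nodes with similar embeddings get a probability above 0.5 and dissimilar ones a probability below it. During training, the GNN learns embeddings that make these scores match which edges actually exist.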

The architecture of graph neural networks

GNNs are designed to work with graph-structured data, which consists of nodes representing entities and edges representing relationships between those entities. Each node in the graph has a feature vector representing its attributes. These features can be of different types, such as categorical or continuous. Edges between nodes can also have associated features, capturing more nuanced information about the relationships between nodes.
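The sketch below illustrates one common way to turn mixed node attributes into a numeric feature vector. It one-hot encodes the categorical field and appends the continuous fields unchanged. It also stores edge features keyed by the (sender, receiver) pair. The attribute names, the category vocabulary and the edge-feature meaning are all hypothetical.

```python
# Hypothetical node attributes: one categorical field ("type") and two
# continuous fields. The category vocabulary below is an assumption.
NODE_TYPES = ["user", "product", "store"]

def one_hot(value, vocabulary):
    return [1.0 if value == item else 0.0 for item in vocabulary]

def node_feature_vector(attrs):
    """Concatenate a one-hot categorical field with the continuous fields."""
    return one_hot(attrs["type"], NODE_TYPES) + [attrs["age_days"], attrs["score"]]

nodes = {
    0: {"type": "user", "age_days": 120.0, "score": 0.4},
    1: {"type": "product", "age_days": 30.0, "score": 0.9},
}
# Edge features live alongside the edge, keyed by (sender, receiver).
edge_features = {(0, 1): [1.0, 5.0]}  # hypothetical meaning: [purchased, rating]

X = {n: node_feature_vector(a) for n, a in nodes.items()}
print(X[1])  # [0.0, 1.0, 0.0, 30.0, 0.9]
```

In practice, continuous features such as `age_days` are usually normalized, for example to zero mean and unit variance, before training. That step is omitted here for brevity.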

The core idea of GNNs is to iteratively update the node representations by passing and aggregating messages from neighboring nodes. This process, called message passing, involves computing a message vector for each neighboring node using a message function.

The message function takes the features of the sender node, receiver node and edge (if present) as input and produces a message vector. Simple element-wise operations or more complex functions like neural networks can be used for this purpose.
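The two kinds of message function mentioned above can be sketched as follows. The first is a simple element-wise operation: it scales the sender's features by an edge weight. The second applies a weight matrix to the concatenated sender, receiver and edge features, which is a single linear layer. The function names and the example weights are hypothetical; in a real GNN the weights are learned.

```python
def message_elementwise(h_sender, h_receiver, edge_weight=1.0):
    """Simple option: scale the sender's features by a scalar edge weight.
    h_receiver is unused here; it is kept so the inputs match the description."""
    return [edge_weight * x for x in h_sender]

def message_linear(h_sender, h_receiver, e_uv, W):
    """Learned-style option: multiply the concatenation [sender; receiver; edge]
    by a weight matrix W (a list of rows). In a real GNN, W is trained."""
    inputs = h_sender + h_receiver + e_uv
    if any(len(row) != len(inputs) for row in W):
        raise ValueError("each row of W must match the concatenated input length")
    return [sum(w * x for w, x in zip(row, inputs)) for row in W]

# Hypothetical values: 2-dim node features, 1-dim edge feature, 2 x 5 weights.
W = [[1.0, 0.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0, 2.0]]
print(message_elementwise([1.0, 2.0], [0.0, 1.0], edge_weight=0.5))  # [0.5, 1.0]
print(message_linear([1.0, 2.0], [0.0, 1.0], [0.5], W))               # [1.0, 3.0]
```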

Once messages for all neighbors have been computed, an aggregation function is used to combine them into a single vector. Common aggregation functions include summation, average and max-pooling. Attention mechanisms can also be employed to weigh the importance of different neighbors’ messages.
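The aggregation functions named above can be written directly over a list of equal-length message vectors. The attention variant below is a simplified sketch. It converts per-neighbor scores into weights with a softmax, but it takes the scores as given. Attention-based GNNs would instead compute those scores with a learned function.

```python
import math

def aggregate_sum(messages):
    return [sum(col) for col in zip(*messages)]

def aggregate_mean(messages):
    return [sum(col) / len(messages) for col in zip(*messages)]

def aggregate_max(messages):
    return [max(col) for col in zip(*messages)]

def aggregate_attention(messages, scores):
    """Weight each message by softmax(scores) and sum the results.
    The scores are supplied directly here rather than learned."""
    m = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * x for w, x in zip(weights, col)) for col in zip(*messages)]

msgs = [[1.0, 4.0], [3.0, 0.0], [2.0, 2.0]]  # hypothetical messages from 3 neighbors
print(aggregate_sum(msgs))   # [6.0, 6.0]
print(aggregate_mean(msgs))  # [2.0, 2.0]
print(aggregate_max(msgs))   # [3.0, 4.0]
```

With equal scores, the attention variant reduces to the mean. This sketch assumes every node has at least one neighbor; isolated nodes need special handling, such as a zero vector.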

The update function combines the current node’s features with the aggregated messages from its neighbors, producing an updated node representation. This message-passing process is repeated over multiple layers, each adding one “hop.” After k layers, a node’s representation reflects information from nodes up to k hops away, which lets the model capture higher-order relationships within the graph.
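To see how stacking layers widens each node's view, the sketch below runs a few rounds of message passing on a hypothetical four-node path graph. It uses one scalar feature per node, mean aggregation and a simple, hypothetical update rule that blends a node's own value with its neighbors' mean. Real GNNs typically use a learned transformation followed by a nonlinearity.

```python
# Hypothetical path graph 0 - 1 - 2 - 3, stored as an adjacency list.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

def update(h_self, aggregated, alpha=0.5):
    """Hypothetical update: blend the node's own value with the neighbor mean."""
    return alpha * h_self + (1 - alpha) * aggregated

def message_passing_layer(h):
    new_h = {}
    for v, nbrs in neighbors.items():
        agg = sum(h[u] for u in nbrs) / len(nbrs)  # mean aggregation
        new_h[v] = update(h[v], agg)
    return new_h

# Put a signal only on node 3 and watch how far it spreads, one hop per layer.
h = {0: 0.0, 1: 0.0, 2: 0.0, 3: 1.0}
for layer in range(1, 4):
    h = message_passing_layer(h)
    reached = sorted(v for v, x in h.items() if x != 0.0)
    print(layer, reached)
# 1 [2, 3]
# 2 [1, 2, 3]
# 3 [0, 1, 2, 3]
```

Node 0 is three hops from node 3, so it only receives part of the signal after the third layer.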

For graph-level tasks, such as graph classification, a readout function is required to generate a fixed-size vector representation of the entire graph. This function typically aggregates the updated node features from the final layer of message passing using operations such as summation, mean or max-pooling.
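The readout step can be sketched as a function that collapses any number of node vectors into one fixed-size vector. The example graphs below are hypothetical. They have different numbers of nodes but produce graph vectors of the same length, which is what a downstream graph classifier needs.

```python
def readout(node_vectors, mode="mean"):
    """Collapse a variable number of node vectors into one fixed-size vector."""
    cols = list(zip(*node_vectors))
    if mode == "sum":
        return [sum(c) for c in cols]
    if mode == "mean":
        return [sum(c) / len(node_vectors) for c in cols]
    if mode == "max":
        return [max(c) for c in cols]
    raise ValueError(f"unknown readout mode: {mode}")

small_graph = [[1.0, 0.0], [3.0, 2.0]]                          # 2 nodes
large_graph = [[1.0, 1.0], [2.0, 0.0], [0.0, 5.0], [1.0, 2.0]]  # 4 nodes
print(readout(small_graph))  # [2.0, 1.0]
print(readout(large_graph))  # [1.0, 2.0]
```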

GNNs are trained using gradient-based optimization methods, such as stochastic gradient descent (SGD), or variants like Adam. SGD adjusts the model’s parameters step by step in the direction that reduces the loss, estimating that direction from small random samples (mini-batches) of the training data. Adam builds on this idea by keeping running averages of past gradients and of their squares, which it uses to adapt the step size for each parameter. In practice, this often makes training faster and more stable.
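The two update rules can be compared on a toy problem. This sketch minimizes the hypothetical one-parameter loss f(w) = (w - 3)^2 in plain Python. Because this loss is deterministic, the mini-batch sampling that makes SGD "stochastic" is left out, so the first function is ordinary gradient descent. The Adam hyperparameters are its commonly used defaults, with a learning rate chosen for this toy problem.

```python
import math

def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, which is minimized at w = 3."""
    return 2.0 * (w - 3.0)

def sgd(w, lr=0.1, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)  # step against the gradient
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter adaptive step
    return w

print(round(sgd(0.0), 4), round(adam(0.0), 3))
```

In a GNN the same updates are applied to every weight in the message, aggregation and update functions, using gradients computed by backpropagation.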

During the training process, the model’s parameters are updated to minimize a loss function that quantifies the difference between the model’s predictions and the ground truth labels. Once trained, the GNN can be used for inference, making predictions for tasks such as node classification, link prediction or graph classification.
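For node classification, a typical choice of loss is the mean cross-entropy over the labeled nodes. This minimal sketch takes hypothetical per-node logits, meaning unnormalized class scores such as a GNN's final layer might output. It skips nodes without a label, which is also how semi-supervised training on partially labeled graphs is commonly set up.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def node_classification_loss(logits_per_node, labels):
    """Mean cross-entropy over the nodes that have a ground-truth label.
    `labels` maps node id -> class index; unlabeled nodes are skipped."""
    losses = []
    for node, y in labels.items():
        probs = softmax(logits_per_node[node])
        losses.append(-math.log(probs[y]))
    return sum(losses) / len(losses)

# Hypothetical GNN outputs (logits for 2 classes) for 3 nodes; only 2 are labeled.
logits = {0: [2.0, 0.0], 1: [0.0, 0.0], 2: [-1.0, 3.0]}
labels = {0: 0, 2: 1}
print(round(node_classification_loss(logits, labels), 4))
```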

Graph neural networks vs. graph convolutional networks

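Graph neural networks and graph convolutional networks (GCNs) are both deep learning methods for analyzing graph-structured data, but they are not competing alternatives: GCNs are one specific family of GNNs. A GCN layer updates each node by taking a degree-normalized sum of its own features and its neighbors’ features, then applying a shared weight matrix and a nonlinearity. "GNN" is the broader term. It also covers designs such as attention-based and general message-passing models, which differ in how they weight and combine neighbors and can therefore be better suited to different tasks.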
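The widely used GCN layer can be written as H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). Here A is the adjacency matrix, I adds a self-loop to every node, D is the resulting degree matrix, H holds the node features and W is a learned weight matrix. Below is a plain-Python sketch of that rule. Real implementations use sparse matrix operations, and the example weights here are hypothetical rather than trained.

```python
import math

def gcn_layer(adj, H, W):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W).
    adj: n x n 0/1 adjacency matrix, H: n x f features, W: f x f' weights."""
    n = len(adj)
    # Add self-loops so each node keeps some of its own features.
    A_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    # Symmetric normalization: entry (i, j) is divided by sqrt(deg_i * deg_j).
    norm = [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Aggregate normalized neighbor features, then apply the shared weights.
    agg = [[sum(norm[i][k] * H[k][c] for k in range(n)) for c in range(len(H[0]))]
           for i in range(n)]
    out = [[sum(agg[i][c] * W[c][d] for c in range(len(W))) for d in range(len(W[0]))]
           for i in range(n)]
    return [[max(0.0, x) for x in row] for row in out]  # ReLU nonlinearity

# Hypothetical 3-node path graph 0 - 1 - 2 with one feature per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
H = [[1.0], [2.0], [3.0]]
W = [[1.0, -1.0]]  # maps 1 input feature to 2 output features
print(gcn_layer(adj, H, W))
```

Other GNN layers keep the same neighbor-aggregation structure but change the weighting. For example, attention-based layers learn the neighbor weights instead of fixing them by node degree.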

Advantages of GNNs

Graph neural networks (GNNs) offer several advantages compared to traditional machine learning methods, as explained below:

- Scalability is a significant advantage of GNNs. Combined with techniques such as neighbor sampling and mini-batch training, they can handle large graphs efficiently, which makes them practical for real-world applications involving large data sets.

- GNNs perform well in semi-supervised and unsupervised learning. Because information propagates along edges, labels on a small subset of nodes can inform predictions for their unlabeled neighbors, which is valuable when labeled data is scarce.

- Robustness is another potential strength of GNNs. Because each node’s representation aggregates information from many neighbors, GNNs can be less sensitive to noise and small inconsistencies in the graph structure, which helps keep their performance consistent across different conditions.

- GNNs can adapt to graph structures that evolve over time, reflecting the dynamic nature of many networks. This makes them useful for analyzing changing systems and extracting insights from them.

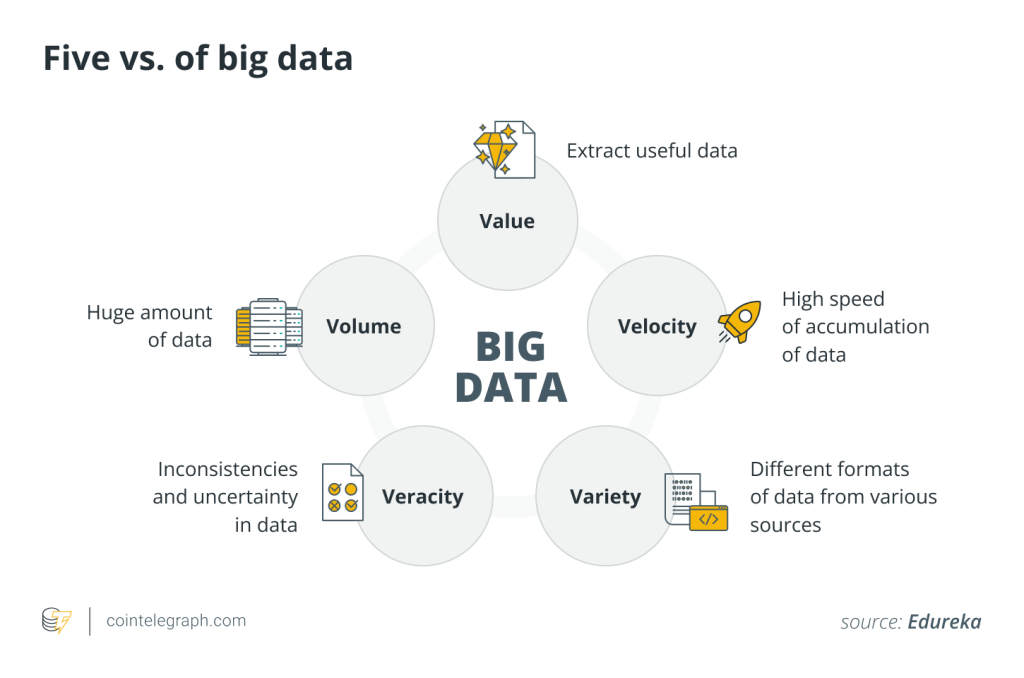

- Multimodal data processing is another useful feature of GNNs: they can combine different types of data, such as node attributes and edge weights, in a single model. This versatility helps them capture the complexity of multifaceted systems.

- Transfer learning allows GNNs to apply knowledge gained in one domain to improve performance in another: a model trained on one graph or task can be fine-tuned for a related one. This can reduce training time and improve results on the new task.

- Customizability gives researchers the flexibility to tailor GNNs to specific problem domains and requirements, for example by choosing different message, aggregation and update functions. This makes it easier to build solutions for unique challenges.

- Parallel processing speeds up GNN learning and inference, because message passing applies the same computation at every node and maps well onto parallel hardware. This makes GNNs a good fit for high-performance computing applications.

- GNNs can also be combined with other machine learning methods, including other deep learning architectures, into hybrid models that draw on the strengths of each approach and can improve performance across a range of tasks.
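Scaling GNNs to large graphs often relies on sampling. This sketch shows one such technique: neighbor sampling with a fixed "fanout" per layer, in the style popularized by GraphSAGE. The article does not name a specific method, so this is an illustrative choice. The hub-shaped graph and the fanout values are hypothetical.

```python
import random

def sample_neighborhood(adj, seeds, fanouts, rng):
    """Collect the nodes a mini-batch needs when each layer looks at no more
    than fanouts[k] randomly chosen neighbors per node."""
    frontier = set(seeds)
    needed = set(seeds)
    for fanout in fanouts:
        next_frontier = set()
        for v in frontier:
            nbrs = sorted(adj.get(v, ()))  # sort for reproducible sampling
            k = min(fanout, len(nbrs))
            next_frontier.update(rng.sample(nbrs, k))
        needed |= next_frontier
        frontier = next_frontier
    return needed

# Hypothetical "hub" graph: node 0 is connected to 1,000 other nodes.
adj = {0: set(range(1, 1001))}
for i in range(1, 1001):
    adj[i] = {0}

rng = random.Random(42)
batch_nodes = sample_neighborhood(adj, seeds=[0], fanouts=[10, 5], rng=rng)
print(len(batch_nodes))
```

A two-layer GNN computing node 0's representation without sampling would touch all 1,001 nodes. With these fanouts, the mini-batch touches only 11, and the cost per batch stays bounded however large the graph grows.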

Can graph neural networks replace convolutional neural networks in certain applications?

While graph neural networks and convolutional neural networks are both powerful tools, they are designed for different types of data and have different strengths and weaknesses. GNNs may be able to replace CNNs in some applications, but this depends on the specific task and the type of data being analyzed.

One area where GNNs have shown promise is in analyzing graph-structured data, such as social networks and biological molecules. GNNs are specifically designed to capture the complex relationships between nodes and edges in a graph, making them well-suited for tasks that involve this type of data. In contrast, CNNs are designed to operate on grid-like data structures, such as images, and are best suited for tasks like image classification, object detection and image segmentation.

In certain applications, it may be possible to use GNNs in place of CNNs. For example, GNNs have been used for image classification by treating the image as a graph and the pixels as nodes. However, this approach is still in its early stages, and more research is needed to determine how well it performs compared with traditional CNNs. If GNNs prove competitive in such settings, that could significantly influence how future machine learning models are designed.
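Treating an image as a graph can be as simple as making each pixel a node and connecting it to its adjacent pixels. The sketch below assumes 4-connectivity (left, right, up, down) and uses each pixel's intensity as its node feature. Both choices are assumptions. Published approaches also use other connectivity patterns, or group pixels into larger regions first.

```python
def image_to_grid_graph(image):
    """Turn a 2-D image (list of rows of pixel values) into a graph:
    one node per pixel, edges between 4-connected neighbors."""
    height, width = len(image), len(image[0])

    def node_id(r, c):
        return r * width + c  # row-major numbering of pixels

    features = [image[r][c] for r in range(height) for c in range(width)]
    edges = []
    for r in range(height):
        for c in range(width):
            if c + 1 < width:
                edges.append((node_id(r, c), node_id(r, c + 1)))  # right neighbor
            if r + 1 < height:
                edges.append((node_id(r, c), node_id(r + 1, c)))  # neighbor below
    return features, edges

feats, edges = image_to_grid_graph([[0, 255, 0], [255, 0, 255]])
print(len(feats), len(edges))  # 6 7
```

On this regular grid, every interior node has the same number of neighbors. That regular structure is exactly what CNNs already exploit efficiently, which is one reason GNNs offer more of an advantage on irregular graphs.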

GNNs have the potential to expand the scope of machine learning by enabling the analysis of more diverse types of data, such as social networks and biological molecules. This could lead to new breakthroughs in areas such as drug discovery and social network analysis. However, it is important to note that GNNs are not a one-size-fits-all solution and may not always be the best choice for every task.

Some examples of GNNs in various fields

Graph neural networks have shown great promise in a wide range of fields due to their ability to handle complex relationships between nodes and edges in a graph.

Social network analysis

GNNs have been used for tasks such as link prediction, community detection and node classification in social networks. For example, GNNs have been used to predict future connections between users in a social network based on their past interactions.


Drug discovery

GNNs have been used to predict the properties of small molecules and their interactions with proteins. For example, GNNs have been used to predict the binding affinity between a small molecule and a protein target, which is an important step in drug discovery.
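In molecular GNNs, atoms are typically nodes and chemical bonds are edges. The sketch below encodes ethanol (CH3-CH2-OH) this way, folding hydrogens into the heavy atoms' features. It then applies a sum readout. The tiny element vocabulary and the choice of atom features are hypothetical simplifications; practical models use richer atom and bond descriptors.

```python
ELEMENTS = ["C", "N", "O"]  # hypothetical, deliberately tiny vocabulary

def atom_features(element, num_hydrogens):
    """One-hot element type plus the count of attached hydrogens."""
    return [1.0 if element == e else 0.0 for e in ELEMENTS] + [float(num_hydrogens)]

# Ethanol (CH3-CH2-OH): heavy atoms are nodes, hydrogens are atom features.
atoms = [atom_features("C", 3), atom_features("C", 2), atom_features("O", 1)]
bonds = {(0, 1): "single", (1, 2): "single"}  # edge features: bond type

# Sum readout over atoms: counts of each element and total hydrogens.
summary = [sum(col) for col in zip(*atoms)]
print(summary)  # [2.0, 0.0, 1.0, 6.0]
```

A trained model would run message passing over `bonds` before the readout, so the final vector reflects how atoms are connected and not just which atoms are present.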

Recommendation systems

GNNs have been used to make personalized recommendations for products, movies and other items. For example, GNNs have been used to recommend movies to users based on their previous movie ratings and the relationships between movies in a graph.
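Recommendation data naturally forms a bipartite graph, with users on one side, items on the other, and ratings as edges. The sketch below is not a GNN. It is a fixed, non-learned walk (user, liked movie, other users who liked it, their liked movies) over the same graph structure that GNN-based recommenders learn from. The ratings and the 4-star threshold are hypothetical.

```python
from collections import Counter

# Hypothetical ratings: (user, movie, stars). Each pair is an edge in a
# bipartite user-movie graph; the star rating is an edge feature.
ratings = [
    ("ana", "Alien", 5), ("ana", "Heat", 4),
    ("ben", "Alien", 5), ("ben", "Blade Runner", 5),
    ("cai", "Heat", 2), ("cai", "Up", 5),
]

def recommend(user, ratings, min_stars=4):
    """Score unseen movies by walking user -> liked movie -> other users who
    liked it -> movies they liked (a fixed 3-hop walk, nothing learned)."""
    liked = {}
    for u, m, s in ratings:
        if s >= min_stars:
            liked.setdefault(u, set()).add(m)
    seen = {m for u, m, _ in ratings if u == user}
    scores = Counter()
    for movie in liked.get(user, ()):
        for other, movies in liked.items():
            if other != user and movie in movies:
                scores.update(movies - seen)
    return scores.most_common()

print(recommend("ana", ratings))  # [('Blade Runner', 1)]
```

A GNN recommender replaces this hand-written walk with learned message passing. This lets it weigh ratings, item features and longer paths automatically.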

Natural language processing

GNNs have been used for tasks such as text classification and sentence similarity. For example, GNNs have been used to classify documents based on their topics and to identify similar sentences in a large corpus of text.
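To apply a GNN to text, the text must first be turned into a graph. One common construction is a word co-occurrence graph. Words are nodes, and two words are connected when they appear within a small window of each other. This sketch builds such a graph with co-occurrence counts as edge weights. The window size and the sample sentence are hypothetical, and other constructions, such as graphs based on syntactic dependencies, are also used.

```python
from collections import Counter

def cooccurrence_graph(tokens, window=2):
    """Connect words that appear within `window` positions of each other.
    Edge weights count how often each (unordered) word pair co-occurs."""
    weights = Counter()
    for i, w in enumerate(tokens):
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            if tokens[j] != w:  # skip self-loops from repeated words
                weights[tuple(sorted((w, tokens[j])))] += 1
    return weights

text = "graphs model relations and gnns learn from graphs"
g = cooccurrence_graph(text.split(), window=2)
print(len(g), g[("graphs", "model")])
```

For document classification, each document's graph (or a shared graph linking words and documents) is fed to a GNN. A readout then produces one vector per document.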

Computer vision

GNNs have been used for tasks such as object detection and image segmentation. For example, GNNs have been used to segment medical images and detect objects in satellite images.

These are just a few examples of successful applications of GNNs in various fields. As researchers continue to explore the capabilities of GNNs, we can expect to see even more applications in the future.

Going forward, GNNs are expected to play a growing role in emerging technologies such as edge computing, the Internet of Things (IoT) and research toward artificial general intelligence (AI that could understand, learn and perform any intellectual task a human can), driving innovation and helping people better understand complex, graph-structured data. As research on GNNs progresses, the range of applications is likely to keep expanding, opening new possibilities for data analysis and insight generation.

Written by Tayyub Yaqoob
