What is data tokenization, and how does it compare to data encryption?

Data tokenization, explained

Data tokenization is the process of replacing sensitive data with a token, a distinct identifier that stands in for the original value while keeping a link to it. The token can then be used across a variety of systems and procedures without disclosing the sensitive data itself.

Because a token is of little use without access to the tokenization system, the sensitive data stays protected even if the token is intercepted or accessed by unauthorized individuals. This is why tokenization is frequently employed to protect data privacy: the original data is kept secure while the token is used for authorized purposes, such as data analysis, storage or sharing.

When a token is used in a transaction or activity, it can be temporarily mapped back to the original data inside a secure environment. This prevents sensitive information from being exposed while allowing authorized systems or apps to validate and process the tokenized data.

What is the purpose of data tokenization?

Enhancing data security and privacy is the main goal of data tokenization. Sensitive data is replaced with distinct tokens to secure it from unauthorized access, lowering the risk of data breaches and minimizing the effects of any security incidents.

Data tokenization serves the following purposes:

Data protection

Tokenization prevents sensitive data, such as credit card numbers, social security numbers and personal identifiers, from being stored or transmitted in its original form. This decreases the exposure of sensitive data and lessens the chance of unauthorized access or data breaches.

Compliance

Data tokenization helps firms comply with industry standards such as the Payment Card Industry Data Security Standard (PCI DSS), which governs credit card data. Tokenizing sensitive data allows enterprises to reduce the amount of sensitive data they handle and the scope of compliance audits, making compliance management more efficient.

Reduced risk

Storing and sending sensitive data carries a lower risk thanks to tokenization. Without access to the tokenization system or database, the tokens are worthless, even if the tokenized data is intercepted or accessed by unauthorized parties.

Simplified data handling

For a variety of processes, including transaction processing, data analysis or storage, tokens can be used in place of sensitive data. As tokens may be processed and managed interchangeably with the original data without requiring decryption or disclosing sensitive information, this streamlines data handling procedures.

Data integrity

Tokenization replaces the original data with tokens while maintaining the integrity and format of the original data. Because the tokens retain certain properties of the original data, such as its length or structure, authorized systems and applications can use tokenized data smoothly.

Data tokenization techniques

The common data tokenization techniques are explained below:

Format preserving tokenization

Tokens created using the format-preserving tokenization technique retain the original data’s format and length. For example, a 16-digit credit card number is replaced by a different 16-digit number.
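As a minimal sketch of the idea, the Python snippet below replaces the digits of a card number with random digits while keeping its length, its separators and, as an assumption made here for illustration, its last four digits. The function name `format_preserving_token` and the `keep_last` parameter are hypothetical. This is plain random substitution, not a standardized format-preserving encryption scheme, so a mapping store would still be needed to get back to the original value.

```python
import secrets


def format_preserving_token(card_number: str, keep_last: int = 4) -> str:
    """Return a token with the same length and layout as `card_number`.

    Every digit except the last `keep_last` digits is replaced by a random
    digit; non-digit characters such as spaces or dashes are left in place.
    """
    digit_positions = [i for i, ch in enumerate(card_number) if ch.isdigit()]
    # Positions of the trailing digits that stay visible (none if keep_last is 0).
    protected = set(digit_positions[-keep_last:]) if keep_last > 0 else set()

    out = []
    for i, ch in enumerate(card_number):
        if ch.isdigit() and i not in protected:
            out.append(str(secrets.randbelow(10)))  # cryptographically strong random digit
        else:
            out.append(ch)
    return "".join(out)


token = format_preserving_token("4111 1111 1111 1111")
print(token)  # same layout as the input, ending in 1111
```

Because the token has the same shape as a card number, systems that validate field length or layout can usually store it without schema changes.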

Secure hash tokenization

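In this method, tokens are created using a secure one-way hash function, such as SHA-256. The hash function transforms the original data into a fixed-length string, and because the function is one-way, recovering the original value by inverting the hash is computationally infeasible. Values drawn from a small set of possibilities, such as card numbers, can still be guessed by hashing candidate values, so a secret key or salt is commonly added.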
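The sketch below shows one way hash-based tokens could be produced with Python's standard library. It uses a keyed hash (HMAC with SHA-256) rather than a bare SHA-256 digest, which is a choice made for this example: without a secret key, anyone could hash likely inputs and compare the results. The function name `hash_token` and the demo key are assumptions.

```python
import hashlib
import hmac


def hash_token(value: str, secret_key: bytes) -> str:
    """Derive a fixed-length, one-way token from `value` using HMAC-SHA-256."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Demo key only; a real key would come from a key management system.
demo_key = b"demo-key-not-for-production"

token_a = hash_token("123-45-6789", demo_key)
token_b = hash_token("123-45-6789", demo_key)
print(token_a == token_b)  # the same input and key always give the same token
print(len(token_a))        # 64 hex characters, regardless of input length
```

Since the same input always yields the same token, hashed tokens can be used to join or deduplicate records, but they cannot be mapped back to the original value by the tokenization system itself.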

Randomized tokenization

Using random tokens that have no connection to the original data is known as randomized tokenization. The tokens are safely kept in a tokenization system where they can be quickly mapped back to the original data.
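A minimal in-memory sketch of randomized tokenization might look like the following. The `TokenVault` class, its method names and the `tok_` prefix are hypothetical, and a Python dictionary stands in for what would normally be a hardened, access-controlled database.

```python
import secrets


class TokenVault:
    """Maps random tokens to original values (in-memory stand-in for a secure store)."""

    def __init__(self) -> None:
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value never gets two tokens.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # collisions are extremely unlikely
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # random; unrelated to the card number
print(vault.detokenize(token))  # 4111111111111111
```

The token is drawn from a random source, so nothing about the original value can be derived from it; the only route back is the lookup in the vault.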

Split tokenization

Split tokenization separates the sensitive data into segments and tokenizes each piece independently. By distributing the data over various systems or locations, this strategy improves security.
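One possible reading of split tokenization is sketched below: the value is cut into roughly equal segments, and each segment gets its own random token stored in a separate vault. The helper names `split_tokenize` and `rejoin` are hypothetical, and plain dictionaries stand in for stores that would live on different systems or in different locations.

```python
import secrets


def split_tokenize(value: str, vaults: list) -> list:
    """Split `value` into up to len(vaults) segments and tokenize each in its own vault."""
    parts = len(vaults)
    size = -(-len(value) // parts)  # ceiling division: characters per segment
    segments = [value[i:i + size] for i in range(0, len(value), size)]
    tokens = []
    for segment, vault in zip(segments, vaults):
        token = secrets.token_hex(8)
        vault[token] = segment  # each vault only ever sees one fragment
        tokens.append(token)
    return tokens


def rejoin(tokens: list, vaults: list) -> str:
    """Look up every segment in its vault and reassemble the original value."""
    return "".join(vault[token] for token, vault in zip(tokens, vaults))


vaults = [{}, {}, {}, {}]  # stand-ins for four independent stores
tokens = split_tokenize("4111111111111111", vaults)
print(tokens)
print(rejoin(tokens, vaults))  # 4111111111111111
```

An attacker who compromises a single vault in this arrangement obtains only a fragment of the sensitive value.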

Cryptographic tokenization

This method combines tokenization and encryption. A robust encryption algorithm is used to encrypt the sensitive data, and the value of the encrypted data is then tokenized. To enable decryption when required, the encryption key is securely managed.

Detokenization

Detokenization is the reverse of tokenization: the process of recovering the original data from a token. For it to work, the token-to-data mapping must be stored and managed securely.
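As a rough illustration, detokenization can be thought of as a guarded lookup: the caller must be authorized, and the token must exist in the mapping. The `Detokenizer` class and the caller names in this sketch are hypothetical, and a real system would rely on proper authentication rather than a set of strings.

```python
class Detokenizer:
    """Resolves tokens to original values for authorized callers only."""

    def __init__(self, mapping: dict, authorized_callers: set) -> None:
        self._mapping = dict(mapping)
        self._authorized = set(authorized_callers)

    def detokenize(self, token: str, caller: str) -> str:
        if caller not in self._authorized:
            raise PermissionError(f"{caller} may not detokenize")
        if token not in self._mapping:
            raise LookupError("unknown token")
        return self._mapping[token]


detok = Detokenizer({"tok_abc": "123-45-6789"}, {"billing-service"})
print(detok.detokenize("tok_abc", "billing-service"))  # 123-45-6789
```

Keeping the authorization check next to the lookup means that holding a token is never enough, by itself, to obtain the original data.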

How data tokenization works

Data tokenization converts sensitive data into tokens while maintaining a link between each token and the original data. Here’s how data tokenization works:

Identification of sensitive data

Sensitive data pieces that need to be tokenized, such as credit card numbers, social security numbers or personal identifiers, must first be identified.

Tokenization system

A platform, or tokenization system, is created to manage the tokenization process. Secure databases, encryption keys and algorithms for creating and managing tokens are frequently included in this system.

Data mapping

To link sensitive data with its appropriate tokens, either a mapping table or database is constructed. The relationship between the original data and the tokenized data is maintained because of the secure storage of the mapping.

Token generation

When sensitive data needs to be tokenized, the tokenization system creates a unique token to replace it. Typically, the token is a randomly generated number or string of characters.

Data substitution

The created token is used to replace the sensitive data, which can be tokenized in batch procedures or in real-time during data entry.

Tokenized data storage

Tokenized data is stored safely in a tokenization database together with any related metadata or contextual information. Because the sensitive data is not stored in its original form, the tokenized data cannot be used to retrieve the original sensitive data even if it is compromised.

Tokenized data usage

Authorized systems or apps employ tokens rather than the original sensitive data when processing tokenized data. For tasks like transactions, analysis or storage, the tokens are moved through the system.

Token-to-data retrieval

If the original data linked to a token needs to be retrieved, the tokenization system looks the token up in the mapping table or database and returns the corresponding sensitive data.

To safeguard the tokens, mapping information and the tokenization infrastructure itself, the tokenization system must have strong security measures in place. These procedures allow authorized systems to work with tokenized data for legitimate purposes without disclosing the underlying sensitive information, making data tokenization a secure approach for handling sensitive data.
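The steps above can be sketched end to end in a few lines of Python. Everything named here (`SENSITIVE_FIELDS`, `TokenizationSystem`, the sample record) is a hypothetical illustration, and the in-memory dictionary stands in for a secured mapping database.

```python
import secrets

# Step 1: identify which fields count as sensitive (assumed for this example).
SENSITIVE_FIELDS = {"card_number", "ssn"}


class TokenizationSystem:
    """Step 2: a tiny stand-in for a tokenization platform."""

    def __init__(self) -> None:
        self._mapping = {}  # Step 3: token -> original value

    def _new_token(self) -> str:
        # Step 4: generate a random token with no relation to the data.
        return "tok_" + secrets.token_urlsafe(12)

    def tokenize_record(self, record: dict) -> dict:
        # Step 5: substitute tokens for the sensitive fields only.
        safe = {}
        for name, value in record.items():
            if name in SENSITIVE_FIELDS:
                token = self._new_token()
                self._mapping[token] = value
                safe[name] = token
            else:
                safe[name] = value
        return safe

    def detokenize(self, token: str) -> str:
        # Step 8: look the token up in the mapping to recover the original.
        return self._mapping[token]


system = TokenizationSystem()
record = {"name": "Alice", "card_number": "4111111111111111", "ssn": "123-45-6789"}

stored = system.tokenize_record(record)  # Steps 6 and 7: store and use this copy
print(stored)
print(system.detokenize(stored["card_number"]))  # 4111111111111111
```

Downstream systems only ever see `stored`, so a leak of that record exposes tokens rather than the card number or social security number.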

What are the benefits of data tokenization?

For enterprises looking to improve data security and privacy, data tokenization offers a number of advantages, including:

Improved data security

Sensitive data is replaced with tokens through tokenization, lowering the possibility of unwanted access and data breaches. Even if tokens are intercepted, attackers cannot use them because they are meaningless without access to the tokenization system.

Compliance with regulations

Data tokenization assists enterprises in adhering to industry standards for data protection and regulatory needs. For instance, by limiting the scope of sensitive data storage and lowering the risk involved with managing payment card information, tokenization can help with compliance with the PCI DSS.

Preserved data integrity

Tokenization keeps the original data’s structure and integrity intact. Since the tokens preserve some details of the original data, authorized systems can use tokenized data without losing the integrity or correctness of the data.

Simplified data handling

By allowing tokens to be utilized interchangeably with the original data, tokenization streamlines data handling procedures. Data operations are more effective because authorized systems and applications can handle and maintain tokens without requiring decryption or disclosing sensitive information.

Risk reduction

Organizations reduce the risk involved with keeping and transferring sensitive data by tokenizing it. The impact of a data breach is greatly reduced because tokens have no inherent value and cannot be used for unauthorized purposes.

Scalability and flexibility

Tokenization is a scalable method that may be used for a variety of sensitive data types. It can be applied across numerous platforms and programs, enabling scalability as business requirements change.

Increased client trust

By showcasing a dedication to data protection and security, tokenization increases client trust. Customers are more inclined to trust companies with their data if they are aware that their sensitive information is tokenized and protected.

Reduced compliance effort

Tokenization can ease the effort of conducting compliance audits and protecting sensitive data. The scope of compliance evaluations and audits is narrowed by minimizing the amount of sensitive data in the organization’s systems.

Data tokenization example

Consider a supply chain management system that tracks and confirms the authenticity of premium items using blockchain technology. Each luxury item in this system is given a special digital token that signifies who owns it and where it came from.

The brand, model and serial number of a luxury good are all recorded on the blockchain when it is produced or introduced into the supply chain. Following the tokenization of this data, a digital token representing the particular item is created and stored on the blockchain.

For example, in a blockchain-based supply chain system, a Rolex Submariner watch with the serial number 123456789 can be represented by a unique token, such as Token123456789. On the blockchain, this token acts as a secure representation of the item’s ownership and provenance.

The token, together with the item’s one-of-a-kind identity, is now stored on the blockchain. Each transaction or transfer of ownership as the luxury item travels through the supply chain is documented on the blockchain, ensuring transparency and immutability.

Several advantages come from the tokenization of luxury goods on the blockchain. The digital token serves as a secure, tamper-resistant representation of the tangible object, making it simple to trace the item and verify its authenticity. Additionally, by preventing unauthorized changes or tampering with the item’s data on the blockchain, the tokenization process improves security.
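To make the example more concrete, here is a simplified sketch of the record-keeping involved. It is a hash-chained, append-only log in plain Python rather than an actual blockchain, and the names `item_token` and `OwnershipLog` are hypothetical. The token here is derived from a hash of the item details, an assumption of this sketch, so it looks different from the Token123456789 example above.

```python
import hashlib

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def item_token(brand: str, model: str, serial: str) -> str:
    """Derive a token for an item from its brand, model and serial number."""
    return "Token" + _sha256(f"{brand}|{model}|{serial}")[:16]


class OwnershipLog:
    """Append-only log in which every entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries = []

    def record_transfer(self, token: str, owner: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = _sha256(f"{prev_hash}|{token}|{owner}")
        self.entries.append(
            {"token": token, "owner": owner, "prev": prev_hash, "hash": entry_hash}
        )

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev_hash = GENESIS
        for e in self.entries:
            expected = _sha256(f"{prev_hash}|{e['token']}|{e['owner']}")
            if e["prev"] != prev_hash or e["hash"] != expected:
                return False
            prev_hash = e["hash"]
        return True


log = OwnershipLog()
watch = item_token("Rolex", "Submariner", "123456789")
log.record_transfer(watch, "manufacturer")
log.record_transfer(watch, "retailer")
print(log.verify())  # True while the history is untouched
```

Changing any earlier entry, for instance rewriting a past owner, causes `verify()` to return `False`, which is the tamper-evidence property the example relies on.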

Are there any risks of data tokenization?

While data tokenization has many advantages, there are also risks and considerations that businesses should be aware of. The following are a few concerns connected with data tokenization:

Tokenization system vulnerabilities

The security of the tokenization system is essential. If the tokenization system is compromised, attackers could gain unauthorized access to the tokenized data or the mapping table, allowing them to map tokens back to the original values and obtain private data.

Dependence on tokenization infrastructure

Organizations that tokenize their data depend on the availability of the tokenization infrastructure. Operations that rely on tokenized data may be hampered by outages or other disruptions in the tokenization system.

Challenges with data conversion

Tokenization may call for changes to current programs and systems in order to support the use of tokens. The time and potential difficulties associated with adapting current data and systems to work with tokenized data must be taken into account by organizations.

Tokenization limitations

Not all types of data or use cases may be appropriate for tokenization. Implementing tokenization may be difficult if particular data elements, such as those with complicated relationships or structured data needed for specific processes, are involved.

Complexity of tokenization implementation

Creating a successful tokenization system needs careful planning and integration with current systems and procedures, which adds to its complexity. The maintenance of mapping tables, secure storage of tokens and sensitive data, and proper token production can all add complexity.

Regulatory considerations

While tokenization can help with compliance, organizations must make sure that their tokenization strategies adhere to all applicable regulations. To avoid any penalties or non-compliance issues, it is crucial to comprehend the legal and regulatory ramifications of tokenization.

Tokenization key management

Secure tokenization depends on efficient key management. Organizations are responsible for making sure that the encryption keys used in the tokenization process are generated, stored and rotated properly. The security of the tokens and the underlying sensitive data may be jeopardized by poor key management procedures.
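As one illustration of what key rotation can involve, the sketch below keeps several key versions and tags every token with the version that produced it, so older tokens can still be checked after a rotation. The `KeyRing` class is hypothetical, it uses keyed hashing (HMAC-SHA-256) as the tokenization method, and the keys live in memory purely for demonstration; in practice they would be held in a key management system or hardware security module.

```python
import hashlib
import hmac
import secrets


class KeyRing:
    """Holds versioned secret keys and produces version-tagged tokens."""

    def __init__(self) -> None:
        self._keys = {}
        self._current = 0
        self.rotate()  # create key version 1

    def rotate(self) -> int:
        """Generate a fresh random key and make it the current version."""
        self._current += 1
        self._keys[self._current] = secrets.token_bytes(32)
        return self._current

    def _digest(self, version: int, value: str) -> str:
        key = self._keys[version]
        return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

    def tokenize(self, value: str) -> str:
        return f"v{self._current}:{self._digest(self._current, value)}"

    def matches(self, value: str, token: str) -> bool:
        """Check a value against a token using the key version named in the token."""
        version_tag, digest = token.split(":", 1)
        expected = self._digest(int(version_tag[1:]), value)
        return hmac.compare_digest(expected, digest)


ring = KeyRing()
old_token = ring.tokenize("123-45-6789")
ring.rotate()
new_token = ring.tokenize("123-45-6789")
print(old_token[:3], new_token[:3])            # v1: v2:
print(ring.matches("123-45-6789", old_token))  # True: old key version still available
```

Retiring a key version then becomes an explicit decision: tokens issued under it must be reissued before the key is deleted.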

Token-to-data mapping integrity

The integrity of the token-to-data mapping table or database must be upheld in order for it to be accurate and reliable. Any mistakes or discrepancies in the mapping could lead to problems with data integrity or make it harder to get the original data when needed.
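One way to detect accidental or malicious changes to a mapping entry is to store an integrity tag alongside it. The sketch below computes an HMAC-SHA-256 tag over each token and value pair; the function names `seal_entry` and `verify_entry` and the demo key are assumptions, and this is only one of several possible integrity controls.

```python
import hashlib
import hmac


def seal_entry(token: str, value: str, key: bytes) -> str:
    """Compute an integrity tag covering both the token and its original value."""
    message = f"{token}\x00{value}".encode("utf-8")  # NUL separator avoids ambiguity
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_entry(token: str, value: str, tag: str, key: bytes) -> bool:
    """Return True only if the stored pair still matches its tag."""
    return hmac.compare_digest(seal_entry(token, value, key), tag)


integrity_key = b"demo-integrity-key"  # demo only; keep real keys out of source code
tag = seal_entry("tok_abc", "4111111111111111", integrity_key)

print(verify_entry("tok_abc", "4111111111111111", tag, integrity_key))  # True
print(verify_entry("tok_abc", "4222222222222222", tag, integrity_key))  # False
```

A check like this can run on every detokenization, so a corrupted mapping row is reported instead of silently returning the wrong data.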

Data tokenization vs. data encryption

Data tokenization substitutes sensitive data with non-sensitive tokens, whereas data encryption uses cryptographic techniques to convert data into an unreadable format. Data tokenization and data encryption are both security methods, but which one to use depends on a variety of criteria, including the desired level of data protection, compliance requirements, and unique use cases.

Here are the key differences between the two:

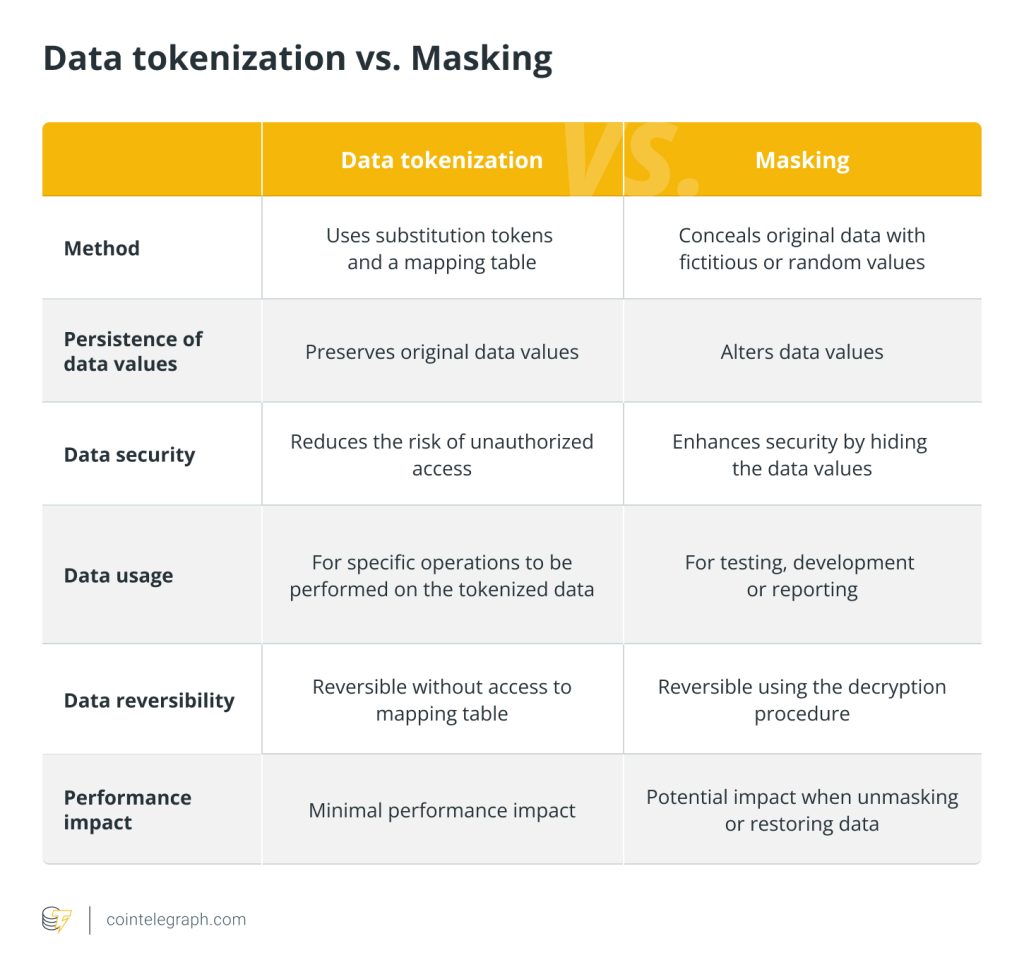

Data tokenization vs. masking

Data tokenization substitutes sensitive data with non-sensitive tokens while keeping a relationship to the original data, while data masking obscures the actual data with fictitious or random values to safeguard sensitive information.

Here are the key differences between the two:
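One difference, reversibility, can be illustrated with a short Python sketch. The `mask` and `tokenize` helpers and the in-memory `vault` are hypothetical names used only for this comparison.

```python
import secrets


def mask(card_number: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters; nothing is stored, so this is one-way."""
    visible = max(0, min(visible, len(card_number)))
    hidden = len(card_number) - visible
    return "*" * hidden + card_number[hidden:]


vault = {}  # token -> original value; this link is what masking lacks


def tokenize(card_number: str) -> str:
    """Replace the value with a random token and remember the mapping."""
    token = secrets.token_hex(8)
    vault[token] = card_number
    return token


card = "4111111111111111"

masked = mask(card)
print(masked)  # ************1111

token = tokenize(card)
print(vault[token] == card)  # True: an authorized lookup recovers the original
```

The masked value cannot be turned back into the card number by anyone, whereas the token can be resolved by a system with access to the vault.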

Data tokenization tools

Organizations can use a variety of data tokenization products on the market to implement data tokenization and improve data security. Several prominent data tokenization tools include:

IBM Guardium Data Protection

IBM Guardium Data Protection is a data security platform that provides data tokenization as part of its full range of data protection capabilities. It uses machine learning techniques to detect suspicious activity around sensitive data kept in databases, data warehouses and other environments with structured data.

Protegrity

Protegrity is a comprehensive platform for data protection that includes data tokenization as one of its key features. To safeguard sensitive data, it offers adaptable tokenization strategies and cutting-edge encryption technologies.

TokenEx

TokenEx is a platform for tokenization that assists organizations in protecting sensitive data by substituting non-sensitive tokens. It provides flexible tokenization techniques and strong security controls.

Voltage SecureData

Voltage SecureData is a data-centric security product with data tokenization capabilities. It gives businesses the ability to tokenize data at the field or file level, enhancing data security.

Proteus Tokenization

Proteus Tokenization is a data tokenization solution that enables businesses to tokenize and secure private information across numerous databases and systems. It offers centralized control and oversight of tokenization procedures.

Written by Onkar Singh
