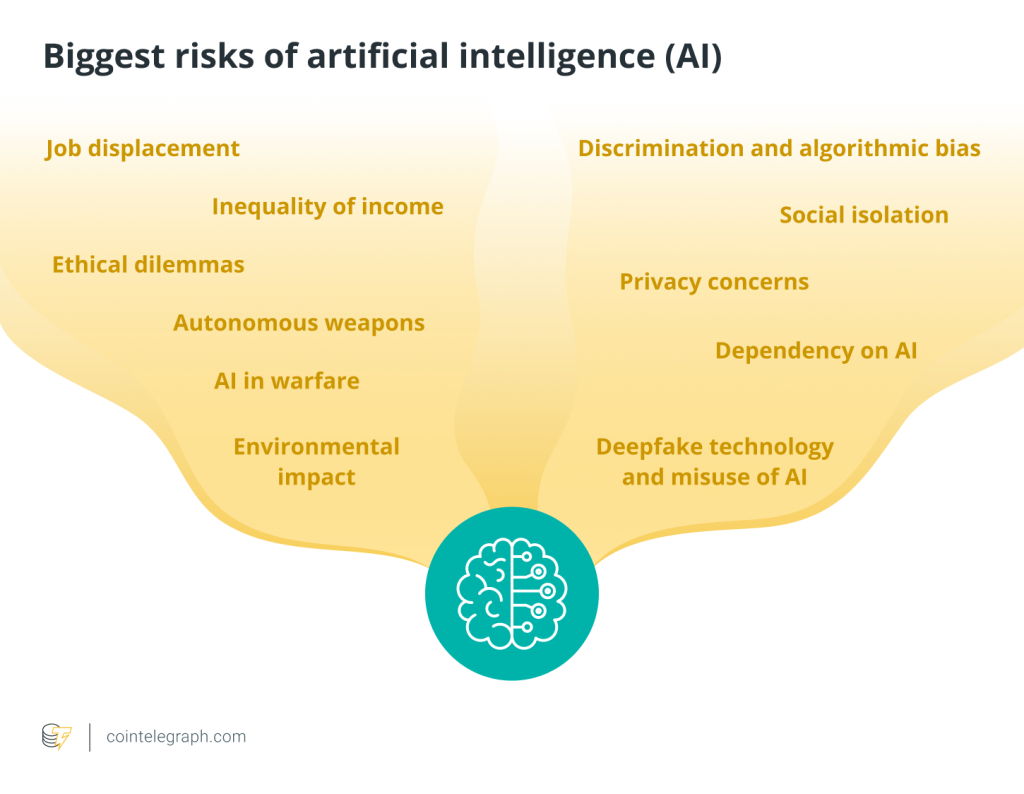

What are the dangers of artificial intelligence?

Is artificial intelligence a threat to humans?

Artificial intelligence (AI) isn't intrinsically a threat to people, but like any other powerful tool, it can be dangerous if used inappropriately. Common concerns include job displacement, algorithmic bias, invasions of privacy and the possible abuse of autonomous weapons or surveillance systems.

The key lies in how people create, govern and apply AI. Responsible AI development requires ethical standards, open-source algorithms and safeguards against abuse. Ultimately, humans must ensure that AI benefits humankind and does not pose unwarranted risks.

Negative effects of artificial intelligence

Unquestionably, AI has brought efficiency and innovation to many facets of our lives. But its widespread use also has drawbacks that deserve careful consideration. Prominent figures in science and technology, including Steve Wozniak, Elon Musk and Stephen Hawking, signed an open letter in 2015 highlighting the need for extensive research into the social effects of AI.

The issues raised include safety concerns about self-driving cars and ethical questions about autonomous weapons used in conflicts. The letter also warns of a future in which, without sufficient caution, humans could lose control over AI systems and their goals and functions. AI's negative consequences range from ethical dilemmas to socio-economic disruption.

Job displacement

Traditional job roles across various industries are at risk due to AI's automation capabilities. AI-driven systems can replace repetitive jobs in manufacturing, customer service and data entry, and even some tasks in specialized fields like accounting and law. This displacement may result in unemployment, requiring workers to retrain in new skills and adapt to new work environments.

Income inequality

AI technology has the potential to worsen economic disparity. Smaller companies struggle to keep up with larger ones that have the resources to invest heavily in AI. This gap in access to AI-powered tools could widen the economic divide between giant corporations and smaller businesses or individuals.

Privacy concerns

AI systems gather and analyze vast amounts of data, and this reliance raises serious privacy issues. Massive collection of personal data increases the risk of data breaches and misuse, and unauthorized access or unethical use can compromise the security and privacy of sensitive information.

For instance, Meta’s AI algorithms faced scrutiny for their data handling practices and potential privacy violations.

Discrimination and algorithmic bias

Because AI algorithms are trained on existing data, they may reinforce societal biases. This bias can take many forms, such as biased court rulings or discriminatory hiring practices. Left unchecked, AI systems can amplify societal prejudices, strengthening preexisting inequalities and producing unfair results.

Facial recognition technologies, for example, have exhibited racial and gender biases. Studies found that some systems misidentified individuals from certain ethnic groups more frequently than others.
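To make the idea of a system that misidentifies some groups more often than others concrete, the sketch below compares error rates across groups. It is a minimal illustration under stated assumptions: the records, the group labels "A" and "B" and the helper name `error_rates_by_group` are hypothetical, and the worst-to-best ratio is just one simple disparity measure, not the method used by any particular study.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the share of misidentified records for each group.

    Each record is a (group, predicted_id, true_id) tuple. This data
    format is a hypothetical example, not taken from any real study.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:  # the system matched the wrong identity
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Hypothetical toy data: group B is misidentified more often than group A.
records = [
    ("A", 1, 1), ("A", 2, 2), ("A", 3, 3), ("A", 4, 5),
    ("B", 6, 7), ("B", 8, 8), ("B", 9, 1), ("B", 2, 2),
]

rates = error_rates_by_group(records)
print(rates)  # {'A': 0.25, 'B': 0.5}

# One simple disparity measure: ratio of the worst to the best group error rate.
disparity = max(rates.values()) / min(rates.values())
print(disparity)  # 2.0
```

A ratio of 2.0 here means group B is misidentified twice as often as group A in this toy data; real audits typically use much larger samples and several complementary fairness metrics.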

Ethical dilemmas

AI raises complex moral questions. For example, autonomous vehicles raise dilemmas about how a machine should decide in life-threatening situations. The development of AI-powered weapons also raises questions about the morality of autonomous weaponry and the risk of hostilities escalating out of control.

Social isolation

Advances in AI-driven technologies such as virtual assistants and social robots could increase social isolation. Relying too heavily on AI for social interaction may erode genuine human connections, harming mental health and interpersonal relationships.

Dependency on AI

As AI becomes more pervasive, the risk of over-reliance on it grows. Relying too much on AI to make crucial decisions in industries like finance or healthcare could reduce human oversight and undermine accuracy and accountability.

Autonomous weapons

The advent of AI-powered autonomous weapons signals a worrying change in conflict. These machines can operate without direct human control, which presents significant moral and practical challenges. The absence of human oversight in combat decision-making is a considerable worry, and the speed and autonomy of these weapons raise doubts about their ability to weigh ethical or moral considerations in chaotic situations.

AI in warfare

Because AI systems make decisions rapidly, using them in war carries a considerable risk of escalating hostilities. The lack of established international regulations governing AI in warfare heightens these uncertainties, underscoring the pressing need for ethical standards and multinational agreements to reduce potential risks.

Deepfake technology and misuse of AI

Despite its technical sophistication, deepfake technology poses severe risks to truth and authenticity. These highly realistic synthetic media can pass for real, putting the accuracy of information at risk. One of the main concerns is the use of deepfakes to spread propaganda and false information: malicious actors can fabricate stories to sway public opinion, cause chaos or damage reputations.

Deepfakes can also violate people's privacy, for example by generating explicit content or altering someone's voice or image. Because genuine content is hard to distinguish from deepfakes, countering their spread is difficult, which highlights the need for better detection techniques and strict laws to preserve credibility and authenticity in media and information sharing.

Environmental impact

AI, particularly deep learning, demands significant computational power. This demand strains the environment by increasing energy consumption and carbon emissions.

Can AI replace humans?

According to Statista.com, the global AI market, estimated at $100 billion, is predicted to grow twentyfold to almost $2 trillion by 2030. AI has developed quickly, becoming proficient in data analysis, task automation and decision support.

However, its capabilities fall short of the depth and breadth of human abilities. AI is skilled in specialized fields like manufacturing and medical diagnostics, but it lacks human creativity, emotional intelligence and adaptability.

Crucially, AI owes its existence and evolution to human ingenuity. AI systems are designed and built by humans and remain limited in what they can do; they cannot replicate the emotional nuance, creativity or intuition that characterize human thought. Google's DeepMind, for instance, has developed AI that can help diagnose eye conditions accurately and support early medical intervention, but human experts conceived and built that system.

Moreover, AI still cannot match human social and emotional intelligence, complex ethical reasoning, contextual awareness, adaptability or leadership. AI is efficient at specific tasks, as Amazon's use of AI-driven robots to streamline warehouse operations demonstrates. However, the conception, design and ethical oversight of such applications remain very much human endeavors.

Because AI is good at processing large amounts of data and humans excel at creativity, empathy and complex decision-making, humans and AI working together often produce the best results. The distinct qualities of human cognition, rooted in experience, emotion and contextual understanding, make complete replacement by AI unlikely for now.

What is the solution to the dangers of AI?

Addressing the risks associated with AI requires a multifaceted approach centered on ethics, regulation and responsible development. First, it is imperative to create thorough ethical frameworks that emphasize accountability, fairness and transparency. Rules are needed to ensure that AI systems follow ethical principles and reduce bias and discrimination.

Furthermore, encouraging cooperation between policymakers, technologists, ethicists and other stakeholders can help create regulations that prioritize people's safety and well-being. Oversight mechanisms, audits and ongoing monitoring are needed to ensure these standards are followed.

Promoting inclusive and diverse development teams can help reduce AI bias, and education and awareness campaigns should emphasize the ethical application of AI. To maximize the benefits of AI while reducing its risks, a collective effort involving ethical standards, legal restrictions, diverse viewpoints and continual assessment is essential.

Written by Tayyub Yaqoob
