Nvidia CEO’s simple solution to AI hallucination could upend crypto — but only if it works

The only problem: Jensen Huang’s solution is already out there, and AI systems still hallucinate.

Nvidia CEO Jensen Huang recently said he believes human-level artificial intelligence (AI) will probably be realized within the next five years and that hallucinations, one of the field’s biggest challenges, will be simple to solve.

Huang’s comments came during a speech at the Nvidia GTC developers conference in San Jose, California, on March 20.

During the event, Huang addressed the idea of artificial general intelligence (AGI). According to a report from TechCrunch, the CEO told reporters that the arrival of AGI depends on how it is benchmarked:

“If we specified AGI to be something very specific, a set of tests where a software program can do very well — or maybe 8% better than most people — I believe we will get there within 5 years.”

It’s unclear exactly what kind of tests Huang was referring to. The term “general” in the context of artificial intelligence typically refers to a system that, regardless of the benchmark, is capable of anything a human of average intelligence could do given sufficient resources.

He also discussed “hallucinations,” an unintended result of training large language models to act as generative AI systems. Hallucinations occur when AI models output new, usually incorrect, information that isn’t contained in their training data.

According to Huang, solving hallucinations should be a simple matter. “Add a rule: For every single answer, you have to look up the answer,” he told audience members before adding, “The AI shouldn’t just answer; it should do research first to determine which of the answers are the best.”

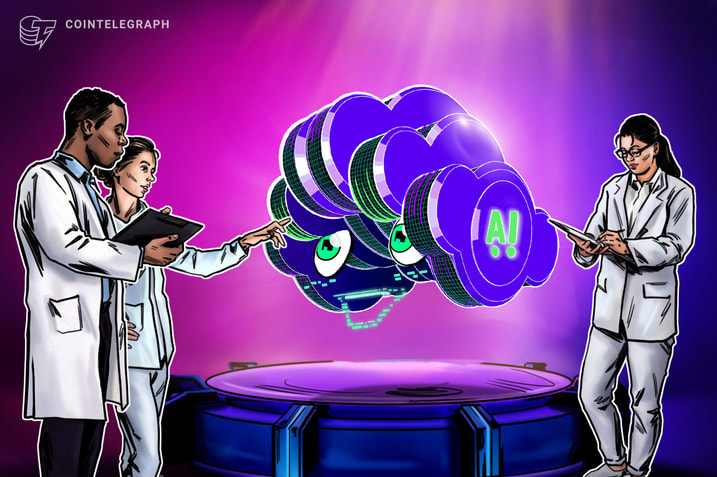

Setting aside the fact that Microsoft’s Copilot, Google’s Gemini, OpenAI’s ChatGPT and Anthropic’s Claude 3 all feature the ability to cite internet sources for their outputs, solving AI’s hallucination problem once and for all could revolutionize myriad industries, including finance and crypto.

Currently, the makers of the aforementioned systems advise caution when using generative AI systems for functions where accuracy is important. The user interface for ChatGPT, for example, warns that “ChatGPT can make mistakes,” and advises users to “consider checking important information.”

In the world of finance and cryptocurrency, accuracy can spell the difference between profit and loss. As things stand, this means generative AI systems are of limited use to finance and crypto professionals.


While experiments involving trading bots powered by generative AI systems exist, they are usually bound by hard-coded rules that prevent autonomous execution, meaning they are preprogrammed to execute trades in a tightly controlled manner, similar to placing limit orders.

If generative AI models no longer hallucinated entirely fabricated outputs, they should, ostensibly, be capable of conducting trades and making financial recommendations and decisions entirely independently of human input. In other words, if the problem of hallucinations in AI were solved, fully automated trading could become a reality.
