Andrew Yang and 300 international experts petition for government action on AI deepfakes

A coalition of over 300 technology, AI and digital ethics experts worldwide advocates for government intervention to combat deepfakes, citing concerns over their societal impact.

Over 300 experts in the fields of technology, artificial intelligence (AI), digital ethics and child safety from around the world have come together to sign an open letter urging governments to take “immediate action” to tackle deepfakes.

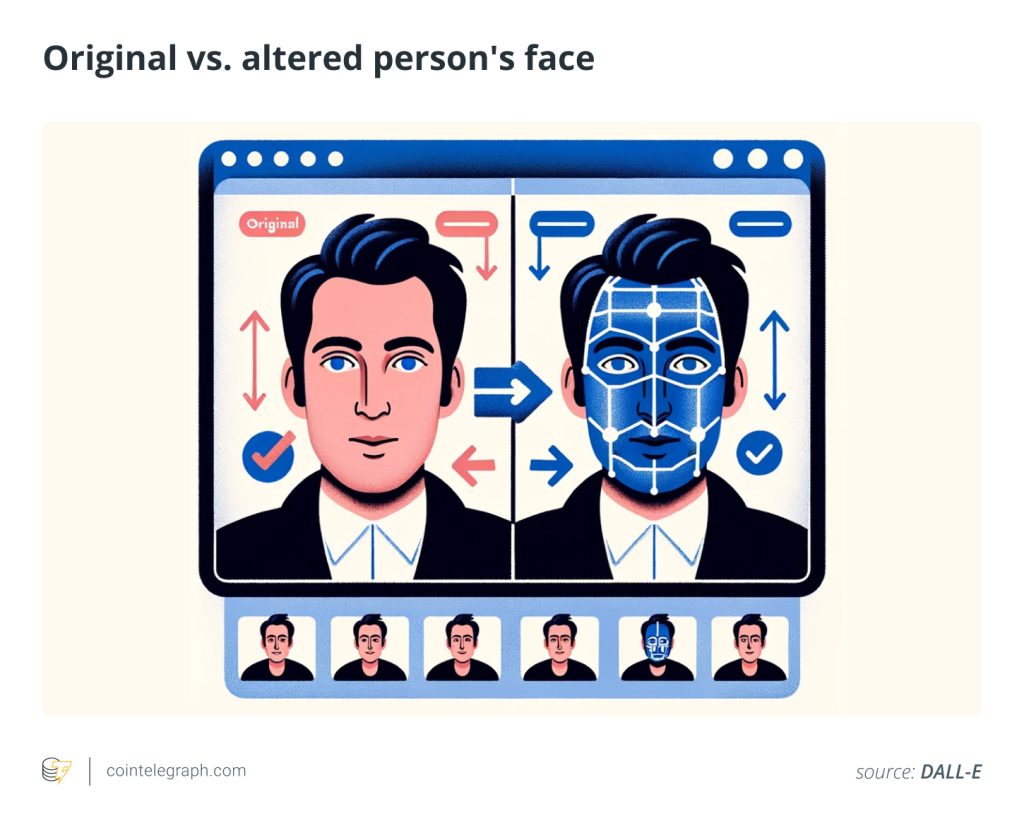

The letter, released on Feb. 21 and titled “Disrupting the Deepfake Supply Chain,” urges governments, policymakers and political leaders to “impose obligations” in the supply chain to halt the proliferation of deepfakes.

It lays out three main courses of action. The first is to fully criminalize deepfake child pornography, even when the children depicted are fictional.

The second is criminal penalties for anyone who "knowingly creates or facilitates the spread of harmful deepfakes." The third is a requirement that software developers and distributors ensure their media products don't create harmful deepfakes, with penalties if their safeguards are not up to standard.

Signatories of the letter include United States politician and crypto supporter Andrew Yang, cognitive psychologist Steven Pinker, two former presidents of Estonia and hundreds of other intellectuals from countries including the U.S., Canada, the United Kingdom, Australia, Japan and China.

Andrew Critch, lead author of the letter and an AI researcher in the Department of Electrical Engineering and Computer Science at the University of California, Berkeley, said:

“Deepfakes are a huge threat to human society and are already causing growing harm to individuals, communities, and the functioning of democracy.”

He said he and his colleagues wrote the letter so that the global public could show support for lawmaking efforts to stop deepfakes with "immediate action."


Joy Buolamwini, founder of the Algorithmic Justice League and author of Unmasking AI, said that the need for “biometric rights” has become “ever more apparent.”

“While no one is immune to algorithmic abuse, those already marginalized in society shoulder an even larger burden. 98% of non-consensual deepfakes are of women and girls. That is why it is up to all of us to speak out against harmful uses of AI.”

She said there must be “high consequences for egregious abuses of AI.”

Other organizations involved in the creation and dissemination of the letter include the Machine Intelligence and Normative Theory Lab and the Center for AI Safety.

In the U.S., current legislation does not sufficiently target and prohibit the production and dissemination of deepfakes. However, recent events have prompted regulators to act more quickly.

In response to a wave of viral deepfakes of pop singer Taylor Swift that flooded social media platforms, U.S. lawmakers began calling for swift legislation to criminalize the production of deepfake images.

A few days later, the U.S. banned AI-generated voices in scam robocalls after a deepfake using the likeness of President Joe Biden circulated. On Feb. 16, the U.S. Federal Trade Commission proposed updates to regulations that prohibit AI-enabled impersonation of businesses or government agencies, with the aim of protecting consumers.
