OpenAI text-to-video model Sora wows X but still has weaknesses

OpenAI’s latest AI video generation tool, Sora, has left social media users in awe over its realism, though it’s not yet ready for a full public release.

Artificial intelligence firm OpenAI unveiled its first-ever text-to-video model to a strong reception on Thursday, though the firm admits the model still has a ways to go.

The new generative AI model, dubbed Sora, is said to create detailed videos from simple text prompts, extend existing videos, and even generate scenes from a still image.

Introducing Sora, our text-to-video model.

Sora can create videos of up to 60 seconds featuring highly detailed scenes, complex camera motion, and multiple characters with vibrant emotions. https://t.co/7j2JN27M3W

Prompt: “Beautiful, snowy… pic.twitter.com/ruTEWn87vf

— OpenAI (@OpenAI) February 15, 2024

In a Feb. 15 blog post, OpenAI said the AI model can generate movie-like scenes at resolutions of up to 1080p. These scenes can include multiple characters, specific types of motion, and accurate details of both the subject and the background.

How Sora works

Much like OpenAI’s image-based predecessor DALL-E 3, Sora is what’s known as a “diffusion” model.

In a diffusion model, the output, whether a video or an image, starts out looking like “static noise,” and the model gradually transforms it by “removing the noise” over many steps.
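To make the idea of “removing the noise” step by step more concrete, here is a toy sketch in Python. It is not OpenAI’s code or Sora’s architecture. It assumes a linear noise schedule, a deterministic DDIM-style update, and a hypothetical “oracle” denoiser (`oracle_predict_noise`) that already knows the clean target and stands in for a trained neural network. A short list of numbers plays the role of pixels.

```python
import math
import random

# Toy illustration of diffusion sampling on a short list of numbers (stand-ins for pixels).
# Assumptions, not from OpenAI: a linear noise schedule, a deterministic DDIM-style update,
# and a hypothetical "oracle" denoiser that knows the clean target and replaces a trained network.


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return alpha_bar_t, the fraction of clean signal left at each step of a linear schedule."""
    alpha_bars = []
    prod = 1.0
    denom = max(num_steps - 1, 1)  # avoid dividing by zero when num_steps == 1
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / denom
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def oracle_predict_noise(x_t, alpha_bar, target):
    """Hypothetical denoiser: returns exactly the noise separating x_t from the clean target."""
    return [
        (x - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)
        for x, x0 in zip(x_t, target)
    ]


def sample(target, num_steps=1000, seed=0):
    """Start from random static and remove a little noise at each step."""
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure "static noise"
    for t in reversed(range(num_steps)):
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = oracle_predict_noise(x, a_t, target)
        # Estimate of the clean signal implied by the predicted noise.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps)]
        # Step to the slightly less noisy previous state.
        x = [
            math.sqrt(a_prev) * x0h + math.sqrt(1.0 - a_prev) * e
            for x0h, e in zip(x0_hat, eps)
        ]
    return x


if __name__ == "__main__":
    print(sample([0.5, -1.0, 2.0]))
```

In a real text-to-video system, the denoiser would be a learned network guided by the text prompt, which is where the content of the output comes from; the oracle here only demonstrates the gradual, many-step cleanup of the initial noise.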

Announcing Sora — our model which creates minute-long videos from a text prompt: https://t.co/SZ3OxPnxwz pic.twitter.com/0kzXTqK9bG

— Greg Brockman (@gdb) February 15, 2024

The AI firm wrote that Sora builds on past research from both its GPT and DALL-E 3 models, which the firm claims helps the model represent user inputs more “faithfully.”

OpenAI admitted that Sora still has several weaknesses: it can struggle to accurately simulate the physics of a complex scene and may muddle cause and effect.

“For example, a person might take a bite out of a cookie, but afterward, the cookie may not have a bite mark.”

The new tool can also confuse the “spatial details” of a given prompt by mixing up lefts and rights or failing to follow precise descriptions of directions, said the firm.

OpenAI said the new generative model is, for now, only available to “red teamers,” experts tasked with probing a system for weaknesses, who will assess “critical areas for harms or risks,” as well as to select designers, visual artists, and filmmakers, who will provide feedback on how to advance the model.

In December 2023, a report from Stanford University revealed that the LAION database, used to train AI image-generation tools, contained thousands of images of illegal child abuse material, which raises serious ethical and legal concerns for text-to-image and text-to-video models.

Users on X left “speechless”

Dozens of demo videos showing Sora in action have been circulating on X, where Sora is now trending with over 173,000 posts.

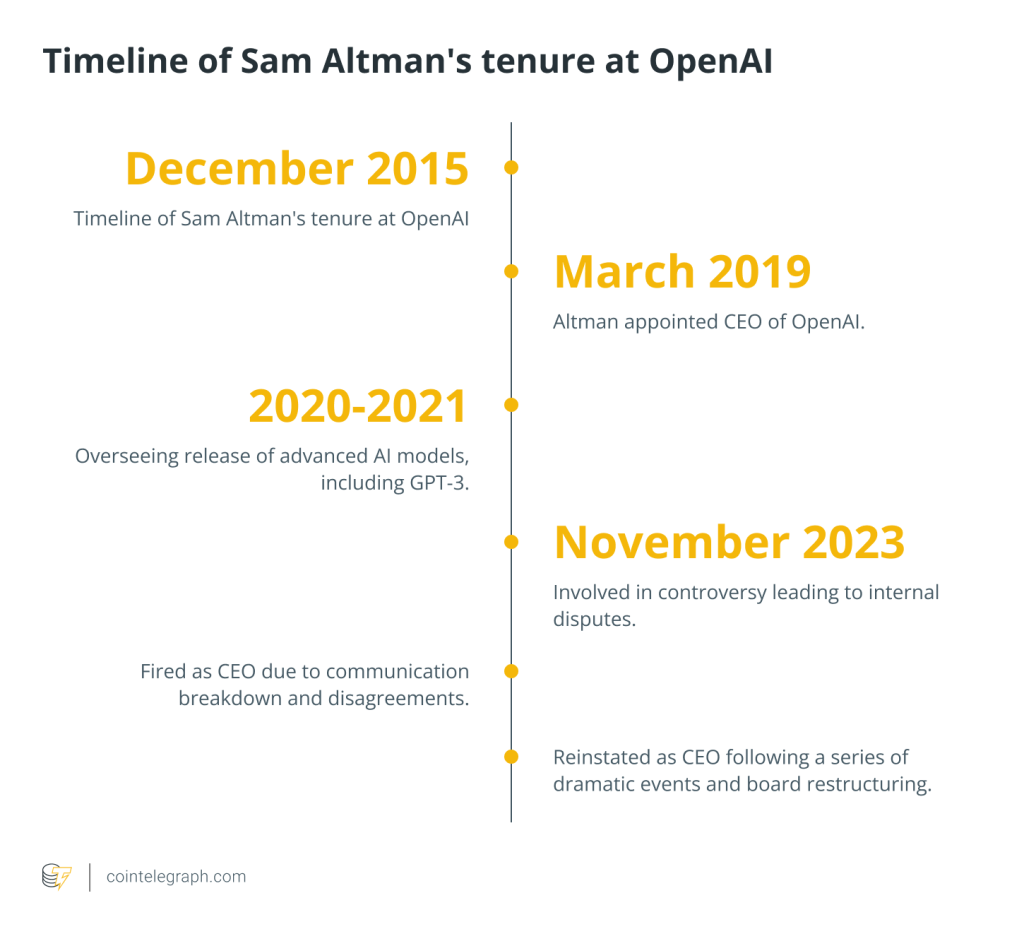

In a bid to show off what the new generative model is capable of, OpenAI CEO Sam Altman opened himself up to custom video-generation requests from users on X, sharing a total of seven Sora-generated videos, ranging from a duck riding on the back of a dragon to golden retrievers recording a podcast on a mountaintop.

https://t.co/uCuhUPv51N pic.twitter.com/nej4TIwgaP

— Sam Altman (@sama) February 15, 2024

AI commentator Mckay Wrigley — along with many others — wrote that the video generated by Sora had left him “speechless.”

In a Feb. 15 post to X, Nvidia senior researcher Jim Fan declared that anyone who believed Sora to be just another “creative toy,” like DALL-E 3, would be dead wrong.

If you think OpenAI Sora is a creative toy like DALLE, … think again. Sora is a data-driven physics engine. It is a simulation of many worlds, real or fantastical. The simulator learns intricate rendering, "intuitive" physics, long-horizon reasoning, and semantic grounding, all… pic.twitter.com/pRuiXhUqYR

— Jim Fan (@DrJimFan) February 15, 2024

In Fan’s view, Sora is less a video-generation tool than a “data-driven physics engine,” since the AI model isn’t just generating abstract video but is also simulating the physics of the objects in the scene itself.
