What is black-box AI, and how does it work?

Black-box models have become widely used in artificial intelligence (AI) systems because they tend to produce highly accurate results. However, they often lack legitimacy because of the inherently hidden nature of their operations. Researchers are trying to open up these opaque systems by combining them with widely accepted, easy-to-understand white-box models.

What is black-box AI?

In recent years, there’s been an ongoing discussion in the scientific community about the appropriate use of black-box artificial intelligence (BAI) models. BAI refers to AI systems whose internal workings are hidden or unknown, sometimes even to their designers.

BAI models are often very accurate and effective at making predictions, but because they lack transparency and interpretability, they are not always well received in certain sectors and industries, such as finance, healthcare or the military.

There are even indirect legal restrictions that limit their applications. In the United States, for example, the Equal Credit Opportunity Act requires lenders to give applicants the specific reasons for denying credit, which makes black-box models whose decisions cannot be explained difficult to use.

Similar indirect restrictions come from the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). These laws affect the use of black-box models by limiting the collection, storage and processing of personal data. They also set consent requirements for data use, with the aim of giving individuals the right to understand how their data is being used.

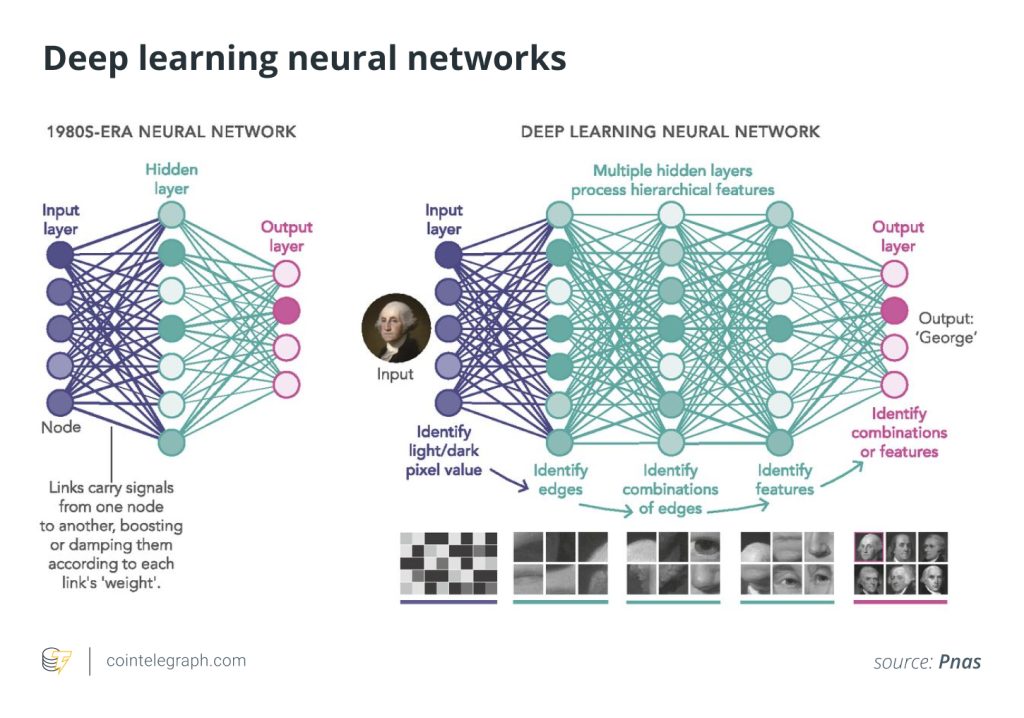

Deep learning algorithms are a common example of black-box models. Deep learning is a subfield of machine learning that focuses on training deep neural networks: networks with multiple hidden layers between the input and output layers. These deep architectures enable them to learn and represent increasingly abstract and complex patterns and relationships in the data.

The ability to learn hierarchical representations is a key factor behind the success of deep learning in various tasks, such as image and speech recognition, natural language processing, and reinforcement learning.
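To make "multiple hidden layers" concrete, here is a minimal pure-Python sketch of a forward pass through a tiny deep network. The layer sizes, the hand-picked weights and the ReLU activation are illustrative assumptions, not taken from any real model; in practice the weights are learned from data and can number in the millions, which is part of what makes such models hard to read.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output neuron


def relu(v: Vector) -> Vector:
    """Nonlinear activation: negative values are clipped to zero."""
    return [max(0.0, x) for x in v]


def dense(x: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Pass the input through every hidden layer (dense + ReLU), then a linear output layer."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    weights, biases = output
    return dense(x, weights, biases)


# Hypothetical network: 2 inputs -> hidden layers of 3 and 2 neurons -> 1 output.
layers = [
    ([[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]], [0.0, -0.5, 0.1]),  # hidden layer 1
    ([[1.0, -1.0, 0.5], [0.3, 0.8, -0.2]], [0.0, 0.1]),           # hidden layer 2
    ([[1.0, -2.0]], [0.2]),                                       # output layer
]

print(forward([1.0, 2.0], layers))
```

For these made-up weights the call returns a single value of about -3.96. Even at this size, the output is a chain of weighted sums and clippings rather than a rule a person can read off.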

The main alternative to BAI is white-box models. White-box models are designed to be transparent and explainable: the user can see which features the model bases its decisions on and can understand the reasoning behind its predictions. This transparency increases the accountability of white-box AI models and makes model validation and auditing easier, which is important in applications where errors can have significant consequences.

How does BAI work?

The expression black box does not refer to a specific methodology; it’s rather a descriptive umbrella term for a group of models that are very hard or impossible to interpret. However, a few categories of models are commonly described as BAI. One category works in high-dimensional spaces, such as support vector machines (SVMs). Another is neural networks, whose design is inspired by the structure of the brain.

A support vector machine is a supervised machine learning model that can be used for classification and regression tasks. It is particularly effective at binary classification problems, where the goal is to separate data points into two classes. An SVM looks for an optimal hyperplane, the one with the widest margin, that divides the data points of the different classes. It uses a technique called the kernel trick, which lets it behave as if the input data had been mapped into a higher-dimensional space, without computing that mapping explicitly. In that space, the data may become linearly separable even if it wasn’t in the original space.
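The kernel trick can be checked numerically. For the degree-2 polynomial kernel K(x, z) = (x · z)², the kernel value equals an ordinary dot product after the explicit mapping φ(x) = (x₁², √2·x₁x₂, x₂²) into three dimensions, so an SVM can work in that space without ever building φ. The sketch below is pure Python; the XOR-style points and the names `poly2_kernel` and `phi` are illustrative assumptions. It checks the identity and shows that points no straight line can separate in 2-D fall on opposite sides of a plane in the mapped space.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def poly2_kernel(x: Point, z: Point) -> float:
    """Degree-2 homogeneous polynomial kernel: K(x, z) = (x . z)^2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def phi(x: Point) -> Tuple[float, float, float]:
    """Explicit 3-D feature map whose dot product reproduces poly2_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


# XOR-style data (illustrative): no straight line in 2-D separates the classes.
points: List[Tuple[Point, int]] = [
    ((1.0, 1.0), +1), ((-1.0, -1.0), +1),
    ((1.0, -1.0), -1), ((-1.0, 1.0), -1),
]

# The kernel matches the dot product in the mapped space for every pair of points
# (abs_tol absorbs rounding when the exact value is 0).
assert all(
    math.isclose(poly2_kernel(x, z), dot(phi(x), phi(z)), abs_tol=1e-9)
    for x, _ in points for z, _ in points
)

# In the mapped space, the plane "middle coordinate = 0" separates the two classes.
for x, label in points:
    side = 1 if phi(x)[1] > 0 else -1
    print(x, "->", tuple(round(v, 3) for v in phi(x)), "label", label, "side", side)
```

Each printed `side` matches its `label`, which is the sense in which the data "becomes linearly separable" after the mapping.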

SVMs are often considered black-box models because, although they provide a clear separation between classes, the specific features or combinations of features that drive a given decision are hard to identify, often even for the experts who built the model. This is especially true when a nonlinear kernel is used. SVMs have been successfully applied in many fields, including text classification and image recognition.
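The standard form of a trained kernel SVM's decision rule helps explain this opacity. A new input **x** is classified by a weighted sum of kernel similarities to the support vectors **x**ᵢ (the set 𝒮), with learned coefficients αᵢ ≥ 0, class labels yᵢ ∈ {−1, +1} and a bias b. The radial basis function (RBF) kernel with width parameter γ is shown only as one common choice of K:

```latex
f(\mathbf{x}) = \operatorname{sign}\!\Big(\sum_{i \in \mathcal{S}} \alpha_i \, y_i \, K(\mathbf{x}_i, \mathbf{x}) + b\Big),
\qquad
K(\mathbf{x}_i, \mathbf{x}) = \exp\!\big(-\gamma \,\lVert \mathbf{x}_i - \mathbf{x} \rVert^2\big)
```

With a linear kernel, K(**x**ᵢ, **x**) = **x**ᵢ · **x**, the sum collapses into a single weight vector **w** = Σ αᵢ yᵢ **x**ᵢ with one readable weight per feature. With a nonlinear kernel such as the RBF, no such per-feature weight exists, and each decision depends jointly on the distances to every support vector.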

Neural networks, or artificial neural networks, are a class of machine learning models inspired by the structure of biological neural networks in the brain. They are composed of interconnected nodes, called artificial neurons or units, organized into layers. Neural networks are capable of learning and extracting patterns from data by adjusting the weights and biases of the connections between neurons. Neural networks can be highly complex and are often considered black-box models due to their inherent opacity.
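"Adjusting the weights and biases" can be illustrated with the smallest possible network: one artificial neuron with a sigmoid activation, trained by gradient descent on the logical OR function. The data, learning rate, number of epochs and the helper names `train_neuron` and `predict` are illustrative assumptions; real networks apply the same kind of update, computed by backpropagation, to many parameters across many layers.

```python
import math
from typing import List, Tuple

Example = Tuple[Tuple[float, float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Example], lr: float = 0.5,
                 epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit one sigmoid neuron with per-example gradient descent on log loss."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = p - target       # gradient of log loss w.r.t. the weighted sum
            w[0] -= lr * err * x1  # move each weight against its gradient
            w[1] -= lr * err * x2
            b -= lr * err          # the bias is adjusted the same way
    return w, b


def predict(w: List[float], b: float, x: Tuple[float, float]) -> int:
    return int(sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5)


# Logical OR: output 1 if either input is 1.
OR_DATA: List[Example] = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
                          ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

weights, bias = train_neuron(OR_DATA)
print("learned weights:", weights, "bias:", bias)
print([predict(weights, bias, x) for x, _ in OR_DATA])
```

After training, the neuron reproduces OR with two positive weights and a negative bias. A single neuron is still readable; the opacity discussed in this section comes from stacking many of them with nonlinear activations.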

The complexity and opacity of neural networks arise from several factors, including their layered architectures of hidden units, the high dimensionality of input features and their nonlinear activation functions. Understanding the internal workings of a neural network, especially in deep neural networks with many layers, can be challenging because the relationship between the input features and the output is nonlinear and distributed across numerous parameters.

Neural networks are used for various tasks, including natural language processing, recommendation systems and speech recognition.

How do interpretability and explainability help to distinguish between white-box and black-box models?

The alternative approach to BAI is the white-box approach. The term white box is likewise a descriptive expression, covering machine learning models whose results are, by design, interpretable and explainable to experts. Interpretability and explainability are crucial to understanding how models are categorized as white box or black box.

Interpretability refers to the ability to understand how a model’s prediction depends on its input. Explainability means being able to explain the model’s prediction to a human in understandable terms. In white-box models, the model’s logic, its implementation and its inner workings are explainable and interpretable.
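Interpretability in this sense is easiest to see in a linear model, where a prediction decomposes exactly into an intercept plus one contribution (weight × value) per input. The feature names, weights and applicant values below are hypothetical, chosen only to show how a white-box model's output can be read term by term.

```python
from typing import Dict

# Hypothetical linear scoring model: score = intercept + sum(weight * value).
INTERCEPT = 300.0
WEIGHTS: Dict[str, float] = {
    "income_thousands": 2.0,
    "years_employed": 10.0,
    "missed_payments": -40.0,
}


def explain_linear(applicant: Dict[str, float]) -> Dict[str, float]:
    """Return each feature's additive contribution to the score."""
    return {name: w * applicant[name] for name, w in WEIGHTS.items()}


def score(applicant: Dict[str, float]) -> float:
    """The prediction is exactly the intercept plus the sum of the contributions."""
    return INTERCEPT + sum(explain_linear(applicant).values())


applicant = {"income_thousands": 50.0, "years_employed": 4.0, "missed_payments": 2.0}
for name, contribution in explain_linear(applicant).items():
    print(f"{name:>18}: {contribution:+.1f}")
print(f"{'total score':>18}: {score(applicant):.1f}")
```

Here the printout shows +100.0, +40.0 and -80.0 on top of the intercept, for a total of 360.0, so the reason behind the score is visible without any extra explanatory model.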

Simple models such as linear regression and decision trees are most often mentioned as white-box models. Decision trees are hierarchical structures that use a sequence of binary decisions to classify outcomes based on their inputs. White-box models have intrinsic interpretability, which means that no additional models are needed to interpret and explain them. However, they often have the disadvantage of lower performance, in the form of lower accuracy.
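A decision tree's intrinsic interpretability comes from the fact that it can be written out as nested if/else rules. The hand-written tree below is a hypothetical credit-approval example (the thresholds and feature names are assumptions, not taken from any real lender). Each prediction comes with the exact path of decisions that produced it, which is the kind of reason a black-box model cannot provide directly.

```python
from typing import Dict, List, Tuple


def decide(applicant: Dict[str, float]) -> Tuple[str, List[str]]:
    """Hypothetical tree with three decision nodes; returns the decision and the path taken."""
    path: List[str] = []
    if applicant["missed_payments"] > 2:
        path.append("missed_payments > 2")
        return "deny", path
    path.append("missed_payments <= 2")
    if applicant["income_thousands"] >= 40:
        path.append("income_thousands >= 40")
        return "approve", path
    path.append("income_thousands < 40")
    if applicant["years_employed"] >= 5:
        path.append("years_employed >= 5")
        return "approve", path
    path.append("years_employed < 5")
    return "deny", path


decision, reasons = decide({"missed_payments": 1, "income_thousands": 30, "years_employed": 2})
print(decision, "because", " and ".join(reasons))
```

For this applicant the tree denies credit and reports the three conditions that led there, which is the kind of specific reason a lender could pass on to the applicant.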

In the case of BAI models, accuracy is often much higher. However, only post hoc interpretation is possible, which means that an additional explanatory model is required to understand them. Because a black-box model only exposes the output it produces for a given input, its inner workings remain unknown.

Based on this need for post hoc interpretation, some sources describe further categories of models as black boxes. Depending on the task, their outputs can be extremely challenging for humans to interpret, and additional models are required to understand their results.

One such category is probabilistic models, such as undirected Markov networks or directed acyclic Bayesian networks. Markov and Bayesian networks are both probabilistic graphical models that represent dependencies among variables.

They model complex systems with interconnected variables, where the probability distribution is shaped by the relationships between these variables. The logic of the representation is the same for both: in the graph, nodes represent variables, and edges represent relationships between them.
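The graph of a Bayesian network translates directly into a factorization of the joint probability: each variable contributes one term conditioned on its parents. The sketch below uses a simplified version of the textbook sprinkler example, in which Rain and Sprinkler are the parents of WetGrass; all probability values are illustrative assumptions. It computes P(WetGrass = true) by summing the factorized joint over the parent values, then applies Bayes' rule.

```python
from itertools import product
from typing import Dict, Tuple

# Illustrative conditional probability tables (CPTs); the numbers are assumptions.
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: 0.1, False: 0.9}
# P(WetGrass = True | Rain, Sprinkler)
P_WET_GIVEN: Dict[Tuple[bool, bool], float] = {
    (True, True): 0.99,
    (True, False): 0.80,
    (False, True): 0.90,
    (False, False): 0.00,
}


def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """Joint probability factorized along the graph: P(R) * P(S) * P(W | R, S)."""
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return P_RAIN[rain] * P_SPRINKLER[sprinkler] * (p_wet if wet else 1.0 - p_wet)


# Marginal P(WetGrass = True): sum the joint over all parent configurations.
p_wet = sum(joint(r, s, True) for r, s in product([True, False], repeat=2))

# Posterior P(Rain = True | WetGrass = True) by Bayes' rule.
p_rain_given_wet = sum(joint(True, s, True) for s in [True, False]) / p_wet

print(f"P(wet) = {p_wet:.4f}")
print(f"P(rain | wet) = {p_rain_given_wet:.4f}")
```

With these numbers, P(wet) is 0.2358. With three variables the tables can still be read by hand; with hundreds of interconnected variables, tracing why a query returns a given probability becomes much harder, which is why some sources group these models with black boxes.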

Can black-box and white-box AI work together?

Post hoc interpretability methods combine black-box and white-box algorithms. Post hoc interpretability refers to the process of applying interpretability techniques to an already trained machine learning model. It is a retrospective analysis of the model’s decisions rather than an inherent property of the model itself. Post hoc interpretability methods aim to provide insights into how the model arrived at its predictions, which features were important and how those features influenced the model’s output.

Many post hoc interpretability methods can be applied to different machine learning models regardless of their underlying algorithm or architecture. Commonly used post hoc interpretability techniques are feature-importance analysis, local explanations and surrogate models. Feature-importance analysis helps to determine which features contribute the most to the model’s predictions.
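Feature-importance analysis can be done without opening the model at all. One common model-agnostic variant, permutation importance, shuffles one feature column at a time and measures how much the model's accuracy drops. In the sketch below, the "black box" is just a Python function that secretly uses only its first feature; the model, the data, the seeds and the function names are hypothetical, chosen so the result is easy to check.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Sequence[float]], int]


def black_box(row: Sequence[float]) -> int:
    """Stand-in for an opaque model: in truth it only looks at feature 0."""
    return int(row[0] > 0.5)


def accuracy(model: Model, X: List[Row], y: List[int]) -> float:
    return sum(model(r) == t for r, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[int],
                           seed: int = 0) -> List[float]:
    """Accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)  # break the link between feature j and the labels
        X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        importances.append(baseline - accuracy(model, X_perm, y))
    return importances


# Hypothetical data: feature 0 drives the label, feature 1 is pure noise.
data_rng = random.Random(42)
X = [[i / 100, data_rng.random()] for i in range(100)]
y = [black_box(row) for row in X]

print(permutation_importance(black_box, X, y))
```

Shuffling the noise feature leaves accuracy untouched (importance 0.0), while shuffling feature 0 drops accuracy to roughly chance, revealing which input the model relies on without inspecting its internals.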

Local explanations explain individual predictions to show why the model made a specific prediction for a particular input. Surrogate models are interpretable models, such as decision trees, that are built to approximate the behavior of the original model.
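Both ideas can be sketched in a few lines against an opaque function. Here a local explanation is a finite-difference sensitivity: nudge each input of one instance slightly and see how the black box's score moves. A global surrogate is a one-split decision tree (a "stump") chosen to agree with the black box's labels as often as possible; its agreement rate is usually called fidelity. The black-box formula, the input grid, the step size and all function names are hypothetical assumptions for illustration.

```python
import math
from itertools import product
from typing import List, Sequence, Tuple


def black_box_score(x: Sequence[float]) -> float:
    """Opaque scoring function (hypothetical): nonlinear in both inputs."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - x[1] ** 2)))


def black_box_label(x: Sequence[float]) -> int:
    return int(black_box_score(x) >= 0.5)


def local_sensitivity(x: Sequence[float], eps: float = 1e-4) -> List[float]:
    """Local explanation: central finite-difference slope of the score per input."""
    slopes = []
    for j in range(len(x)):
        up = list(x)
        up[j] += eps
        down = list(x)
        down[j] -= eps
        slopes.append((black_box_score(up) - black_box_score(down)) / (2 * eps))
    return slopes


def fit_stump(X: List[Tuple[float, float]], labels: List[int]) -> Tuple[int, float, float]:
    """Global surrogate: the single rule 'x[j] > t -> 1' that best agrees with the labels."""
    best = (0, 0.0, -1.0)  # (feature, threshold, fidelity)
    for j in range(2):
        for t in sorted({x[j] for x in X}):
            agree = sum(int(x[j] > t) == lab for x, lab in zip(X, labels)) / len(X)
            if agree > best[2]:
                best = (j, t, agree)
    return best


grid = [(i / 10, j / 10) for i, j in product(range(-10, 11), repeat=2)]
labels = [black_box_label(x) for x in grid]
feature, threshold, fidelity = fit_stump(grid, labels)
print(f"surrogate rule: x[{feature}] > {threshold} -> 1 (fidelity {fidelity:.3f})")
print("local slopes at (0.2, 0.5):", local_sensitivity((0.2, 0.5)))
```

On this grid the surrogate settles on a split on `x[0]` near 0 that agrees with the black box on roughly 96% of points, and the local slopes show that, around (0.2, 0.5), raising the first input increases the score while raising the second decreases it. The surrogate is readable but approximate; its fidelity tells you how far to trust it.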

Post hoc interpretability methods can also support model validation and model auditing. In model validation, post hoc interpretability provides insights into the black-box model’s decision-making process, enabling a deeper understanding of its behavior and performance.

This information supports error analysis, bias assessment and generalization evaluation, helping to validate the model’s performance, identify potential limitations or biases, and ensure its reliability and robustness.

By gaining insights into the factors driving the black-box model’s predictions, model auditors can evaluate compliance with ethical standards, legal requirements and industry-specific guidelines. Post hoc interpretability techniques support the audit process by revealing potential biases, identifying sensitive attributes or variables and evaluating the model’s alignment with societal norms. This understanding enables auditors to assess risks, mitigate unintended consequences, and ensure responsible use of the black-box model.

What are examples of black-box models?

Black-box models are used extensively in the development of autonomous vehicles, where they play a critical role in many aspects of the vehicles’ operation. Deep neural networks, for example, enable perception, object detection and decision-making capabilities.

In the context of perception, black-box models process data from different sensors to understand the surrounding environment. By analyzing complex patterns in the data, they identify objects and build a representation of the scene that serves as the foundation for decision-making. Beyond these core driving functions, there are also experiments that use black-box models to evaluate risk factors.

The financial sector is another potential beneficiary of black-box models. Neural networks and support vector machines are employed in financial markets for tasks such as stock price prediction, credit risk assessment, algorithmic trading and portfolio optimization.

These models capture complex patterns in financial data, supporting informed decision-making. Cryptocurrency trading bots are one type of product that can use black-box AI to analyze market data and make trading decisions.

Trading bots can buy and sell cryptocurrencies automatically based on market trends and other factors. The algorithms used by the bots can be highly effective at generating profits, but they are complex and difficult to interpret. Also, they are not necessarily able to spot irregularities or market-moving news.

Another industry where black-box models are used intensively is healthcare. Deep learning models have gained significant traction in various healthcare applications due to their ability to process large amounts of data and extract complex patterns. Black-box models are employed in medical imaging analysis, such as radiology or pathology.

Convolutional neural networks are used for tasks like diagnosing diseases from X-ray or CT scans, detecting cancerous lesions or identifying abnormalities. Black-box models are also utilized in remote patient monitoring and wearable devices to analyze sensor data, such as heart rate, blood pressure or activity levels.

They can detect anomalies, predict health deterioration or provide personalized recommendations for maintaining well-being. However, their lack of transparency also raises serious concerns in the healthcare domain.

Written by Eleonóra Bassi
