Computer vision applications in the metaverse

Computer vision sits at the intersection of artificial intelligence (AI), machine learning and computer graphics, changing how machines perceive and interact with the visual world. It is a field of AI that trains machines to interpret and comprehend visual information much as humans do.

By combining deep learning models with image processing techniques, computer vision lets computers approximate aspects of the human visual system. It goes beyond image recognition to encompass tasks such as object detection, pattern recognition and visual search.

By collecting and analyzing information from digital images and videos, AI helps computers define attributes, accurately identify objects, and classify them with precision.

This extensive processing equips machines with the ability to comprehend diverse visual content and react intelligently to what they “see.”

As the technology advances, computer vision promises to reshape industries from healthcare to autonomous vehicles, open new frontiers in AI-driven innovation, and create interesting use cases in tandem with other emerging technologies such as blockchain and the metaverse.

This article explores the rising field of computer vision, the technology behind it and its impact on applications within the virtual worlds of the metaverse.

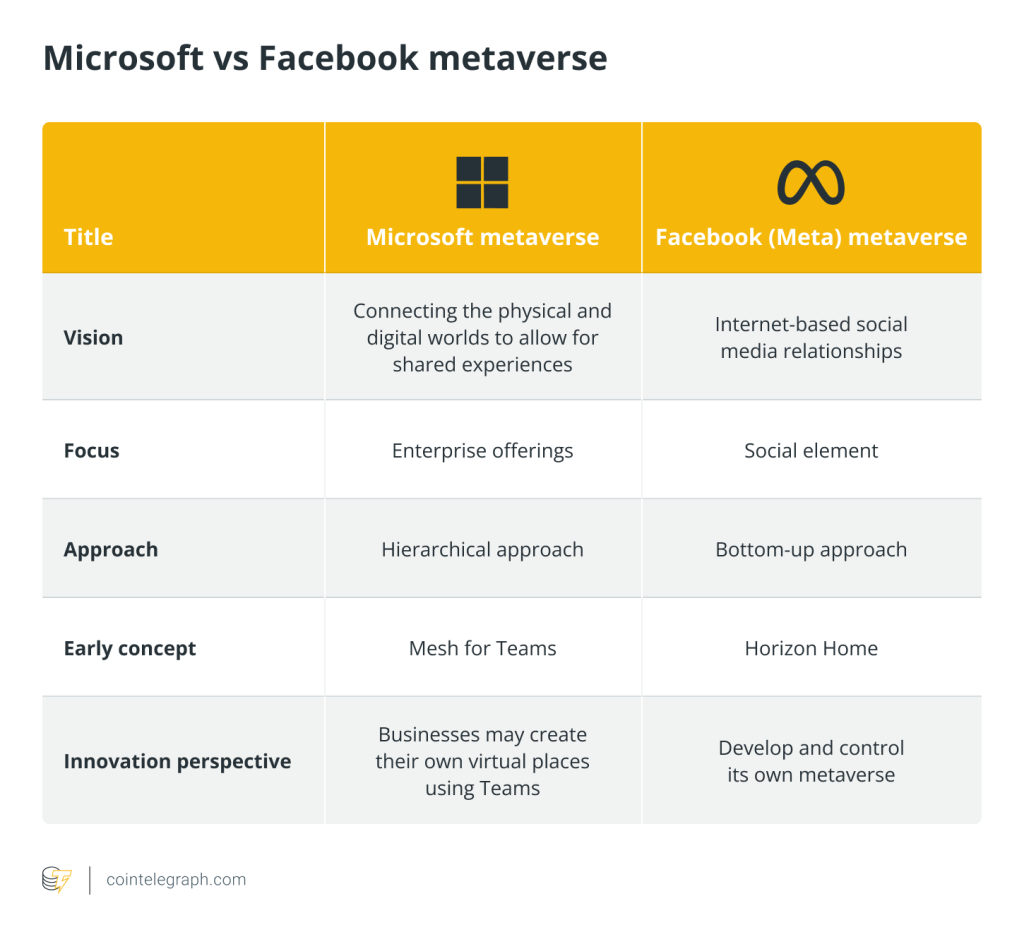


How are computer vision and the metaverse interlinked?

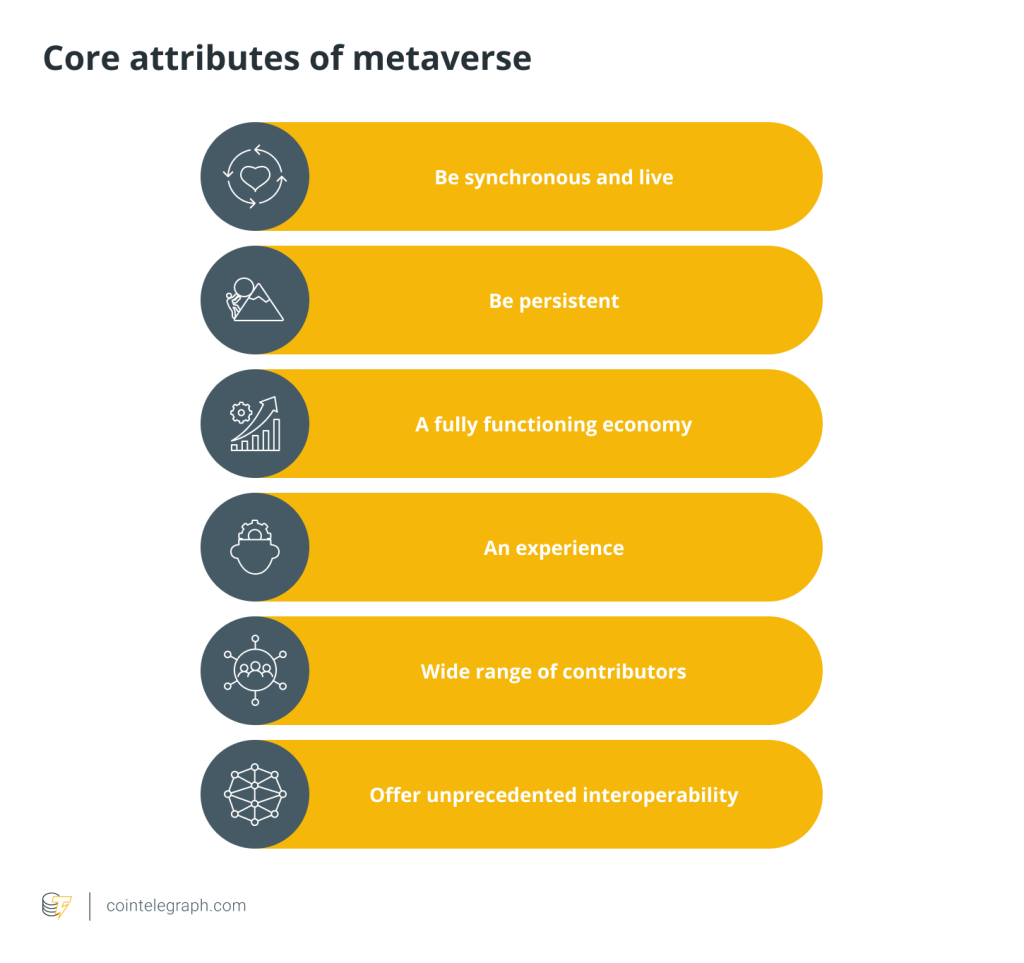

The metaverse is an immersive digital world. The concept of the metaverse, a digitized layer of life over the existing physical realm, was first suggested by Neal Stephenson in his 1992 novel Snow Crash. For decades afterward, however, the technology needed to make the metaverse practical remained elusive.

The technologies upon which the metaverse relies, including augmented reality (AR), virtual reality (VR), computer vision and personal devices, are now developing faster than ever before, making the metaverse increasingly available and accessible. Computer vision plays a vital role here: it processes, analyzes and interprets digital images and videos so that systems can make meaningful decisions and take actions.

In the metaverse, computer vision algorithms are crucial for creating immersive virtual environments and enabling interactions within them. These algorithms facilitate real-time tracking of users' movements, expressions and gestures, allowing for more natural and engaging interactions in virtual spaces. In extended reality (XR) applications, computer vision helps recreate the user's environment in 3D.

Additionally, computer vision is instrumental in object recognition and scene understanding, which enhance the metaverse experience by providing context awareness and spatial orientation, enabling virtual objects and characters to react intelligently to their surroundings.

As the metaverse continues to evolve, computer vision will help enable more lifelike and interactive virtual worlds, opening up new possibilities for social, gaming, real-estate, educational and business applications.

How does computer vision work?

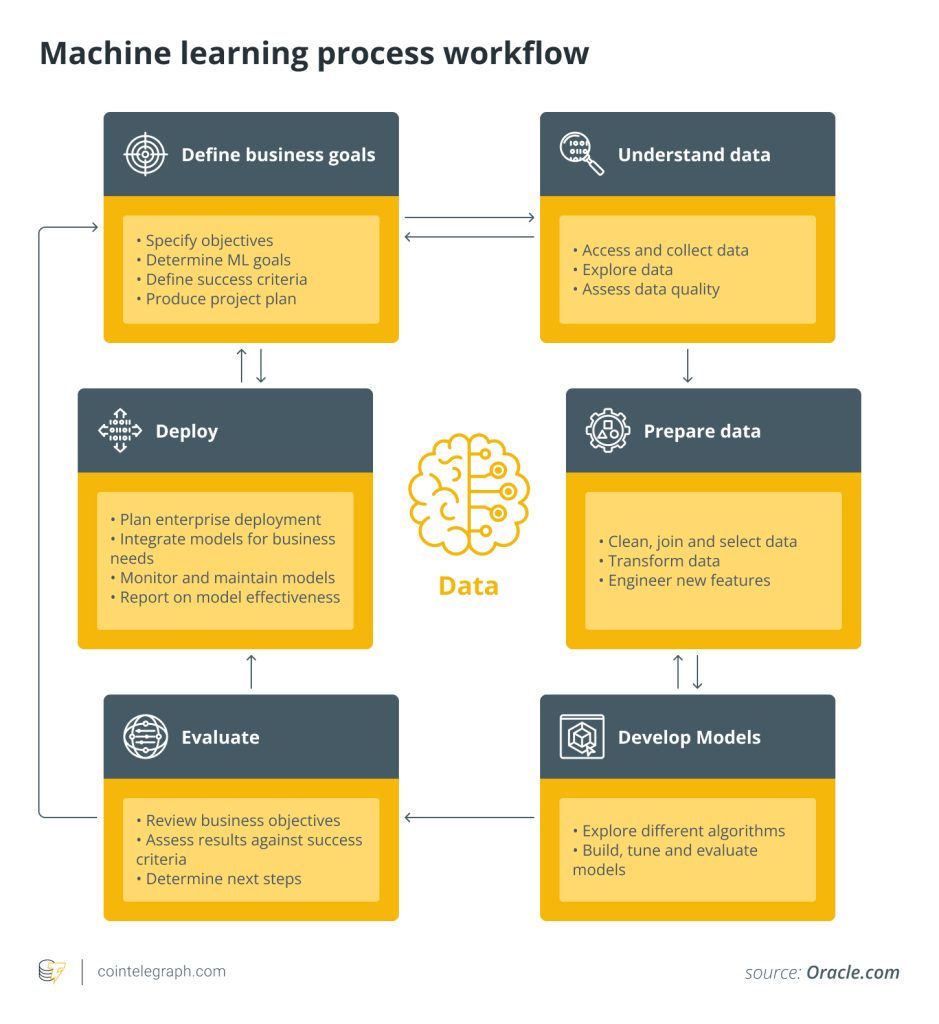

Computer vision relies on pattern recognition techniques, large datasets, deep learning and sensors to achieve precision and speed. Its algorithms are trained on massive amounts of visual data, often stored in the cloud.

Deep learning enables computers to learn from this data by recognizing common patterns, and those patterns then help determine the content of other images. Computer vision algorithms therefore need a library of known patterns to compare against, and are often trained by first being fed thousands of labeled or pre-identified images.
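To make the idea of learning from labeled images concrete, here is a minimal sketch in Python. It uses a nearest-centroid classifier, which is far simpler than the deep learning models described above, and the tiny 2x2 "images", labels and function names are all hypothetical, chosen only for illustration.

```python
from math import dist

# Hypothetical labeled training set: each "image" is a flattened 2x2
# grayscale patch (values 0.0-1.0) paired with a human-assigned label.
TRAINING_DATA = [
    ([0.9, 0.8, 0.9, 1.0], "bright"),
    ([1.0, 0.9, 0.8, 0.9], "bright"),
    ([0.1, 0.0, 0.2, 0.1], "dark"),
    ([0.0, 0.2, 0.1, 0.1], "dark"),
]


def learn_patterns(samples):
    """Average the pixels of each label to get one reference pattern per class."""
    sums, counts = {}, {}
    for pixels, label in samples:
        acc = sums.setdefault(label, [0.0] * len(pixels))
        for i, value in enumerate(pixels):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(pixels, patterns):
    """Return the label whose learned pattern is closest to the new image."""
    return min(patterns, key=lambda label: dist(pixels, patterns[label]))


patterns = learn_patterns(TRAINING_DATA)
print(classify([0.7, 0.9, 0.8, 0.8], patterns))  # an unseen, mostly light patch
print(classify([0.2, 0.1, 0.0, 0.3], patterns))  # an unseen, mostly dark patch
```

The "library of known patterns" here is just one averaged patch per label. A production system would learn much richer patterns from thousands of images, but the workflow is the same: label, learn, then compare new inputs against what was learned.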

An image is identified in the following steps using computer vision:

Step 1: Image acquisition

This step involves capturing visual data, such as photos and videos, using devices such as cameras or medical imaging tools.

Step 2: Image interpretation

The interpretation device analyzes images using pattern recognition to match content with known patterns, be they generic shapes or specific identifiers.

Step 3: Feature extraction

This involves identifying key elements like lines and shapes and segmenting components with vital information for further analysis.

Step 4: Pattern recognition

The features extracted in the previous step are interpreted and processed by employing sophisticated machine learning algorithms. These algorithms enable the computer to classify objects, recognize faces, track movements, and undertake other complex tasks.

These steps are usually automated, with the analysis of visual data impacting various industries and applications.
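The steps above can be sketched as a toy pipeline. The example below is only an illustration under simplifying assumptions: the "camera" returns a synthetic 5x5 grid, the features are plain pixel differences, and interpretation and recognition are collapsed into a single hand-written rule rather than a trained model. All function names are hypothetical.

```python
def acquire_image():
    """Step 1: stand-in for a camera; returns a 5x5 grayscale grid (0-255)."""
    # A bright vertical bar on a dark background.
    return [[255 if col == 2 else 0 for col in range(5)] for _ in range(5)]


def extract_features(image):
    """Step 3: measure edge strength along each axis with pixel differences."""
    rows, cols = len(image), len(image[0])
    # Differences between horizontal neighbours respond to vertical edges.
    vertical_edges = sum(
        abs(image[r][c + 1] - image[r][c])
        for r in range(rows)
        for c in range(cols - 1)
    )
    # Differences between vertical neighbours respond to horizontal edges.
    horizontal_edges = sum(
        abs(image[r + 1][c] - image[r][c])
        for r in range(rows - 1)
        for c in range(cols)
    )
    return {"vertical_edges": vertical_edges, "horizontal_edges": horizontal_edges}


def recognize_pattern(features):
    """Steps 2 and 4: match the extracted features against known patterns."""
    v, h = features["vertical_edges"], features["horizontal_edges"]
    if v > h:
        return "vertical bar"
    if h > v:
        return "horizontal bar"
    return "unknown"


image = acquire_image()
features = extract_features(image)
print(features, recognize_pattern(features))
```

Swapping the rule in `recognize_pattern` for a trained classifier is what turns this kind of toy into the machine learning pipeline the article describes.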

The role of computer vision in creating immersive metaverse environments

In the metaverse, computer vision creates a 3D user environment, tracks user direction and location, and represents users as avatars. An avatar must be able to move seamlessly and interoperably.

Computer vision’s image processing ability is vital for a seamless connection between the metaverse and physical environments. It helps ensure a high-quality 3D display of the virtual world even in adverse visual conditions such as haze, low or high luminosity, or rain.
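As one small illustration of coping with poor lighting, the sketch below applies min-max contrast stretching to an under-exposed frame. This is a basic classical technique picked for brevity, not necessarily what any metaverse platform uses, and the frame values and function name are hypothetical.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly rescale pixel values so they span the full output range."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no contrast to stretch; return a copy.
        return [row[:] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (p - lo) * scale) for p in row] for row in image]


# Hypothetical under-exposed frame: all values crowd into the range 10-40.
dim_frame = [
    [10, 20, 10],
    [20, 40, 20],
    [10, 20, 10],
]
enhanced = stretch_contrast(dim_frame)
for row in enhanced:
    print(row)
```

After stretching, the darkest pixel maps to 0 and the brightest to 255, so details that were crowded into a narrow band become easier for later stages to detect. Haze and rain typically call for more specialized methods.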

Here are some ways in which computer vision plays a role in creating immersive metaverse environments and objects:

Avatar and gesture recognition

Computer vision systems can track a user’s facial expressions, body movements and gestures in real time, allowing users to control and animate their avatars within the metaverse. This enhances social interactions by conveying non-verbal cues, making virtual conversations more lifelike.
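A rough sketch of how tracked body positions might be turned into an avatar gesture is shown below. It assumes a pose-tracking model has already produced 2D keypoints for a frame; the keypoint names, coordinates and gesture labels are hypothetical, and real systems generally rely on learned models rather than a single comparison.

```python
def detect_gesture(keypoints):
    """Map tracked keypoints to a coarse gesture label for an avatar.

    Keypoints are (x, y) image coordinates where y grows downward,
    so a wrist is "raised" when its y value is smaller than the shoulder's.
    """
    left_up = keypoints["left_wrist"][1] < keypoints["left_shoulder"][1]
    right_up = keypoints["right_wrist"][1] < keypoints["right_shoulder"][1]
    if left_up and right_up:
        return "both_hands_raised"
    if left_up or right_up:
        return "hand_raised"
    return "neutral"


# Hypothetical keypoints for a single video frame.
frame_keypoints = {
    "left_wrist": (100, 80),
    "left_shoulder": (110, 150),
    "right_wrist": (200, 220),
    "right_shoulder": (190, 150),
}
print(detect_gesture(frame_keypoints))
```

Running this per frame and feeding the label to an avatar's animation system is one simple way a gesture in the physical world could be mirrored in a virtual one.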

Spatial awareness

Computer vision enables metaverse platforms to understand the physical space around users. This helps in adapting virtual elements to the real world, allowing for the seamless integration of digital objects and information within the user’s physical environment. Users can interact with virtual objects as if they were physically present.

Scene understanding

Computer vision algorithms can analyze the environment, identifying surfaces, obstacles and lighting conditions. This information can be used to render virtual objects more realistically, adjusting their appearance and behavior to match the real-world context.
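To illustrate how lighting information might feed into rendering, the sketch below estimates the average brightness of a camera frame and dims a virtual object's color to match. It is a deliberately crude assumption-laden example: real renderers estimate light direction and color as well, and the function names and sample values are hypothetical. The luminance weights are the standard Rec. 601 coefficients.

```python
def estimate_brightness(frame):
    """Mean luminance of an RGB frame, normalized to the range 0.0-1.0."""
    total, count = 0.0, 0
    for row in frame:
        for r, g, b in row:
            # Rec. 601 luma weights for converting RGB to perceived brightness.
            total += 0.299 * r + 0.587 * g + 0.114 * b
            count += 1
    return total / (count * 255)


def shade_virtual_object(base_color, brightness):
    """Scale a virtual object's RGB color by the estimated scene brightness."""
    return tuple(round(channel * brightness) for channel in base_color)


# Hypothetical 2x2 camera frame from a dimly lit room (mid-gray pixels).
dim_room = [[(128, 128, 128), (128, 128, 128)],
            [(128, 128, 128), (128, 128, 128)]]
brightness = estimate_brightness(dim_room)
print(shade_virtual_object((200, 100, 50), brightness))
```

A virtual object shaded this way appears darker in a dim room and brighter in daylight, which is one small part of making it look like it belongs in the scene.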

Safety and moderation

Computer vision can be employed for safety and moderation purposes, identifying and mitigating inappropriate or harmful content and ensuring a safer and more enjoyable metaverse experience.

Realistic non-player characters (NPCs)

Computer vision can be used to make NPCs more responsive to the user’s actions and emotions. NPCs can recognize and react to the user’s expressions and gestures, enhancing the overall immersion in virtual worlds.

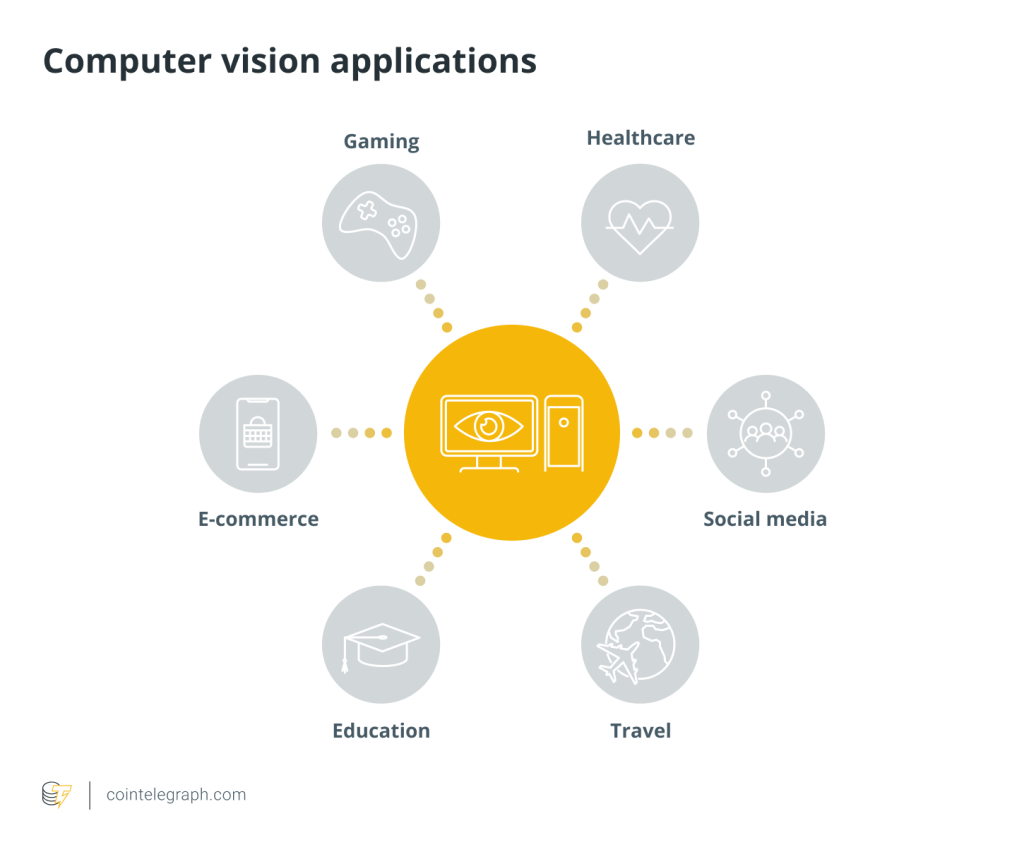

Harnessing the power of computer vision and metaverse in diverse industries

Computer vision, within the context of the metaverse, holds tremendous potential across various industries:

Gaming

Computer vision enables a more immersive game experience by tracking player movements, facial expressions and gestures and translating them into in-game actions. This enhances the realism and interactivity of virtual worlds, making gaming more engaging. In-game object recognition and scene understanding enrich gameplay by introducing elements that interact intelligently with the player.


Social media

On social platforms in the metaverse, computer vision enhances user interactions and content creation. It enables lifelike avatars that mirror user expressions and movements, making virtual interactions feel more authentic. It also supports content moderation by detecting and removing harmful images and videos, helping to maintain a safe and respectful online environment.

Travel

Immersive virtual tourism lets users explore tourist destinations and historical landmarks through sensory-rich experiences, while improving accessibility for people with disabilities.

Education

Computer vision and the metaverse can help overcome challenges in the education sector by creating modules that enhance blended learning, language learning, competence-based education and inclusive education.

For instance, students can tour different geopolitical regions in the metaverse to understand the characteristics and setting of each place. They can then create avatars based on the culture of their chosen locale and design the place according to their preferences.

Healthcare

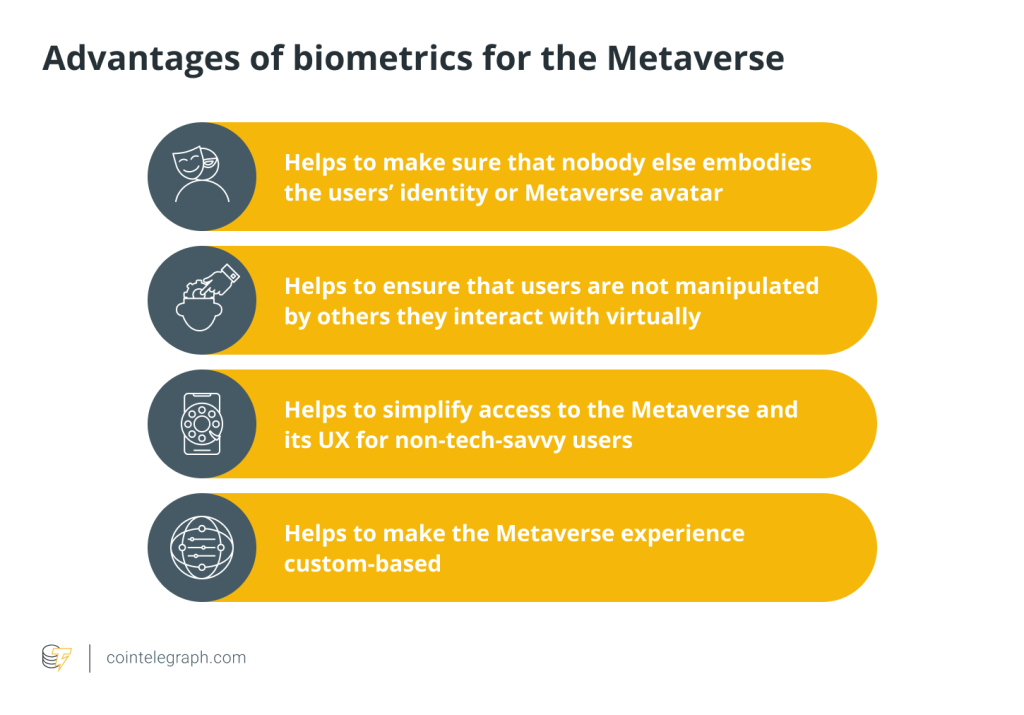

Computer vision, coupled with technologies such as biometrics and blockchain-enabled record keeping, is revolutionizing patient care and medical education. Virtual reality simulations in the metaverse can offer surgical trainees realistic training for complex medical procedures, improving their skills before they engage with real patients.

E-commerce

Metaverse-driven e-commerce is redefining the retail landscape, with virtual stores becoming increasingly popular. Brands like Nike and Gucci are partnering with platforms such as Roblox to offer digital shopping experiences, sometimes even surpassing the appeal of physical products. Furthermore, patents filed by companies like Walmart indicate the integration of cryptocurrency, nonfungible tokens and virtual goods into the metaverse shopping experience.

Computer vision challenges in the metaverse

Computer vision presents a set of significant challenges that span both technical and ethical dimensions. On the ethical front, privacy concerns loom large, especially in the context of vision-powered surveillance. The controversial use of face recognition and detection technologies has led to outright prohibitions in some regions due to these privacy concerns.

Computer vision, in the wrong hands, can be employed to create fake content, including images, videos or text, which can have far-reaching consequences, from spreading misinformation to damaging reputations.

Computer vision algorithms can inherit biases present in training data, leading to biased or unfair outcomes. Ensuring fairness, diversity and inclusivity in metaverse interactions is a critical challenge, as biased algorithms can perpetuate discrimination and inequality.
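One simple way to surface this kind of bias is to compare a model's accuracy across groups of users. The sketch below does that for a hypothetical set of evaluation records; the group names, labels and data are invented for illustration, and accuracy gap is only one of many possible fairness measures.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} from (group, predicted, actual) records."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation results for a gesture-recognition model.
evaluation = [
    ("group_a", "wave", "wave"), ("group_a", "nod", "nod"),
    ("group_a", "wave", "wave"), ("group_a", "nod", "nod"),
    ("group_b", "wave", "wave"), ("group_b", "nod", "wave"),
    ("group_b", "wave", "nod"), ("group_b", "nod", "nod"),
]
print(accuracy_by_group(evaluation))
print(accuracy_gap(evaluation))
```

A large gap suggests the training data under-represents some users, which is a signal to collect more diverse data or retrain before deploying the model.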

Achieving a high level of realism and immersion in the metaverse is a technical challenge. Any discrepancies or delays in user movements and gestures can break the illusion of presence and immersion.
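The delay problem can be framed as a latency budget: every stage between a user's movement and the updated image on screen adds time. The sketch below sums hypothetical stage timings and checks them against a target. Both the stage names and the 20-millisecond budget are illustrative assumptions, not figures from this article.

```python
def end_to_end_latency_ms(stage_timings_ms):
    """Total delay from user movement to updated display, in milliseconds."""
    return sum(stage_timings_ms.values())


def within_budget(stage_timings_ms, budget_ms):
    """True if the whole pipeline fits inside the latency budget."""
    return end_to_end_latency_ms(stage_timings_ms) <= budget_ms


# Hypothetical per-frame timings for a tracking-and-rendering pipeline.
timings = {
    "camera_capture": 8,
    "pose_estimation": 12,
    "rendering": 7,
    "display": 5,
}
BUDGET_MS = 20  # illustrative target, chosen only for this example

print(end_to_end_latency_ms(timings), within_budget(timings, BUDGET_MS))
```

Breaking the total down by stage shows where optimization effort would pay off most, in this example the pose estimation step.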

In addressing these challenges, researchers and policymakers must strike a balance between harnessing the potential of computer vision for positive applications and safeguarding against its unintended consequences and ethical pitfalls.

Written by Shailey Singh
