New York Times lawsuit faces pushback from OpenAI over ethical AI practices

OpenAI said it views the NYT lawsuit as being “without merit” and listed its media partnerships and opt-out options for publishers, dismissing claims of misuse of information as “not typical or allowed.”

In a blog post on Jan. 8, OpenAI addressed a lawsuit brought against it by The New York Times (NYT), calling it “without merit” and listing its collaborative efforts with various news organizations.

According to the blog post, OpenAI was in discussions with the NYT that appeared to be “progressing constructively.”

“Their lawsuit on December 27—which we learned about by reading The New York Times—came as a surprise and disappointment to us.”

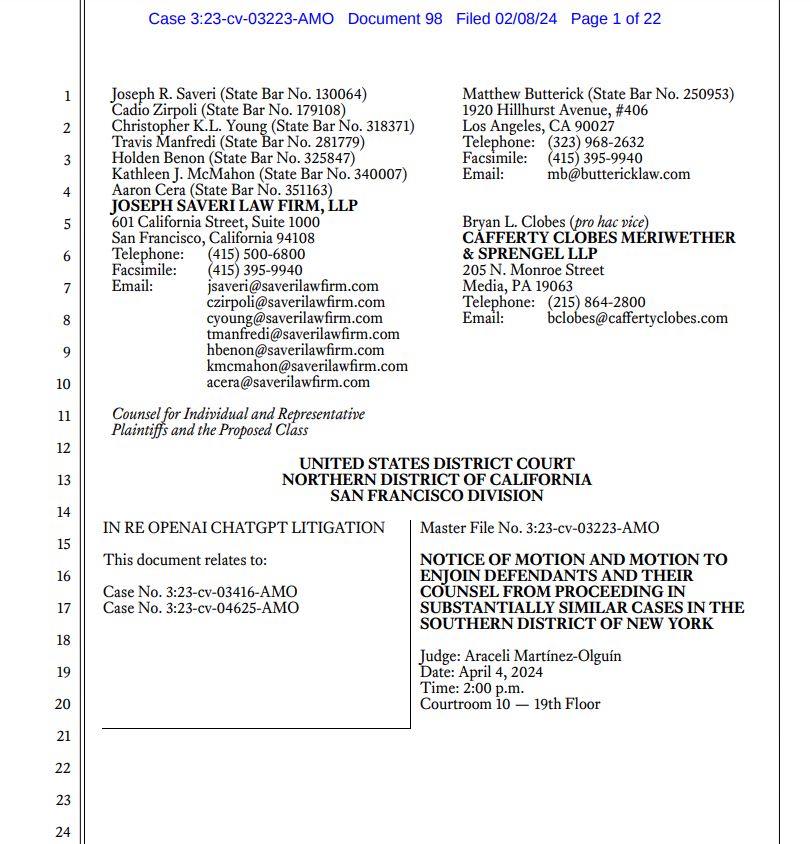

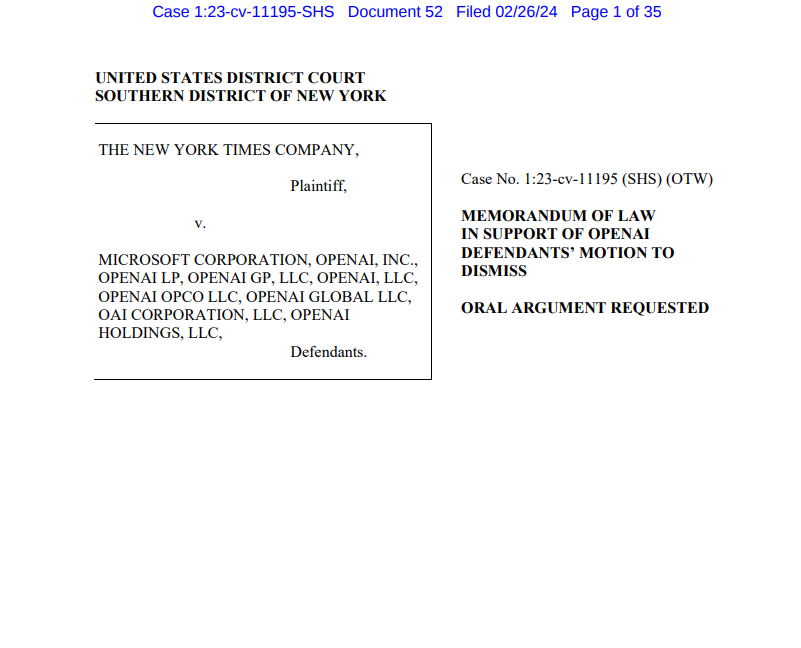

The NYT lawsuit against OpenAI and Microsoft alleges the unauthorized use of its content for training artificial intelligence (AI) chatbots. OpenAI’s rebuttal disagrees with the NYT claims and says it views this as a moment to “clarify our business, intent and how we build out the technology.”

It listed four claims on which it bases its arguments, the first of which is that it actively collaborates with news organizations and creates new opportunities for news.

It also said that its training is “fair use” but that it provides an “opt-out” because it is “the right thing to do.” Additionally, the AI developer claims that the “regurgitation” of content is a “rare bug” that is being fixed and, lastly, that the NYT is not telling the “full story.”

OpenAI named various media industry partnerships it has developed, such as a recent integration with German media giant Axel Springer to tackle AI “hallucinations.”

It also named the News/Media Alliance as an organization it is liaising with to “explore opportunities, discuss their concerns, and provide solutions.”


However, this comes after the News/Media Alliance published a 77-page paper on Oct. 30, accompanying a submission to the United States Copyright Office, which says AI models have been trained on data sets that use significantly more content from news publishers than from other sources.

OpenAI also highlighted the “opt-out process” it has implemented for publishers, which prevents its tools from accessing the websites of publishers who have employed it. It pointed out that The New York Times adopted it in August 2023.
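The article does not describe how the opt-out works. As a rough illustration, assuming it relies on a standard robots.txt rule aimed at OpenAI's crawler (the `GPTBot` user-agent token), the following Python sketch checks whether a site's rules would block that crawler. The robots.txt content, the `publisher.example` URL, and the `is_allowed` helper are hypothetical and used only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example publisher (an assumption, not taken
# from any real site): block the GPTBot crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://publisher.example/news/story.html"
    print("GPTBot allowed:", is_allowed(ROBOTS_TXT, "GPTBot", url))
    print("Other crawler allowed:", is_allowed(ROBOTS_TXT, "SomeOtherBot", url))
```

A rule like this only signals a publisher's preference. It works because the crawler chooses to honor it, which is consistent with OpenAI framing the opt-out as something it offers voluntarily.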

A key argument in the NYT case against OpenAI and Microsoft is that the website “www.nytimes.com” is the most highly represented proprietary source, ranking behind only Wikipedia and a database of U.S. patent documents overall.

The NYT also claims it reached out to OpenAI and Microsoft in April 2023 to raise concerns over intellectual property and “explore the possibility of an amicable resolution,” but to no avail.

Despite OpenAI’s rebuttal, lawyers have called the NYT’s case the “best case yet” alleging that generative AI commits copyright infringement.

OpenAI said any misuse claimed by the NYT would be “not typical or allowed user activity” and its content is not a “substitute for The New York Times.”

“Regardless, we are continually making our systems more resistant to adversarial attacks to regurgitate training data, and have already made much progress in our recent models.”

“We regard The New York Times’ lawsuit to be without merit,” the post concluded. “Still, we are hopeful for a constructive partnership with The New York Times and respect its long history…”
