OpenAI has a ‘highly accurate’ tool to detect AI content, but no release plans

The company expressed worries that its detection system could somehow “stigmatize” the use of AI among non-English speakers.

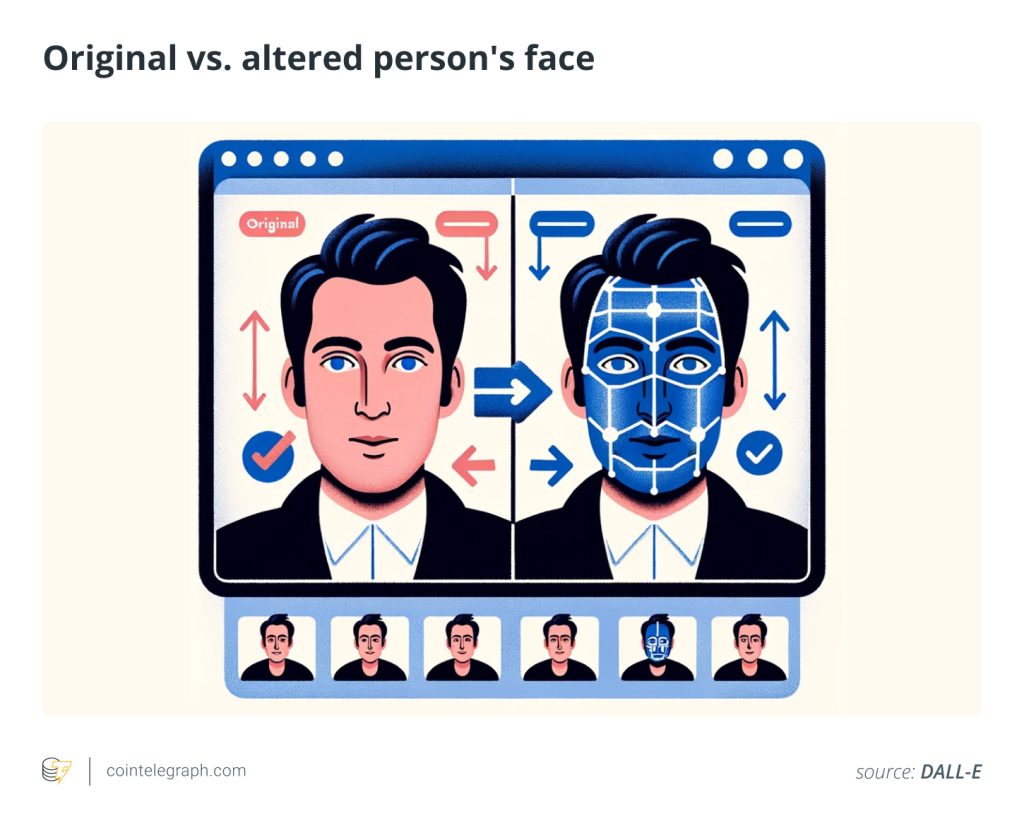

OpenAI appears to be holding back a new “highly accurate” tool capable of detecting content generated by ChatGPT over concerns that it could be tampered with or cause non-English users to avoid generating text with artificial intelligence models.

In a blog post back in May, the company said it was working on various methods to detect content generated specifically by its products. On Aug. 4, the Wall Street Journal published an exclusive report indicating that plans to release the tools had stalled amid internal debates over the ramifications of their release.

In the wake of the WSJ’s report, OpenAI updated its May blog post with new information about the detection tools. The long and short of it is that there’s still no timetable for release, despite the company’s assertion that at least one tool for determining text provenance is “highly accurate and even effective against localized tampering.”

Unfortunately, the company claims that bad actors could still bypass detection and, as such, it’s unwilling to release the tool to the public.

In another passage, the company seems to imply that non-English speakers could be “stigmatized” for using AI products to write, because of an exploit that involves translating English text into another language to bypass detection.

“Another important risk we are weighing is that our research suggests the text watermarking method has the potential to disproportionately impact some groups. For example, it could stigmatize use of AI as a useful writing tool for non-native English speakers.”

While a number of products and services currently purport to detect AI-generated content, to the best of our knowledge, none has demonstrated a high degree of accuracy across general tasks in peer-reviewed research.

OpenAI’s tool would be the first internally developed system to rely on invisible watermarking and proprietary detection methods for content generated specifically by the company’s models.
